Upskilling has always been part of staying relevant in tech. But every so often, the industry shifts in a way that calls for more than just new skills; it calls for a rethinking of how teams are built and how work gets done.

That’s where we are now with AI, and the shift is changing what it means to run a high-performing development team.

At HatchWorks AI, we’ve been navigating that change head-on, and we’ve found that a redesign of the team structure and workflows is in order.

This guide lays out what that redesign looks like, drawing from our work with GenDD (Generative-Driven Development). If you’re figuring out how to evolve your team for an AI-native future, breathe a sigh of relief, because you’re in the right place.

Is Software Development As We Knew It Dying?

You’ve seen the LinkedIn posts. You know, the ones claiming artificial intelligence (AI) will be the end of software developers because businesses won’t need anyone to write code anymore, and that software development as we know it is dying.

They’re written to drive engagement, but still you wonder…is there any truth to it?

Well, yes and no. Some companies do think they can replace their software developers with AI. Microsoft recently laid off 6,000 employees, around 40% of whom were engineers. However, the truth is more nuanced than a simple 1:1 swap.

What’s really changing is the software development process itself.

The savviest development teams are integrating AI into the software development lifecycle and reshaping how work gets done. Modern software engineers are now managing AI agents more than writing boilerplate themselves.

Yes, projects may require fewer people, but make no mistake: developers (human ones) are still essential. Even if AI can perform tasks typically done by humans, someone still needs to manage it.

For companies that are laying off developers, it’s too early to say whether they’ve made a big mistake. But there is already a cautionary tale: Klarna recently started hiring humans again after two disappointing years with its AI customer service agents.

Instead of replacing talent, companies should be building smaller pods of software developers. You can take your existing developers and split them into these pods, or AI development teams, so more projects can be completed without spreading any single developer too thin.

What is an AI Development Team?

An AI development team is one that embeds AI into the software development lifecycle, working alongside AI systems to build faster than they could on their own.

Team members manage workflows, set intent, and design prompts that guide machine outputs. The shift puts human intellect to work defining intent rather than replacing it.

Then, AI takes on repeatable execution (coding, testing, refining) while humans focus on strategy, oversight, and product alignment. Human intervention is intentional and focused on the areas where it makes the biggest difference, like refining AI-generated outputs, guiding architectural decisions, and aligning deliverables with business strategy.

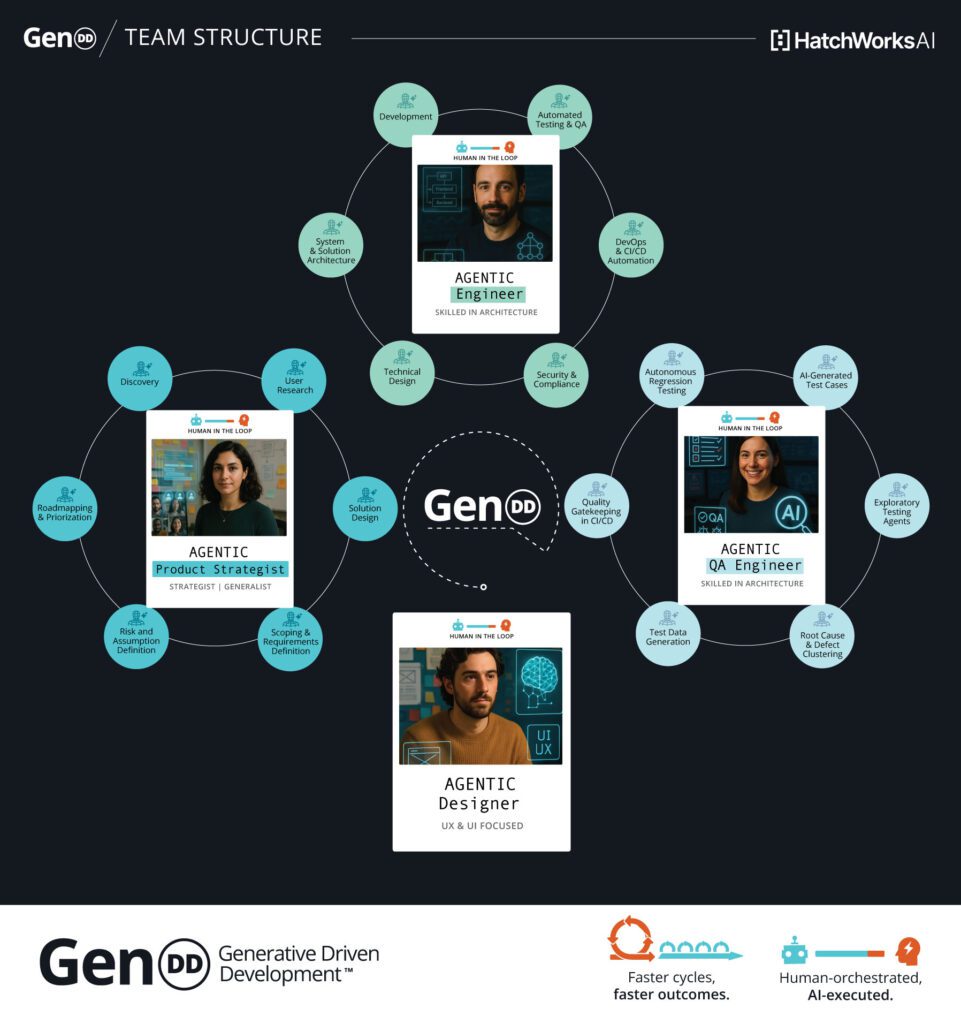

It’s the team model we use at HatchWorks AI with our Generative-Driven Development (GenDD) approach. In that approach, software developers become orchestrators of AI.

📚Read all about our GenDD methodology and how you can adopt it in your business here.

How AI Development Teams are Approaching the SDLC with GenDD

Most teams are still applying AI at the edges. They use autocomplete in their IDEs, maybe accept a code suggestion here or a test case there.

AI development teams are embedding AI throughout the software development lifecycle.

With GenDD, the SDLC gets restructured so that human team members define goals, manage priorities, and provide context. AI systems carry out the repeatable execution: writing code, testing outputs, and optimizing results. Throughout, these teams run continuous review loops on AI work, checking and refining generated outputs as they go.

The process is faster, more adaptive, and centered around collaboration between people and machines.

At the heart of this model are three core pillars that reshape how AI-native teams plan, build, and learn in real time.

Pillar 1 – Context-First Collaboration

Large language models have been known to hallucinate a time or two, which is why AI development teams prioritize giving them accurate information, quality data, and complete context before having them execute a single task.

Teams often use prompt packs, shared project memories, and retrieval-augmented generation (RAG) pipelines to keep context flowing.

This creates continuity and ensures outputs from design, coding, or testing stages are consistent and rooted in the same understanding of the work.
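To make context-first collaboration concrete, here is a minimal Python sketch of prompt assembly from a shared project memory. It assumes a simple keyword-overlap retriever as a stand-in for the embedding search a real RAG pipeline would use; the `Note`, `retrieve`, and `build_prompt` names and the sample notes are hypothetical, not part of any GenDD tooling.

```python
import re
from dataclasses import dataclass


@dataclass
class Note:
    """One entry in a shared project memory (e.g. a decision record or style rule)."""
    source: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; underscores and punctuation act as separators.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(memory: list[Note], query: str, k: int = 2) -> list[Note]:
    """Return up to k notes sharing the most words with the query.

    Keyword overlap stands in for the embedding search a real RAG pipeline would use.
    """
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(note.text)), note) for note in memory]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep memory order
    return [note for _, note in relevant[:k]]


def build_prompt(instructions: str, memory: list[Note], task: str, k: int = 2) -> str:
    """Assemble a context-first prompt: standing instructions, retrieved context, then the task."""
    notes = retrieve(memory, task, k)
    context = "\n".join(f"- [{n.source}] {n.text}" for n in notes) or "- (no matching context)"
    return f"{instructions}\n\nProject context:\n{context}\n\nTask:\n{task}"


# Hypothetical project memory and task, for illustration only.
memory = [
    Note("adr-007", "Store all timestamps in UTC"),
    Note("style-guide", "Database column names use snake_case"),
    Note("marketing", "Launch email goes out on Friday"),
]
task = "Add a created_at column to the invoices table and store timestamps consistently"
prompt = build_prompt("You are a careful backend engineer.", memory, task)
print(prompt)
```

Because every prompt is built from the same memory, a design task and a coding task that touch the same topic see the same decisions. Swapping the keyword retriever for a vector store would not change the shape of the prompt.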

Pillar 2 – Human-in-the-Loop Orchestration

AI development teams don’t just prompt and hope for the best (cough, cough—vibe coding). They decide which tools to use when, what tasks to automate, and how to sequence multi-agent flows to match business priorities.

The human role shifts from execution to orchestration: people shape workflows by setting constraints and reviewing outputs for alignment and quality. They also account for how the underlying techniques behave (transformer-based models, RAG, and models fine-tuned with reinforcement learning from human feedback) when choosing the architecture and constraints of multi-agent workflows.

Done right, this changes project management itself. Instead of handing off work across silos, teams guide AI through entire phases of the lifecycle.

Pillar 3 – Continuous, AI-Driven Feedback

AI development teams get feedback faster and more often.

They use self-healing tests to monitor code as it changes, conversational debugging tools to surface issues while they work, and AI-assisted retros to reflect on sprint performance in real time, turning team insights into immediate process improvements.
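Self-healing tests come in several forms. One common form is a self-healing UI locator: when the primary selector for an element breaks after a code change, the test tries known alternatives, passes if one matches, and records the change for review. The Python sketch below is a simplified illustration under that assumption; the page is modeled as a dictionary, and `SelfHealingLocator` is a hypothetical name, not a specific tool’s API.

```python
from typing import Optional


class SelfHealingLocator:
    """Find a UI element by trying a primary selector, then known fallbacks.

    A "page" is simplified here to a dict of selector -> element text; real
    tools query a live DOM instead.
    """

    def __init__(self, name: str, candidates: list[str]):
        self.name = name
        self.candidates = list(candidates)      # ordered: most trusted selector first
        self.healed_to: Optional[str] = None    # set when a fallback had to be used

    def find(self, page: dict[str, str]) -> str:
        for i, selector in enumerate(self.candidates):
            if selector in page:
                if i > 0:
                    # Record the heal so a person can review it, and promote the
                    # working selector so the next run tries it first.
                    self.healed_to = selector
                    self.candidates.insert(0, self.candidates.pop(i))
                return page[selector]
        raise LookupError(f"{self.name}: no candidate selector matched")


# Hypothetical pages before and after a front-end refactor renamed the button's id.
old_page = {"#submit-btn": "Submit"}
new_page = {"button[data-test=submit]": "Submit"}

submit = SelfHealingLocator("submit button", ["#submit-btn", "button[data-test=submit]"])
assert submit.find(old_page) == "Submit" and submit.healed_to is None
assert submit.find(new_page) == "Submit"  # passes via the fallback selector
print(submit.healed_to, submit.candidates)
```

The recorded heal matters as much as the fallback: it is the signal a QA engineer reviews to decide whether the change was intended or a regression slipped through.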

Core Roles in the AI Development Team of the Future

As an industry, we have to reconsider what high-impact human contribution looks like now, so that it’s redesigned rather than replaced.

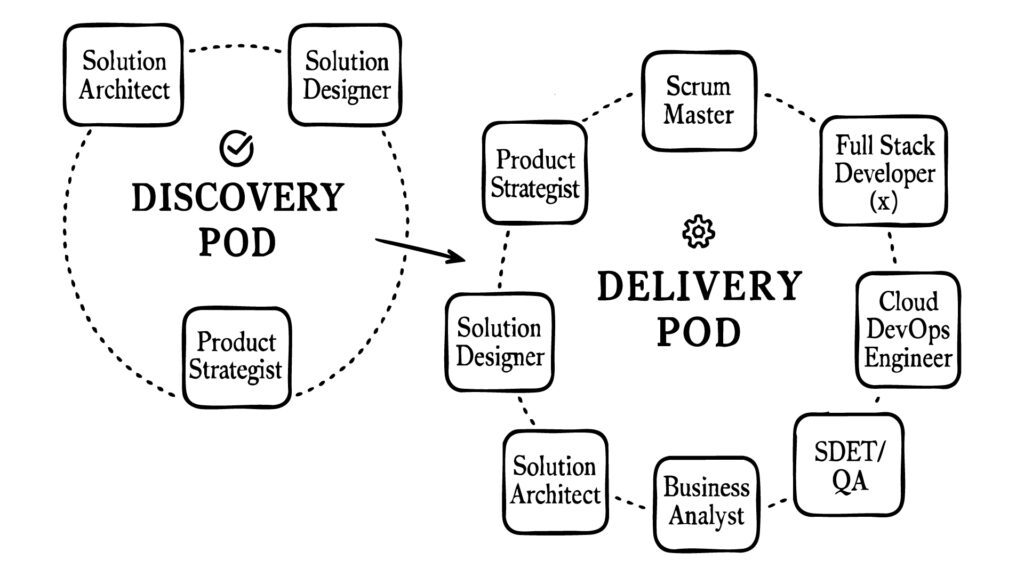

Traditional software development teams were often organized in rigid, siloed structures, handing work off from one role to the next.

In contrast, today’s AI-native teams are designed for continuous collaboration and adaptability across both discovery and delivery, as shown below:

The Agentic Product Strategist

Legacy roles this builds on: Product Manager, Business Analyst, sometimes Innovation Lead

The Agentic Product Strategist is the connective tissue between business intent and AI-powered execution. Their job is to define what success looks like and then use AI to pressure-test that definition before a single line of code is written.

They explore product ideas by prompting AI models with user feedback, competitor insights, and strategic goals. Instead of guessing what might work, they can simulate outcomes and map trade-offs in minutes.

Skills that matter most:

- Strategic thinking rooted in business outcomes

- Prompt fluency and experience with AI-assisted decision-making

- Curiosity, context awareness, and willingness to iterate

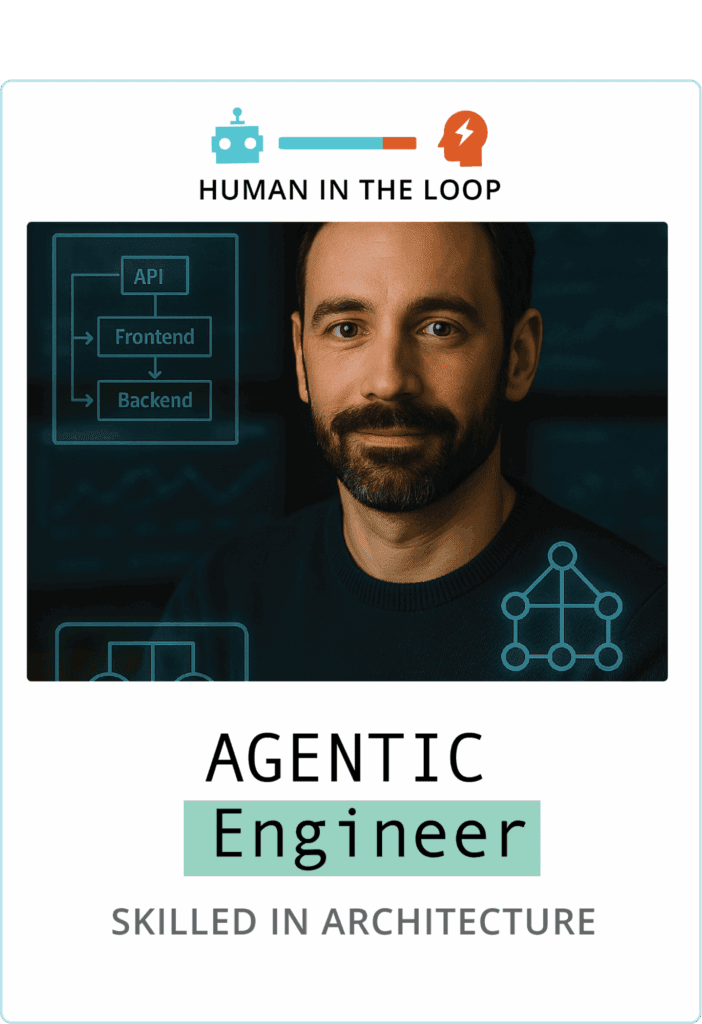

The Agentic Engineer

Legacy roles this builds on: Software Developer, Tech Lead

The Agentic Engineer blends software development expertise with AI orchestration. Instead of writing every line of code themselves, they coordinate AI agents to do the heavy lifting, while focusing their energy on architecture, integration, and intent-setting.

They choose the right models for the task, craft effective prompts, and manage execution through multi-agent workflows. They’re not coding in the traditional sense; they’re designing how the code gets written, tested, and deployed with AI in the loop.

This role still requires strong technical chops, but the real value lies in their ability to design systems that balance machine speed with human oversight.

Skills that matter most:

- Fluency in programming languages and modern frameworks

- Experience orchestrating AI tools and deep learning models

- Systems thinking, architecture, and prompt design expertise

The Agentic QA Engineer

Legacy roles this builds on: QA Engineer, SDET

The Agentic QA Engineer is focused on one thing: making sure AI doesn’t accelerate broken code. They work alongside developers to design test coverage before features are built and use AI to generate, run, and refine those tests as the product evolves.

It’s a shift from gatekeeping to continuous guidance, and it changes the role from reactive to proactive.

Skills that matter most:

- Deep understanding of software quality and risk

- Ability to collaborate with AI testing tools and tune outputs

- Pattern recognition and critical thinking under fast-changing conditions

The Agentic Designer

Legacy roles this builds on: UX Designer, Product Designer

In traditional teams, designers often work ahead of the build, handing off specs to developers and hoping the user intent survives. That model breaks down in GenDD. When development is continuous and AI can generate dozens of UI variants in minutes, designers need to be embedded and adaptive.

That’s why the Agentic Designer works inside the pod, shaping the user experience in lockstep with development. They use AI to explore interaction patterns, simulate user behavior, and validate design choices as features are being built.

But speed isn’t the only goal. This role ensures rapid development doesn’t compromise usability. It requires strong design instincts and the ability to steer AI tools toward outcomes and interfaces that make sense for real users.

Skills that matter most:

- Systems-level UX thinking

- Experience using AI to prototype, test, and adapt designs

- The judgment to balance speed with user impact

Specialist Roles Emerging on AI-First Teams

Most GenDD teams don’t start with specialists. In fact, one of the benefits of GenDD is that small, focused pods can deliver more with fewer people.

But as AI-native teams scale (more pods, more agents, more complexity), new roles emerge to keep systems aligned and performance high.

AI Solutions Architect: Designing End-to-End Agent Systems

Once you’ve got more than one pod running—or your agents are coordinating across multiple workflows—you need someone thinking at the systems level. That’s where the AI Solutions Architect comes in.

They design how deep learning models, neural networks, and machine learning algorithms fit together across tools and stages.

Their job is to make the whole system reliable and adaptive under scale. They anticipate edge cases, failure modes, and integration gaps before they slow the team down.

This role becomes essential when AI is helping execute multiple steps of your development process.

Prompt Librarian: Crafting Reusable Prompt Libraries

When teams start copying prompts between projects or revising the same instructions for the tenth time, it’s time to bring in a Prompt Librarian, or to hand the role to someone on the team who has shown initiative.

This role curates and maintains prompt libraries tailored to different AI models and workflows. They standardize how tasks are executed, how tone is applied, and how context is embedded. Especially in teams working across a long software development process, the Prompt Librarian helps ensure that machine outputs are predictable, reusable, and aligned with project goals.
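A prompt library can start as something very small. The Python sketch below assumes prompts are keyed by task name and model family, versioned on every change, and rendered with the standard library’s `string.Template`; `PromptLibrary`, `PromptEntry`, and the sample template are hypothetical.

```python
from dataclasses import dataclass
from string import Template
from typing import Optional


@dataclass(frozen=True)
class PromptEntry:
    name: str
    model_family: str
    version: int
    template: Template


class PromptLibrary:
    """A small, versioned store of prompt templates keyed by task name and model family."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], list[PromptEntry]] = {}

    def register(self, name: str, model_family: str, text: str) -> PromptEntry:
        versions = self._entries.setdefault((name, model_family), [])
        entry = PromptEntry(name, model_family, len(versions) + 1, Template(text))
        versions.append(entry)
        return entry

    def render(self, name: str, model_family: str, version: Optional[int] = None, **fields: str) -> str:
        versions = self._entries[(name, model_family)]
        entry = versions[-1] if version is None else versions[version - 1]
        # substitute() raises KeyError when a placeholder has no value, so a prompt
        # with missing context fails loudly instead of reaching the model.
        return entry.template.substitute(**fields)


library = PromptLibrary()
library.register("unit-tests", "general", "Write $framework tests for $function. Cover: $edge_cases.")
library.register(
    "unit-tests",
    "general",
    "You are a QA engineer. Write $framework tests for $function. Cover: $edge_cases. Return only code.",
)
print(library.render("unit-tests", "general", framework="pytest",
                     function="parse_invoice", edge_cases="empty input, negative totals"))
```

Keeping older versions addressable lets a team compare outputs before promoting a revised prompt, and keying by model family leaves room for wording tailored to different models.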

How many people do you need on an AI-native dev team?

Not as many as you think.

A typical GenDD dev team (or pod) includes just three to five people: a Product Strategist to define direction, an Engineer to orchestrate execution, and a QA lead to embed quality from the start. Designers and AI specialists can rotate in depending on the needs of the project.

This smaller structure works because it’s amplified by AI agents.

Compared to a traditional dev team with 8–12 people and multiple layers of handoff, GenDD pods deliver more with less. They maintain continuity, reduce coordination overhead, and improve the team’s performance through tighter cycles.

Each pod aligns to a clear set of business objectives, making it easier to scale by duplication.

Want to move faster or build more projects at once? Set up another pod.

Skills Your Team Will Need to Thrive

Every role in this new software development team will come with its own skill set, but there are also core competencies the entire team should have if they want to build effectively with AI:

- Prompt Fluency: Everyone should know how to write clear, structured prompts that guide AI behavior, whether they’re generating code, tests, or user flows.

- Constraint Design: Building with AI means defining the boundaries. It’s not enough to ask for output; you need to shape it with rules, examples, and structured guidance.

- Agentic Thinking: Teams need to think in systems. How can agents collaborate? Where can workflows be handed off to AI? This isn’t linear tasking anymore.

- Strategic Thinking: AI can execute, but it can’t align to your business strategy—that’s on your team. Everyone needs to understand how their work drives the outcome.

- Problem Solving (Still a core skill): Models aren’t magic. Bugs happen, context drops, things go sideways. The ability to diagnose and correct is still essential.

- Comfort with Code: Even if AI writes it, someone has to review, refactor, and debug. Programming languages are still a critical part of the toolkit. And what happens if the AI you use goes down one day? You still need to know how to build without AI.

- Learning Agility: The tools will change. Fast. The best teams embrace feedback, evolve quickly, and keep their stack and their skills sharp.

- Pattern Recognition: Teams must get good at identifying patterns in AI behavior, outputs, and system performance, spotting when things are going right, when they’re drifting, and when intervention is needed to course-correct.
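Constraint design and pattern recognition meet in practice when a team spells out rules and examples in the prompt, then checks every response against them. This Python sketch assumes the model is asked for a JSON object with fixed keys; `build_constrained_prompt`, `check_output`, and the ticket schema are hypothetical, and the validator checks structure and types only, not every rule in the prompt (such as the priority range).

```python
import json


def build_constrained_prompt(task: str, rules: list[str], examples: list[tuple[str, str]]) -> str:
    """Combine a task, numbered rules, and worked examples into one prompt."""
    lines = ["Task: " + task, "", "Rules:"]
    lines += [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    for given, expected in examples:
        lines += ["", "Example input: " + given, "Example output: " + expected]
    return "\n".join(lines)


def check_output(raw: str, required: dict[str, type]) -> list[str]:
    """Return a list of constraint violations (an empty list means the output passes)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["expected a JSON object"]
    problems = []
    for key, expected_type in required.items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not isinstance(data[key], expected_type):
            problems.append(f"{key} should be {expected_type.__name__}")
    problems += [f"unexpected key: {key}" for key in sorted(set(data) - set(required))]
    return problems


# Hypothetical ticket schema and prompt, for illustration only.
SCHEMA = {"title": str, "priority": int}
prompt = build_constrained_prompt(
    "Turn the bug report into a ticket.",
    ["Respond with JSON only.", "Use exactly the keys title and priority.",
     "priority is an integer from 1 (highest) to 4."],
    [("Checkout button does nothing on Safari",
      '{"title": "Checkout button unresponsive on Safari", "priority": 1}')],
)
print(prompt)
print(check_output('{"title": "Fix login", "priority": "high", "owner": "sam"}', SCHEMA))
```

Logging these violations over time is one way to spot drift: a rising count of, say, unexpected keys signals that a prompt or model change needs attention.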

How to Prepare Your Team for This Transition

Adopting GenDD and this new software development team structure doesn’t require you to throw out everything you know about software development, but it does require a shift in how your people, processes, and platforms work together.

Here at HatchWorks AI, we lead workshops to help teams adopt the GenDD mindset and activate the methodology in their org.

This is what we tell them:

Audit Your Current Team’s Readiness

Before introducing new roles, tools, or methods, you need to understand your baseline. Think of it like a systems check and ask:

- Where does most manual work happen?

- Are roles siloed or cross-functional?

- Who’s already using AI tools, and for what?

This audit reveals where GenDD principles can deliver the most impact. You may find certain teams are already practicing early forms of orchestration, while others may need a complete rethink.

Run a GenDD Pilot and Workshop

Trying to overhaul your entire organization at once is a recipe for chaos. Instead, isolate one high-impact opportunity and pilot GenDD in a focused, contained way.

This could be a specific feature build, a new internal tool, or a project with flexible deadlines. Bring together a small team and give them the space to experiment with AI-powered workflows.

For a guided approach, our HatchWorks GenDD Workshop immerses your team in what it actually takes to orchestrate with AI. We cover:

- Building multi-agent flows

- Applying prompt engineering at scale

- Reimagining handoffs and ownership

Train Leaders on Artificial Intelligence Orchestration

Your transformation will rise or fall based on how your leaders adapt. Project managers, team leads, and product owners must learn how to manage intelligent systems alongside their people.

That means letting go of micromanaging tasks and instead:

- Defining clear intent for AI execution

- Evaluating AI-generated outcomes against strategic goals

- Guiding cross-functional pods through high-trust, high-context workflows

Start with One GenDD Pod

Instead of building bigger teams, GenDD scales through replicable pods, each small, empowered, and AI-native. A typical pod has three to five people, built around three core roles (an Agentic Product Strategist, an Agentic Engineer, and an Agentic QA Engineer), with optional roles like an Agentic Designer or AI Solutions Architect.

This structure unlocks:

- Tighter context loops

- Less overhead in coordination

- Higher ownership and autonomy

It also makes scaling easier. So start small and perfect the process with one pod before scaling wide.

Adopt New Onboarding Standards

In GenDD, every new hire is expected to collaborate with AI, not just use it. That requires a foundational understanding of prompting, tooling, and orchestration from day one.

Onboarding should include:

- How to design effective prompts

- How to evaluate AI outputs

- How your team’s specific AI stack works together

- When to intervene vs. let AI run autonomously

Think of it as onboarding into a co-creation culture. When every contributor understands how to think with AI, not just about it, your team becomes more adaptable, autonomous, and aligned.

As AI evolves, your team evolves with it. Just think: this is the worst AI will ever be.

Tools Powering the AI-Native Dev Stack

The GenDD stack is shaped around how teams actually work, with tools selected to support specific responsibilities across the SDLC.

Here are the tools AI development teams rely on, by role:

| Role | Tool(s) | Use |
|---|---|---|
| Agentic Product Strategist | ChatGPT / Claude / Gemini – Used for early-stage ideation, strategy simulation, and turning raw insights into feature roadmaps.<br>Notion AI / Qodo – Supports requirements gathering and collaborative planning workflows.<br>GPT-4 with RAG – Integrates company data or product context to inform product-market fit and trade-off analysis. | Strategic exploration, product visioning, simulation |
| Agentic Engineer | Cursor – AI-native IDE deeply integrated with assistant agents for pair programming, refactoring, and debugging.<br>Devin / Codeium Windsurf – Multi-agent development environments for end-to-end task management and software creation.<br>LangChain / AutoGen / CrewAI – Used to build and orchestrate multi-agent chains aligned with business logic. | Execution, orchestration, architecture |
| Agentic QA Engineer | TestGPT / Agentic Test Agents – AI systems that auto-generate test coverage based on feature specs.<br>Aider / Codegen agents – Help create test stubs and validate regressions in real time.<br>Prompt-based testing tools – Allow QA to simulate edge cases and detect failure patterns with minimal manual setup. | Quality enforcement, test automation, continuous feedback |
| Agentic Designer | v0.dev – Transforms natural language into production-ready UI components.<br>Lovable / Figma AI Plugins – AI-assisted prototyping for faster design iterations and user testing.<br>Computer vision-enabled AI tools – Used in multimodal feedback scenarios to align visual inputs with functional design. | UI/UX prototyping, visual interaction, multimodal design |

Ready to Build the GenDD Way?

AI development is here, and you want to make sure you’re doing it the right way—the GenDD way.

It gives your team structure, clarity, and speed by turning AI into a true collaborator rather than a chaotic sidekick.

In our GenDD Training Workshop, we take a tailored approach, helping you redesign your team roles, workflows, and delivery models.

Your teams will learn how to:

- Make GenDD part of your daily development

- Integrate AI into your existing stack

- Build a repeatable, AI-native system for delivery

Fill out the form, and let’s get your team building the GenDD way.