Everywhere you look, there seems to be someone using AI to get ahead. Maybe they’ve built a product on it. Maybe they’ve 10x’d their productivity.

And you wonder, what am I missing? Why is it that when I use AI, I’m left disappointed with the output?

The secret to getting the most out of GenAI isn’t the technology itself. It’s how we interact with it: how we prompt it.

At HatchWorks, we’ve spent considerable time learning the ins and outs of AI, including how to master Gen AI prompts across several different tools.

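In this guide, we’re sharing what we’ve learned, including how AI prompts work, how to write and format them, common mistakes to avoid, the main types of prompts, and real-world examples from software development.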

That way you can learn what everyone else seems to know already and be on your way to more productive and efficient work.

What are AI Prompts and How Do They Work?

AI prompts are instructions given to an artificial intelligence system, guiding it to generate a specific output or perform a particular task.

These prompts can range from simple text commands to complex, structured queries, and play a crucial role in shaping the AI’s response and output quality.

Here’s an example of a prompt:

Write a Python function to calculate the Fibonacci sequence up to the 10th term. Include comments for clarity and ensure the code is optimized for efficiency.
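For illustration, here is a minimal sketch of the kind of function that prompt might return. It assumes the sequence starts at 0 and 1 and that “up to the 10th term” means the first ten terms; an AI tool may interpret the request differently.

```python
def fibonacci(n_terms: int = 10) -> list[int]:
    """Return the first n_terms Fibonacci numbers, starting from 0 and 1."""
    if n_terms <= 0:
        return []
    sequence = [0]
    a, b = 0, 1
    # Iterative approach: O(n) time, with none of the repeated work of naive recursion.
    while len(sequence) < n_terms:
        sequence.append(b)
        a, b = b, a + b
    return sequence


print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

An iterative loop like this is one reasonable answer to the “optimized for efficiency” part of the prompt.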

How Do AI Prompts Work: The Mechanics of LLMs (Large Language Models)

When you enter an AI prompt, it’s processed by an AI model: usually a sophisticated neural network trained on vast datasets, most often a large language model (LLM).

This model uses patterns learned during training to predict language, generating its response one token (a word or piece of a word) at a time. To do this, it relies on a neural network architecture called the transformer.

Transformers have become the standard architecture for training LLMs. They interpret a prompt based on their training, taking into account the context, intent, and specifics of the input. A transformer looks at each word, but also at how each word relates to the ones around it (a mechanism called attention). It’s like reading and using context clues to figure out what’s actually being said.
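To make “how each word relates to the ones around it” more concrete, here is a toy version of scaled dot-product self-attention, the core operation inside a transformer. It’s a simplified sketch: the 2-dimensional word vectors are made up, and real models learn separate query, key, and value projections across many layers and attention heads.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Toy self-attention: each output is a weighted mix of every input vector.

    Simplification: queries, keys, and values are the raw vectors themselves.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this word to every word in the sequence, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Blend all value vectors according to the attention weights.
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
    return outputs


# Hypothetical 2-D embeddings for the words "river", "bank", "money".
words = ["river", "bank", "money"]
embeddings = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]
for word, vec in zip(words, self_attention(embeddings)):
    print(word, [round(x, 3) for x in vec])
```

The output for “bank” becomes a blend of all three vectors, weighted by similarity, which is how surrounding words end up shaping each word’s representation.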

The AI then generates a response or output, attempting to align as closely as possible with the given prompt.

Under the hood, the model repeatedly predicts the most likely next token given the prompt and everything it has generated so far, until the response is complete.
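As a loose illustration of that predict-append-repeat loop, the sketch below uses a tiny bigram model (counts of which word follows which) instead of a neural network. Real LLMs work over subword tokens and compute probabilities with a transformer, but the generation loop has the same basic shape.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """Count which word follows which: a crude stand-in for learned patterns."""
    words = corpus.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        model[current][nxt] += 1
    return model


def generate(model: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    output = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model has never seen this word followed by anything
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
    return " ".join(output)


corpus = "the model reads the prompt and the model writes the answer"
model = train_bigram_model(corpus)
print(generate(model, "the", max_new_words=3))  # the model reads the
```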

The beauty of Generative AI is that it doesn’t simply regurgitate stored data in response to prompts. Instead, it creates an original, contextual response based on what the human user specifies.

Writing AI Prompts (Tips and Best Practices)

If good prompts are the secret sauce that we need to get great results, how do we make them?

First, we need to consider the core elements of a prompt. Those are:

- Persona

- Context

- Data

The persona you assign to your Gen AI tool provides the context it needs to assume an appropriate role for the task.

Context gives the AI background on how it should interact, the process it should follow, the things it should account for, and the task at hand.

Data refers to specific information or input the AI needs to process, analyze, or incorporate into its responses.
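One simple way to keep those three elements distinct is to assemble prompts from a small template. The `PromptParts` and `build_prompt` names below are hypothetical, a sketch of the idea rather than a required format.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    persona: str  # who the AI should act as
    context: str  # background, process, and the task at hand
    data: str     # the specific input to process


def build_prompt(parts: PromptParts) -> str:
    """Combine persona, context, and data into one prompt string."""
    return (
        f"You are {parts.persona}.\n\n"
        f"{parts.context}\n\n"
        f"Here is the input to work with:\n{parts.data}"
    )


prompt = build_prompt(PromptParts(
    persona="a senior Python developer reviewing code for a fintech startup",
    context="Review the function below for bugs and readability. List issues first, then suggest a fix.",
    data="def add(a, b): return a - b",
))
print(prompt)
```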

There is a method to the madness, one that’s been honed over time by users and creators of GenAI, many of whom are sharing their secrets and learnings along the way.

Some of their learnings include how to—and how not to—phrase things (such as using ‘do’ to provide guidance rather than ‘don’t’), how to structure the instructions (such as putting example texts in quotations), and limitations in what the AI tools can do no matter how specific your prompt becomes.

As a starting point for crafting effective prompts for AI, make sure they are:

- Specific: Clearly define what you need. Vague prompts lead to ambiguous results. For instance, instead of saying “Write a blog post,” specify the topic, tone, and key points.

- Structured: A logical, coherent structure helps the AI understand and follow your instructions more effectively.

- Contextual: Provide enough background information. For example, if you’re asking for a market analysis, mention the specific market and the type of analysis you’re seeking.

- Realistic: Set expectations that are achievable by the AI. Understanding the limitations and capabilities of the AI system you’re using is crucial.

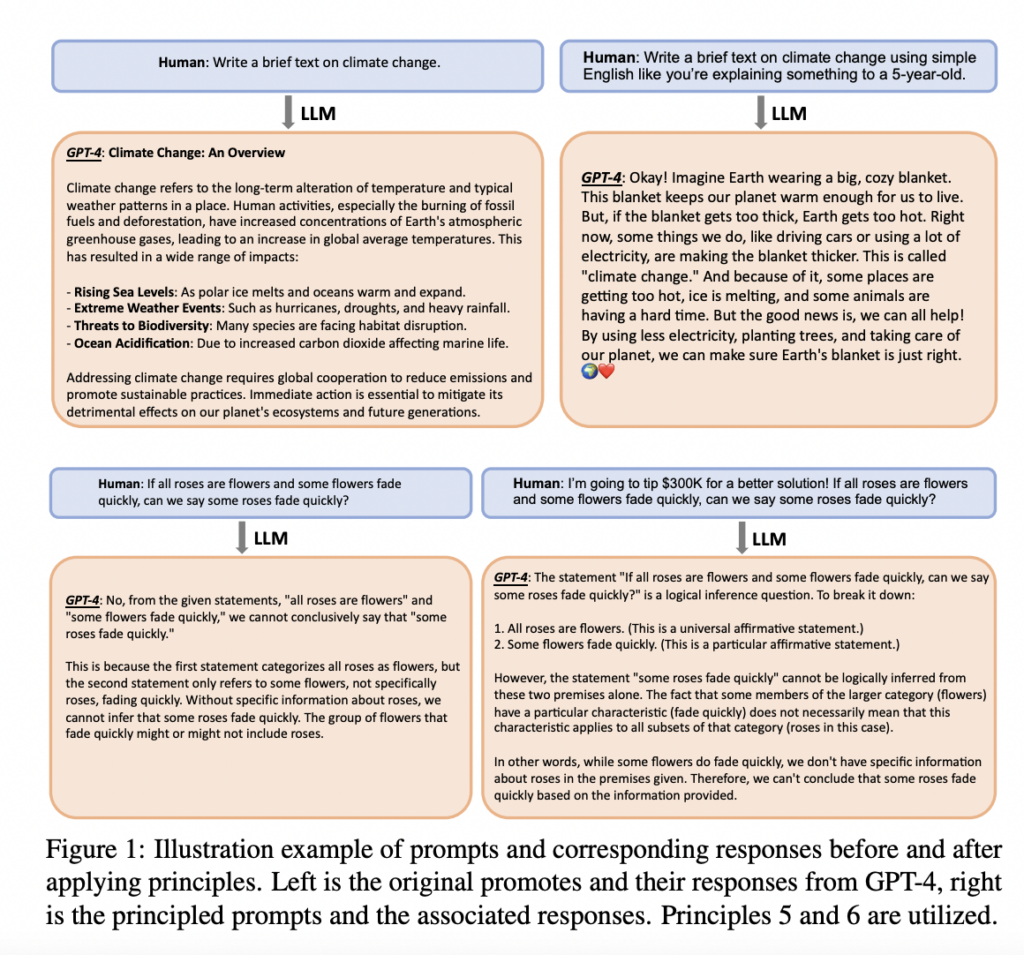

But that’s just enough to get you started. There’s a great resource that shows you how to write better prompts yourself: it outlines 26 guiding principles for creating good prompts for LLMs.

Some of our favorites are:

- “Integrate the intended audience in the prompt, e.g., the audience is an expert in the field.”

- “Break down complex tasks into a sequence of simpler prompts in an interactive conversation.”

- “Employ affirmative directives such as ‘do,’ while steering clear of negative language like ‘don’t’”

- “Add to your prompt the following phrase: ‘Ensure that your answer is unbiased and does not rely on stereotypes.’”

- “Allow the model to elicit precise details and requirements from you by asking you questions until he has enough information to provide the needed output (for example, ‘From now on, I would like you to ask me questions to…’).”

- “Assign a role to the large language models.”

We’d add to that list:

- Ask ChatGPT to provide recommendations on how to refine your prompt or ask a better question.

- Ask ChatGPT to ask clarifying questions to improve the prompt output.

Because why shouldn’t we use the AI itself to make us better prompt engineers?
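Several of these principles map directly onto how you structure a chat conversation. The sketch below uses the role/content message format common to chat-style LLM APIs such as OpenAI’s Chat Completions; the `principled_messages` helper and the message wording are our own illustration, and no API call is made.

```python
def principled_messages(task: str, audience: str) -> list[dict[str, str]]:
    """Build a chat message list that applies several of the principles above."""
    system = (
        "You are an experienced technical writer. "         # assign a role
        f"The audience is {audience}. "                      # name the intended audience
        "Use clear, plain language and concrete examples. "  # affirmative 'do' directive
        "Ensure that your answer is unbiased and does not rely on stereotypes."
    )
    user = (
        f"{task}\n\n"
        # Let the model suggest improvements and elicit details before answering.
        "Before you answer, suggest how I could improve this request, then ask me "
        "clarifying questions until you have enough information to provide the needed output."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


for message in principled_messages("Explain how our REST API handles rate limiting.",
                                   "an expert in the field"):
    print(f"[{message['role']}]\n{message['content']}\n")
```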

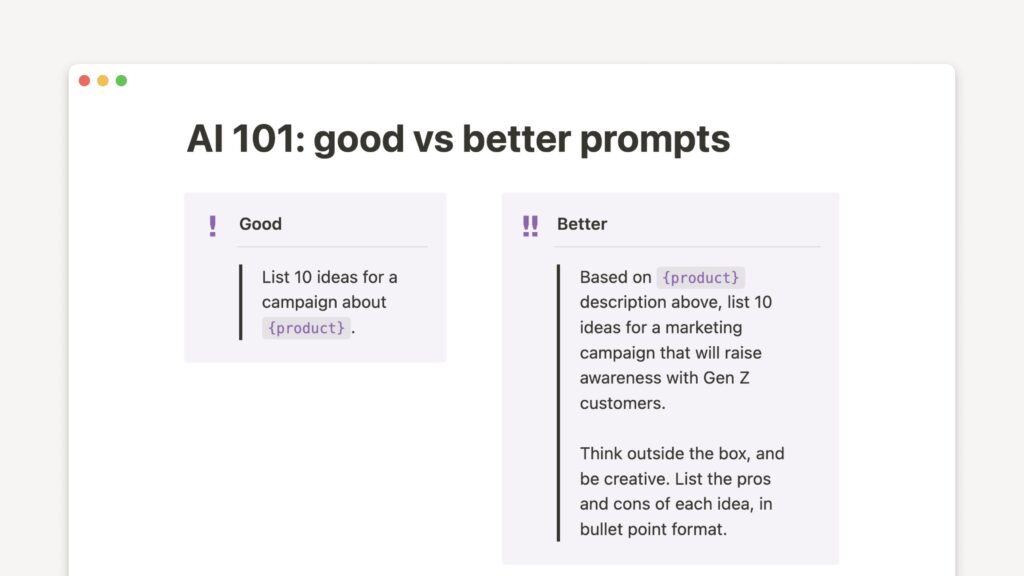

The image above, taken from that same source, shows exactly how changes to your prompt, such as adding more context, can generate better responses.

Prompt Formatting

A major part of making your prompt effective is the format you use when writing it. Just as with human readers, Generative AI tools tend to handle content more reliably when it’s broken up with line breaks. So when putting your prompt together, separate instructions, examples, questions, context, and input data with line breaks.

You also want to begin each section with something like this:

- ###Instruction###

- ###Example###

- ###Question###

These headers signal to the AI what follows.
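Here is a small sketch that assembles a prompt from labeled sections using the ###Section### markers and blank-line separation described above. The `format_sections` helper is hypothetical; any consistent delimiter should serve the same purpose.

```python
def format_sections(sections: dict[str, str]) -> str:
    """Render each section as a ###Name### header followed by its text.

    Sections are separated by blank lines; empty sections are skipped.
    """
    blocks = [
        f"###{name}###\n{text.strip()}"
        for name, text in sections.items()
        if text.strip()
    ]
    return "\n\n".join(blocks)


prompt = format_sections({
    "Instruction": "Summarize the customer feedback in three bullet points.",
    "Example": "- Checkout is slow on mobile",
    "Question": "Which issue should the product team fix first?",
})
print(prompt)
```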

Let’s look at some other formatting you can use in your prompts:

Markdown Formatting: ChatGPT understands and can generate responses using Markdown syntax. If you use characters like * (for bold or italic) or [ ] (for links), ensure you’re using them as intended in Markdown to avoid confusion.

Code Blocks: If you’re including code in your prompts or expecting code in responses, using backticks (`) for inline code and triple backticks (```) for code blocks helps ChatGPT recognize code snippets. This is crucial for clarity and proper formatting in responses involving code.
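If you build prompts in code, a small helper can wrap a snippet in a fenced block with a language tag. This is a minimal sketch; `code_prompt` is a hypothetical name, and the fence string is constructed in code so the example itself displays correctly.

```python
FENCE = "`" * 3  # triple backticks, built this way so this snippet renders cleanly


def code_prompt(instruction: str, code: str, language: str = "python") -> str:
    """Put the instruction first, then the code inside a fenced block."""
    return f"{instruction}\n\n{FENCE}{language}\n{code.strip()}\n{FENCE}"


snippet = "def area(r):\n    return 3.14 * r * r"
print(code_prompt("Explain what this function does and suggest an improvement.", snippet))
```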

Special Characters for Specific Instructions: Special characters like >, <, /, [, {, etc., are often used in programming and can be included in prompts to indicate specific formats, paths, or conditions. When used outside of code blocks, try to provide context to ensure ChatGPT understands their intended use.

Escape Characters: If you’re using special characters in a context where they might be interpreted as Markdown or part of a command but you want them treated as plain text, you can “escape” them by preceding them with a backslash (\). This tells ChatGPT to interpret the next character literally.
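If you assemble prompts programmatically, a small helper can apply that escaping for you. This sketch escapes a conservative set of Markdown-significant characters (CommonMark allows any ASCII punctuation to be backslash-escaped); how a given AI tool treats each escape isn’t guaranteed, so think of it as signaling intent.

```python
# Characters that Markdown may treat as formatting.
MARKDOWN_SPECIALS = set("\\`*_{}[]()#+-.!|<>~")


def escape_markdown(text: str) -> str:
    """Prefix each Markdown special character with a backslash."""
    return "".join("\\" + ch if ch in MARKDOWN_SPECIALS else ch for ch in text)


print(escape_markdown("Use *args and file_name.txt"))
```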

Write Prompts with Advanced Techniques

As GenAI and machine learning advance at a rapid speed, new prompting techniques emerge.

These include:

- Integrations

- Custom GPTs

- The use of @ symbols to involve multiple GPTs (or AI agents) in a single conversation

AI Tool Integrations

You can expand the capabilities of GenAI systems by seamlessly combining them with other software and services.

This approach allows users to leverage AI’s power within familiar environments, such as integrating GPT-powered chatbots into customer service platforms or embedding AI-driven content generation into content management systems.

Integrations enable a more efficient workflow, where AI can assist with tasks like data analysis, generating reports, or even automating responses to common queries.
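The “automating responses to common queries” case might look something like the sketch below. The `llm` parameter stands in for whichever model API your platform uses (for example, a thin wrapper around a chat-completion call); the FAQ content, function names, and escalation rule are all hypothetical.

```python
from typing import Callable, Optional

# Hypothetical knowledge the support team wants the AI to draw on.
FAQ = {
    "refund": "Refunds are processed within 5 business days.",
    "password": "Reset your password from Settings > Security.",
}


def draft_reply(ticket: str, llm: Callable[[str], str]) -> Optional[str]:
    """Draft a reply for a common query, or return None to escalate to a human."""
    matches = [answer for keyword, answer in FAQ.items() if keyword in ticket.lower()]
    if not matches:
        return None  # not a common query: leave it for a support agent
    prompt = (
        "You are a friendly customer support agent.\n"
        "Answer the customer using only these facts:\n"
        + "\n".join(f"- {fact}" for fact in matches)
        + f"\n\nCustomer message:\n{ticket}"
    )
    return llm(prompt)


# Fake model for local testing; swap in a real API client in production.
def echo_llm(prompt: str) -> str:
    return "DRAFT: " + prompt.splitlines()[-1]


print(draft_reply("How long does a refund take?", echo_llm))
print(draft_reply("My parcel arrived damaged", echo_llm))
```

Passing the model in as a function keeps the integration logic testable without network calls.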

Custom GPTs

Unlike the general-purpose models available to the public, custom GPTs can be configured with their own instructions and knowledge files, tailored to meet the unique needs of an organization or project.

They can use Retrieval-Augmented Generation (RAG), which combines a retrieval system with a generative model: it first retrieves relevant documents or data, then uses that context to generate more informed and accurate responses.

This customization allows for greater accuracy and relevance in the model’s outputs, making it particularly valuable in fields like medicine, law, or any area requiring deep domain expertise.
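The retrieve-then-generate flow behind RAG can be sketched in a few lines. This toy version scores documents by word overlap; production RAG systems typically use vector embeddings and a vector store instead, and custom GPTs handle retrieval for you. The documents and helper names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def augmented_prompt(query: str, documents: list[str]) -> str:
    """Step 1: retrieve relevant context. Step 2: hand it to the model with the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below. If the answer isn't there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Patients must fast for 8 hours before the glucose test.",
    "The clinic parking lot closes at 9 pm.",
    "Glucose test results are available within 48 hours.",
]
print(augmented_prompt("How long before the glucose test should I fast?", docs))
```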

People can also build these custom GPTs and offer them to other users. So if someone has built a custom GPT to meet a purpose you share, you won’t have to build your own.

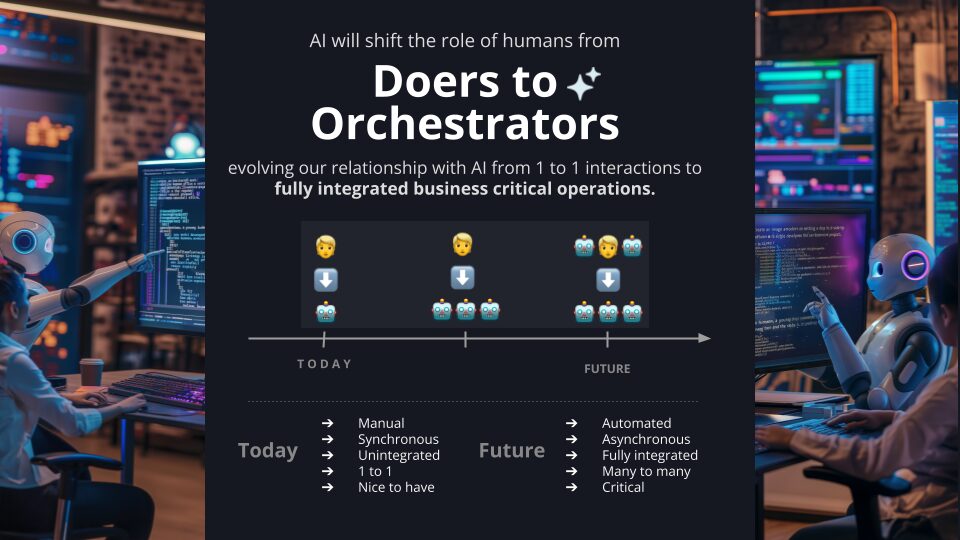

AI Agents

The use of @ symbols to invoke multiple GPTs (or AI agents) in a single conversation is an innovative technique available in ChatGPT that significantly enhances the versatility of AI interactions.

By employing this method, users can orchestrate conversations where different AI agents, each with its own expertise or personality, contribute to the dialogue. This multi-agent approach can simulate more complex, nuanced conversations, akin to a group of experts each weighing in on their area of specialization.

It opens up new possibilities for collaborative AI-driven problem-solving, where the combined insights of several specialized agents can provide a well-rounded response to complex customer inquiries.
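ChatGPT handles @-mentions for you, but the underlying idea (routing one message to several specialized agents and collecting their answers) can be sketched as follows. This is a hypothetical illustration, not how ChatGPT implements the feature; each “agent” here is just a function.

```python
import re
from typing import Callable

# Hypothetical specialist agents; in practice each would call its own model or GPT.
AGENTS: dict[str, Callable[[str], str]] = {
    "security": lambda msg: f"Security review of: {msg}",
    "tester": lambda msg: f"Test ideas for: {msg}",
}


def route(message: str) -> dict[str, str]:
    """Send the message (minus @mentions) to every mentioned agent that exists."""
    mentioned = re.findall(r"@(\w+)", message)
    task = re.sub(r"@\w+\s*", "", message).strip()
    return {name: AGENTS[name](task) for name in mentioned if name in AGENTS}


for agent, reply in route("@security @tester check the new login endpoint").items():
    print(f"{agent}: {reply}")
```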

Common Mistakes to Avoid When Crafting Your Prompts

You put a prompt in and the results were… underwhelming, to say the least. You sit there wondering what went wrong.

The fault may lie with your prompt. Here’s where prompts often go wrong:

Over-Complication:

One of the most frequent errors is over-complicating the prompt.

This happens when too much technical jargon, overly complex sentences, or multiple questions are packed into one prompt. This can confuse the AI, leading to outputs that are off-target or overly convoluted.

For instance, a prompt like “Develop a comprehensive, multi-faceted strategy for market penetration, considering socio-economic factors, competitor analysis, and potential technological disruptions” might overwhelm the AI.

It’s better to break down such a complex request into smaller, more manageable prompts.
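Breaking the request down can be as simple as running a sequence of smaller prompts and feeding the accumulated answers into each next step. In this sketch `llm` is a placeholder for a real model call, and the sub-prompts are our own example split of the market-penetration request above.

```python
from typing import Callable

# One focused question per step instead of one overloaded prompt.
STEPS = [
    "List the key socio-economic factors affecting demand for {product} in {market}.",
    "Given this analysis so far:\n{context}\nSummarize the main competitors and their positioning.",
    "Given this analysis so far:\n{context}\nIdentify technologies that could disrupt the market.",
    "Given this analysis so far:\n{context}\nPropose a market penetration strategy in five steps.",
]


def run_chain(llm: Callable[[str], str], product: str, market: str) -> list[str]:
    """Run each step in order, passing all earlier answers forward as context."""
    answers: list[str] = []
    for template in STEPS:
        context = "\n".join(answers)
        prompt = template.format(product=product, market=market, context=context)
        answers.append(llm(prompt))
    return answers


# Stand-in model that echoes the final line of each prompt, for testing the flow.
def fake_llm(prompt: str) -> str:
    return f"answer to: {prompt.splitlines()[-1]}"


for answer in run_chain(fake_llm, "a budgeting app", "Brazil"):
    print(answer)
```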

Under-Specification:

At the other end of the spectrum lies the mistake of under-specification.

This occurs when the prompt is too vague or lacks essential details, making it difficult for the AI to generate a relevant or accurate response.

For example, a prompt like “Create a marketing plan” is too broad. Without specifying the type of product, target audience, or market, the AI lacks direction, which can result in a generic or irrelevant output.
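One guard against under-specification is a template that refuses to build the prompt until the essential details are filled in. The placeholders mirror the details named above (product, audience, market); the `fill_template` helper itself is hypothetical.

```python
import string

TEMPLATE = (
    "Create a marketing plan for {product}, aimed at {audience} in {market}. "
    "Include channels, budget split, and success metrics."
)


def fill_template(template: str, **details: str) -> str:
    """Fill the template, raising an error if any placeholder is missing or blank."""
    required = {field for _, field, _, _ in string.Formatter().parse(template) if field}
    missing = sorted(f for f in required if not details.get(f, "").strip())
    if missing:
        raise ValueError(f"Prompt is under-specified; missing: {', '.join(missing)}")
    return template.format(**details)


print(fill_template(TEMPLATE, product="a reusable water bottle",
                    audience="college students", market="the US"))
```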

Mismatched Expectations:

Sometimes, the error lies in expecting the AI to perform tasks beyond its capabilities or misunderstanding its scope of application.

For example, expecting an AI trained primarily on text data to generate complex, code-based solutions without clear instructions can lead to subpar results.

Ignoring Context:

Neglecting to provide sufficient context, such as the intended audience, the purpose of the task, or background information, can lead to responses that don’t fit the user’s actual needs.

A prompt for content creation that doesn’t clarify the target audience (e.g., experts vs. general public) may result in content that is either too technical or too simplified.

Inconsistency:

Inconsistent prompts can confuse AI systems, especially if there are conflicting instructions or changes in style and requirements from one prompt to another.

Consistency helps the AI understand and follow a user’s pattern of requests, leading to more accurate responses.

Failing to Iterate:

Users often give up after a single unsuccessful prompt, but AI prompt design is an iterative process.

It’s important to refine and adjust prompts based on the AI’s responses. Learning from what didn’t work in previous prompts improves the effectiveness of future interactions.
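The refine-and-retry habit can be sketched as a loop: check the output against what you need, then re-prompt with specific feedback. The `llm` callable and the word-count check are placeholders; in practice the “check” is often you reading the output.

```python
from typing import Callable


def iterate_prompt(llm: Callable[[str], str], prompt: str,
                   max_words: int, max_rounds: int = 3) -> str:
    """Re-prompt with targeted feedback until the answer meets a simple criterion."""
    answer = llm(prompt)
    for _ in range(max_rounds - 1):
        word_count = len(answer.split())
        if word_count <= max_words:
            break  # good enough: stop iterating
        # Keep the original request and say exactly what to change.
        prompt = (
            f"{prompt}\n\nYour previous answer had {word_count} words. "
            f"Rewrite it in at most {max_words} words, keeping the key points."
        )
        answer = llm(prompt)
    return answer


# Demo with a fake model whose second draft is shorter.
drafts = iter(["a long and rambling first draft of the summary", "a concise summary"])
print(iterate_prompt(lambda p: next(drafts), "Summarize our Q3 results.", max_words=5))
```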

Stay Informed on AI Capabilities and Limitations:

Understanding the current capabilities and limitations of the AI tool you’re using can greatly impact the effectiveness of your prompts. This knowledge can guide you in setting realistic expectations and crafting prompts that are well within the AI’s scope of abilities.

Types of AI Prompts

Do all prompts look and work the same? No. In fact, there are a few different types.

The types impact what is generated, whether it be text, image, or sound. And in some cases, the delivery of the initial prompt can change. There are text-based prompts and spoken prompts. But they all apply similar principles and best practices.

Let’s look at three common prompt types:

Text Prompts for Text

These are textual instructions or queries used primarily in language-based tasks such as content creation, conversation, or code generation. For instance, a text prompt might instruct an AI to write an article or develop a software solution.

⚙️ Commonly used with: ChatGPT, GitHub Copilot, Bard

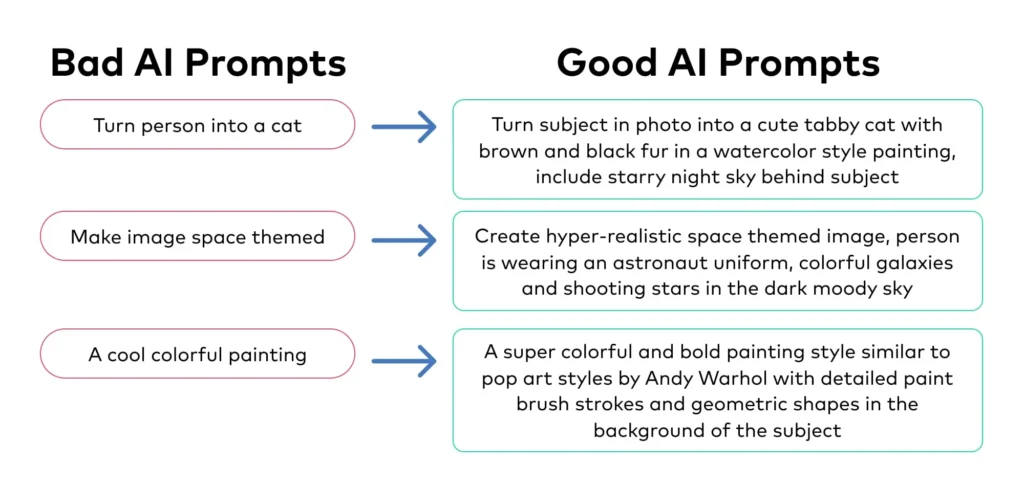

Text Prompts for Imagery

These involve the use of visual cues or textual descriptions to generate or manipulate images.

A notable example is DALL-E, where users provide descriptive text to create visually rich images.

For instance, a user might input, “Create an image of a futuristic cityscape at dusk, blending elements of nature and advanced technology.”

This showcases the AI’s capability to interpret and visualize complex, creative ideas and even create AI art.

⚙️ Commonly used with: Midjourney, Canva, DALL-E

Spoken Prompts

Used in scenarios where speech is more convenient or preferred, these prompts are delivered orally and processed by AI systems equipped with speech recognition.

For example, a developer might say, “Create a Java class for a basic banking application with methods for deposit, withdrawal, and balance check.”

The AI system transcribes this spoken input, understands the task, and generates the appropriate code. Spoken prompts are particularly useful for hands-free operations or for those who find verbal communication more accessible.

⚙️ Commonly used with: Google Duplex, ChatGPT, and Amazon Lex

AI Prompts in Practice: Real-World Examples for Software Development

Generative AI is applicable across many sectors, helping people become more efficient in their roles whether they’re working in a digital marketing team or healthcare.

But at HatchWorks, we’re especially homed in on how Generative AI can aid software developers.

So here are three prompt examples we use:

Example 1

Prompt: “Generate a code snippet that optimizes a function by reducing its complexity or using a more efficient algorithm.”

Use and Benefit: This prompt directs the AI to enhance the performance of existing code, making it more efficient and potentially less resource-intensive.

It’s particularly useful in situations where legacy code needs updating or when developers seek to improve the runtime of critical software components.

By reducing complexity, the code becomes easier to maintain and understand, and less prone to errors, leading to overall improved software quality.
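As an illustration of the kind of change this prompt asks for, here is a hypothetical before-and-after: a duplicate check rewritten from a nested loop (O(n²)) to a single pass with a set (O(n)). The optimized version assumes the items are hashable.

```python
def has_duplicates_slow(items: list) -> bool:
    """Original version: compares every pair, O(n^2) time."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items: list) -> bool:
    """Optimized version: one pass with a set, O(n) time (items must be hashable)."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


sample = [3, 8, 1, 9, 8]
print(has_duplicates_slow(sample), has_duplicates_fast(sample))  # True True
```

Checking that both versions agree on the same inputs is a quick way to confirm an AI-suggested optimization didn’t change behavior.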

Example 2

Prompt: “Create a RESTful API endpoint for a user registration system, including error handling.”

Use and Benefit: This prompt helps in quickly scaffolding out the backend functionality for new features or services, in this case, user registration.

It steers the AI toward best practices for API development, such as proper error handling (and, if you ask for them, security measures), which are crucial for robust and secure applications.

Automating this process can save developers considerable time, allowing them to focus on other complex tasks while ensuring that the foundational code meets industry standards.
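To give a sense of what a reasonable response to that prompt contains, here is a framework-agnostic sketch of the registration logic: input validation, a 400 for bad input, a 409 for duplicate accounts, and salted password hashing. The in-memory `users` dict stands in for a database, and the endpoint path, field names, and password rule are assumptions; review any AI-generated version against your own security requirements.

```python
import hashlib
import re
import secrets

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def hash_password(password: str) -> str:
    """Salted PBKDF2 hash; store this instead of the raw password."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 600_000)
    return salt.hex() + ":" + digest.hex()


def register_user(payload: dict, users: dict[str, str]) -> tuple[int, dict]:
    """Handle POST /users: return (HTTP status code, JSON-serializable body).

    Framework-agnostic: a Flask or FastAPI route could call this and send the result.
    """
    email = str(payload.get("email", "")).strip().lower()
    password = str(payload.get("password", ""))
    errors = {}
    if not EMAIL_PATTERN.match(email):
        errors["email"] = "A valid email address is required."
    if len(password) < 12:
        errors["password"] = "Password must be at least 12 characters."
    if errors:
        return 400, {"errors": errors}  # Bad Request: validation failed
    if email in users:
        return 409, {"error": "Email already registered."}  # Conflict
    users[email] = hash_password(password)
    return 201, {"email": email}  # Created; never echo the password back
```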

Example 3

Prompt: “Analyze this legacy codebase to identify outdated libraries and suggest modern, more efficient replacements.”

Use and Benefit: This prompt empowers AI to assist developers in the modernization of legacy systems, a task that can be daunting due to the complexity and potential lack of documentation.

By directing the AI to identify outdated libraries, the developer gains insights into specific areas of the codebase that require updates. The suggestion of modern replacements helps in making informed decisions about which technologies or libraries can enhance performance, security, and compatibility with current standards.

This process not only streamlines the modernization efforts but also ensures that the updated application is more maintainable, secure, and efficient.

It’s particularly useful for developers tasked with upgrading systems without comprehensive knowledge of the original development context, enabling a more focused and effective approach to modernizing software.
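A practical way to use this prompt is to give the AI the dependency manifest alongside it. This sketch parses pinned entries from a requirements.txt-style file and builds the prompt; the package list is made up, the helper names are hypothetical, and the AI’s suggestions still need checking against each library’s release notes.

```python
def parse_requirements(text: str) -> list[tuple[str, str]]:
    """Extract (package, pinned version) pairs from 'name==version' lines."""
    pins = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, version = (part.strip() for part in line.split("==", 1))
            pins.append((name, version))
    return pins


def modernization_prompt(requirements_text: str) -> str:
    """Build the 'identify outdated libraries' prompt around the parsed pins."""
    listing = "\n".join(f"- {name} {version}"
                        for name, version in parse_requirements(requirements_text))
    return (
        "Analyze these dependencies from a legacy codebase. Identify outdated or "
        "unmaintained libraries and suggest modern, more efficient replacements, "
        "noting any breaking changes to watch for.\n\n" + listing
    )


legacy = """
Django==1.11.29  # web framework
requests==2.18.4
simplejson==3.8.2
"""
print(modernization_prompt(legacy))
```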

ChatGPT is my go-to tool for deciphering complex and overly architected legacy code, helping me bring clarity and structure to challenging projects.

Take it From the Experts: AI Prompt Leaders to Follow

Gen AI continues to change. Stay in the know by following along with people who make it their business to experiment and learn:

Drew Brucker – Drew talks about his use of Midjourney and provides tips to others in his LinkedIn posts.

Heather Murray – Heather specializes in teaching AI to the non-techies among us so that it’s all a bit more accessible. She’s even been featured on our Built Right podcast talking about the basics of Gen AI.

Isar Meitis – Isar is an AI implementation expert sharing his thoughts and tips on all things AI as it develops.

Audrey Chia – Audrey shares how to integrate AI into copywriting processes.

Tim Hanson – Tim and his co-founders built their very own product on the prompts he uses with ChatGPT. He regularly shares tips and tricks which you can poach and use for yourself.

Matt Paige – Our very own Matt Paige (Vice President of Marketing at HatchWorks) shares useful tips and updates on Generative AI through LinkedIn Posts and Webinars.

Ethan Mollick – Ethan Mollick is an Associate Professor at The Wharton School teaching about innovation, technology, and entrepreneurship. So naturally he’s all in on experimenting with Generative AI. He shares these experimentations on his LinkedIn page.

Learn How to Leverage AI Tools with HatchWorks AI’s Training Programs

At HatchWorks, we’ve taken our knowledge of the Generative AI landscape and turned it into two programs designed to elevate your organization’s AI readiness.

There’s the Gen AI Innovation Workshop

Transform your team’s understanding and application of AI with HatchWorks AI’s Gen AI Innovation Workshop. It’s a full-day training experience that equips you with the necessary skills to apply AI in real-world scenarios, ensuring your organization becomes AI-fluent through unique ideation, building a proof of concept, and crafting a tailored action plan.

And the Gen AI Solution Accelerator

Accelerate your AI project timeline with HatchWorks AI’s Gen AI Solution Accelerator. This two- to eight-week program provides a structured pathway from concept to prototype, offering guidance on technology selection, data preparation, project management, and in-depth documentation, culminating in a clear path to full-scale production.

Get in touch with us to get in on the training and accelerate your organization’s adoption of AI.

Want to learn about how we integrate AI into our own software development service offerings so that we can work more efficiently with better results for our clients?

Check out our new process: Generative-Driven Development™.
