The more artificial intelligence (AI) tools businesses add to their tech stack, the more disjointed and siloed their work with AI becomes, creating an operational nightmare.

The true value of AI lies in how well your AI tools work together and integrate into your existing systems and processes.

But how can you make AI systems ‘talk’ and ‘work together’? The answer lies in AI orchestration.

The good news is, most businesses aren’t doing this. So if you pull AI orchestration off, you’ll place yourself ahead of the competition and realize the full potential of AI in your workplace.

In this article, we talk you through the basics of AI orchestration and help you get started with integrating diverse AI capabilities into one powerhouse operation.

What is AI Orchestration?

📖Definition: AI orchestration is the process of coordinating different AI tools and systems so they work together effectively.

It’s like being the conductor of an orchestra, where each musician (or AI tool) needs to play in harmony with others to create beautiful music (or successful outcomes).

AI orchestration, if done well, increases efficiency and effectiveness because it streamlines processes and ensures the AI tools you’re using communicate, share data, and function as one system.

What’s the difference between AI orchestration tools and a traditional AI application?

Mainly, that an AI application is a standalone system designed to perform specific tasks while AI orchestration is the act of making those standalone systems work together for broader, more complex tasks.

| Key characteristics of singular AI applications | Key characteristics of multiple AI applications working together through AI orchestration |
|---|---|
| Focused Functionality: Designed for specific tasks like speech recognition, chatbot interactions, or customer behavior predictions. | Integration: Links various AI models and systems, enabling them to communicate and share data seamlessly. |
| Isolated Operation: Works independently without the need to interact or integrate with other AI systems. | System-Wide Efficiency: Optimizes the entire ecosystem of AI applications, ensuring that resources are allocated efficiently and all systems are aligned with the overall business goals. |
| Limited Scalability: Scaling often involves enhancing the existing system but not necessarily integrating with others. | Adaptability and Scalability: Facilitates easier scaling of AI capabilities across an organization by adding new components and ensuring they work well with existing ones. |

Through AI orchestration these AI applications can access more data sources, capabilities, and specializations to operate in a way a single model never could.

👂Listen to Alexander De Ridder, CTO & Co-Founder of SmythOS, talk about that exact concept on one of our recent Built Right podcast episodes, at time code 08:47.

What’s the relationship between AI agents and AI system orchestration?

AI agents and AI orchestration are closely related but distinct concepts:

| AI Agents | AI Orchestration |
|---|---|
| These are individual AI systems or software entities that are designed to perform specific tasks autonomously. AI agents can range from simple chatbots to complex decision-making systems embedded within larger applications. They operate based on algorithms that allow them to learn from data inputs and make decisions or perform actions accordingly. | AI orchestration involves integrating these agents so they can work together harmoniously, enhancing their individual capabilities and ensuring they contribute effectively to broader system objectives. This orchestration includes managing data flows between agents, synchronizing their activities, and optimizing resource use across the system. |

So going back to our comparison to an orchestra, if AI orchestration is the act of conducting, AI agents and tools are the performers.

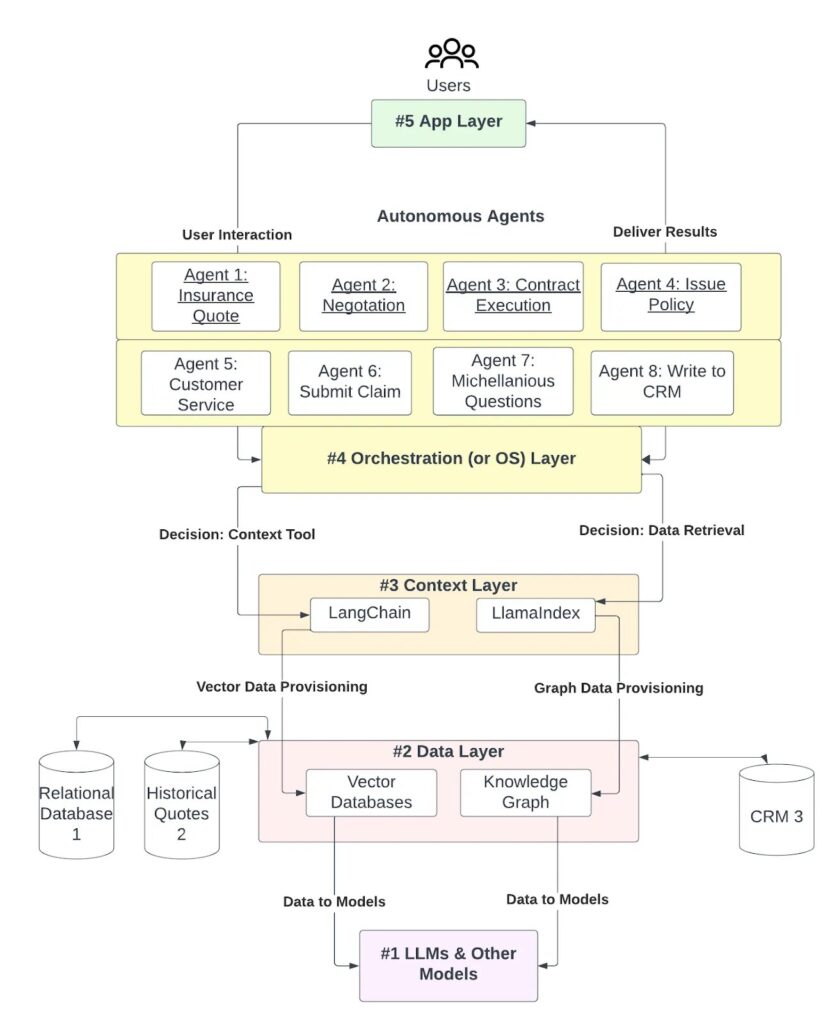

Here’s an example of AI orchestration in practice:

Imagine a large online retailer like Amazon. This retailer uses AI technology for a variety of tasks, including recommending products to customers, managing inventory, and optimizing delivery routes.

| AI Recommendation System | AI Inventory Management | AI Delivery Optimization |
|---|---|---|
| AI analyzes your past purchases and browsing behavior to suggest products you might like. | Another AI predicts which products will be in high demand, ensuring that they are sufficiently stocked. | Yet another AI calculates the fastest and most cost-effective delivery routes. |

Without AI orchestration, these systems might not share information effectively, leading to less accurate recommendations, potential stockouts, or inefficient delivery routes.

But with AI orchestration, these systems work together:

- The inventory system tells the recommendation system which items are available, enhancing the accuracy of suggestions.

- The delivery optimization AI uses data from the inventory system to plan routes based on where items are currently available, reducing shipping times and costs.
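
To make that flow concrete, here is a minimal Python sketch of the two hand-offs described above. Everything in it is an assumption for illustration: the `StockItem` record, the function names, the SKUs, and the warehouse distances are hypothetical, and simple functions stand in for the real AI systems.

```python
from dataclasses import dataclass


@dataclass
class StockItem:
    sku: str
    warehouse: str
    quantity: int


def available_skus(inventory):
    """Inventory system output: SKUs with stock in at least one warehouse."""
    return {item.sku for item in inventory if item.quantity > 0}


def recommend(candidates, in_stock):
    """Recommendation step: keep the ranked candidates that can actually ship."""
    return [sku for sku in candidates if sku in in_stock]


def pick_warehouse(sku, inventory, distance_km):
    """Delivery step: choose the nearest warehouse that holds the item."""
    options = [i for i in inventory if i.sku == sku and i.quantity > 0]
    if not options:
        return None
    return min(options, key=lambda i: distance_km[i.warehouse]).warehouse


def orchestrate(candidates, inventory, distance_km):
    """Pass inventory data to both downstream systems in a single flow."""
    in_stock = available_skus(inventory)
    suggestions = recommend(candidates, in_stock)
    return {sku: pick_warehouse(sku, inventory, distance_km) for sku in suggestions}


# Hypothetical data: the stove is out of stock, the tent sits in two warehouses.
inventory = [
    StockItem("tent", "north", 4),
    StockItem("tent", "south", 2),
    StockItem("stove", "north", 0),
    StockItem("lamp", "south", 9),
]
plan = orchestrate(["stove", "tent", "lamp"], inventory, {"north": 120, "south": 35})
print(plan)
```

In this sketch the out-of-stock stove never reaches the customer as a suggestion, and each remaining item is routed from the closest warehouse that has it.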

The Core Components of AI Orchestration

AI orchestration involves several core components working in harmony to maximize the efficiency and effectiveness of AI systems within your organization.

Here, I’ll break down these components into three primary categories:

- Automation

- Integration

- Management

I’ll also discuss the technological backbone that supports them: cloud computing and APIs.

Automation

Automation is the use of technology to complete tasks that could be done by humans. It’s the basis of most AI applications. Think of GitHub Copilot. It automates coding tasks, freeing developers up to do other work and accelerating the process.

AI orchestration automates the interactions between different AI tools and systems.

Key Elements:

- Automated Deployment: Tools and systems that automatically deploy new AI models or updates to existing models across various environments.

- Self-Healing Systems: Systems designed to automatically detect and correct errors or inefficiencies without human intervention.

- Resource Allocation: AI orchestration platforms can dynamically allocate computational resources based on the needs and priorities of different AI tasks.
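
As a rough illustration of the self-healing idea, the sketch below retries a failing model call and then switches to a backup. It is a simplified assumption of how such recovery might look, not how any particular platform implements it; `call_with_recovery`, `flaky_model`, and `backup_model` are hypothetical names.

```python
import time


def call_with_recovery(primary, fallback, payload, retries=2, delay_s=0.0):
    """Retry the primary model, then switch to the fallback if it keeps failing."""
    for attempt in range(retries + 1):
        try:
            return primary(payload)
        except Exception:
            # Wait before the next attempt, but not after the final one.
            if attempt < retries:
                time.sleep(delay_s)
    return fallback(payload)


calls = {"primary": 0}


def flaky_model(text):
    # Hypothetical model endpoint that is currently down.
    calls["primary"] += 1
    raise TimeoutError("model endpoint unavailable")


def backup_model(text):
    # Hypothetical secondary model that is still healthy.
    return f"backup:{text}"


result = call_with_recovery(flaky_model, backup_model, "hello")
print(result, calls["primary"])
```

A production setup would likely add logging, alerting, and backoff between attempts, but the principle is the same: the system detects the failure and recovers without someone stepping in.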

Integration

Integration is crucial for enabling different AI applications and data sources to work together. To do it successfully, all parts of the AI ecosystem need to communicate and operate as a unified whole.

Key Elements:

- Data Integration: Ensuring that data flows seamlessly between systems and that all AI applications have access to the data they need to function effectively.

- Model Integration: Tools and frameworks that allow different AI models to interact with each other, potentially enhancing each other’s capabilities or outputs.
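
One simple way to picture model integration is a pipeline where every model reads from and writes to the same shared record. The sketch below assumes that pattern; the three functions are hypothetical stand-ins for a speech-to-text model, a sentiment model, and a routing model.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


def run_pipeline(steps: List[Step], record: Dict) -> Dict:
    """Run each model in order; every step reads and extends the same record."""
    for step in steps:
        record = {**record, **step(record)}
    return record


def transcribe(record):
    # Stand-in for a speech-to-text model.
    return {"text": record["audio"].replace("_", " ")}


def classify_sentiment(record):
    # Stand-in for a sentiment model that consumes the transcript.
    negative = {"late", "broken", "refund"}
    words = set(record["text"].split())
    return {"sentiment": "negative" if words & negative else "neutral"}


def route(record):
    # Stand-in for a routing model that consumes the sentiment label.
    return {"queue": "priority" if record["sentiment"] == "negative" else "standard"}


result = run_pipeline(
    [transcribe, classify_sentiment, route],
    {"audio": "my_order_is_late"},
)
print(result)
```

Because each step only depends on the keys it needs, a model can be swapped out without changing the others, as long as it keeps producing the same keys.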

Management

Effective management of AI systems is vital for maintaining the health and effectiveness of AI applications throughout their lifecycle.

Key Elements:

- Lifecycle Management: From development and testing to deployment, monitoring, and updates, managing the lifecycle of each AI application is crucial.

- Performance Monitoring: Continuously tracking the performance of AI systems to ensure they are operating optimally and making adjustments as necessary.

- Compliance and Security: Ensuring all AI operations comply with relevant laws and regulations, and maintaining robust security protocols to protect data and systems from unauthorized access.
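
Performance monitoring can start small. The following sketch wraps a model function so every call records its count, errors, and latency. The `monitored` decorator, the in-memory `metrics` store, and the `forecast` function are all assumptions for illustration; a real deployment would typically send these numbers to a dedicated monitoring service.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory metrics store, keyed by model name.
metrics = defaultdict(lambda: {"calls": 0, "errors": 0, "total_s": 0.0})


def monitored(name):
    """Record call count, error count, and cumulative latency for a model function."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                metrics[name]["errors"] += 1
                raise
            finally:
                metrics[name]["calls"] += 1
                metrics[name]["total_s"] += time.perf_counter() - start
        return wrapper
    return decorator


@monitored("demand_forecast")
def forecast(units_sold):
    # Hypothetical model: average of past sales.
    if not units_sold:
        raise ValueError("no sales history")
    return sum(units_sold) / len(units_sold)


forecast([10, 12, 14])
try:
    forecast([])
except ValueError:
    pass

stats = metrics["demand_forecast"]
error_rate = stats["errors"] / stats["calls"]
print(stats["calls"], stats["errors"], error_rate)
```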

What makes it all possible? APIs and Cloud Computing

AI orchestration isn’t possible without two crucial technologies:

- APIs (Application Programming Interfaces)

- Cloud computing

If you’re not familiar with them, here’s a brief breakdown of how they support AI orchestration:

APIs

APIs are crucial for integration, allowing different software components and services to communicate.

In the context of AI orchestration, APIs enable seamless data exchange and functionality between disparate AI models and applications, regardless of the programming languages or architectures used to develop them.
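
The reason APIs work across languages and architectures is that both sides agree on a message format rather than on shared code. Here is a small sketch of that idea using JSON. The `Prediction` fields and the model name are hypothetical, and the HTTP transport is left out so the example stays self-contained.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Prediction:
    model: str
    label: str
    confidence: float


def to_wire(prediction: Prediction) -> str:
    """Serialize a prediction to JSON, the kind of payload an HTTP API would return."""
    return json.dumps(asdict(prediction))


def from_wire(body: str) -> Prediction:
    """Parse the JSON payload on the consumer side, whatever produced it."""
    return Prediction(**json.loads(body))


# The producer and consumer only need to agree on the JSON fields.
body = to_wire(Prediction(model="sentiment-v1", label="positive", confidence=0.93))
received = from_wire(body)
print(body)
```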

Cloud Computing

Cloud platforms provide the scalable infrastructure needed to deploy and manage AI applications.

They offer flexibility, scalability, and the computational power necessary to handle large volumes of data and complex AI algorithms.

Cloud services also facilitate easier integration and management of AI systems across different locations and platforms.

The Benefits of AI Orchestration

When the topic of AI orchestration comes up here at HatchWorks, we think less about what it is and more about what it can help us achieve.

And two core benefits always come to mind. Those are:

- Enhancing our operational efficiency

- Improved performance

Enhancing Operational Efficiency

AI orchestration enhances operational efficiency by streamlining operations and reducing the need for manual, repetitive tasks.

Without AI orchestration, for example, if you wanted to pull company data from Salesforce into ChatGPT for a specific prompt, you would have to do that manually. With AI orchestration, however, you could automate that sharing of data.
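
A minimal sketch of that automation might look like the following. It deliberately avoids any real Salesforce or ChatGPT calls: `fake_fetch_records` and `fake_ask_model` are hypothetical stand-ins, and the record fields are assumed for illustration. In practice you would replace them with calls to your CRM and model provider.

```python
def build_prompt(question, records):
    """Turn CRM rows into context lines and append the user's question."""
    context = "\n".join(
        f"- {r['account']}: {r['stage']}, ${r['amount']:,}" for r in records
    )
    return f"Company data:\n{context}\n\nQuestion: {question}"


def answer_with_crm_data(question, fetch_records, ask_model):
    """Fetch data, build the prompt, and call the model without manual copy-paste."""
    records = fetch_records()
    return ask_model(build_prompt(question, records))


# Hypothetical stand-ins; a real setup would call the CRM and model APIs here.
def fake_fetch_records():
    return [
        {"account": "Acme", "stage": "negotiation", "amount": 48000},
        {"account": "Globex", "stage": "closed won", "amount": 125000},
    ]


def fake_ask_model(prompt):
    return f"received {len(prompt.splitlines())} prompt lines"


reply = answer_with_crm_data(
    "Which deals need follow-up?", fake_fetch_records, fake_ask_model
)
print(reply)
```

Passing the fetch and model functions in as arguments keeps the orchestration logic independent of any one vendor, which makes it easier to change either side later.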

Here are a few other examples of streamlined operations:

- Continuous Integration/Continuous Deployment (CI/CD): AI orchestration can automate the software development lifecycle, from code integration and testing to deployment. For instance, it can automatically deploy new code changes after they pass set tests, reducing the need for manual oversight.

- Resource Optimization: It dynamically allocates computational resources where they are most needed, ensuring that no single process consumes excessive resources that could slow down other operations.

By automating routine processes, businesses can reduce the labor hours required for tasks such as deploying updates, monitoring system performance, and managing data flow between services.

Cost savings are also significant as AI orchestration minimizes downtime and optimizes resource use, ensuring that expensive computing power is not wasted. This leads to a more efficient use of the IT budget, directing funds towards innovation rather than maintenance.

Improved Performance of AI Systems and Business

AI Orchestration enables organizations to tackle complex problems more effectively because it combines the specialized capabilities of different models, such as natural language processing, computer vision, and machine learning.

Consider a fraud detection system in the financial industry. AI Orchestration can integrate various AI models, each trained to identify specific patterns or anomalies.

By orchestrating these models to work together, the system can analyze transactions from multiple perspectives, such as historical patterns, user behavior, and geolocation data. This holistic approach enhances the accuracy and reliability of fraud detection, minimizing false positives and negatives.
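
Here is a simplified sketch of that idea, where three scoring functions look at the same transaction from different angles and an orchestration step combines them. The rules, weights, and the 0.6 threshold are assumptions chosen for illustration; real fraud models would be trained rather than hand-written.

```python
def history_score(tx):
    """Flag amounts far above the customer's usual spend."""
    return 1.0 if tx["amount"] > 5 * tx["avg_amount"] else 0.0


def behavior_score(tx):
    """Flag transactions made from a device the customer has not used before."""
    return 0.0 if tx["device_known"] else 1.0


def geo_score(tx):
    """Flag transactions made outside the customer's home country."""
    return 0.0 if tx["country"] == tx["home_country"] else 1.0


# Hypothetical weights; they sum to 1 so the combined risk stays between 0 and 1.
WEIGHTS = {history_score: 0.5, behavior_score: 0.2, geo_score: 0.3}


def fraud_risk(tx):
    """Weighted sum of the individual model scores."""
    return sum(weight * model(tx) for model, weight in WEIGHTS.items())


tx = {
    "amount": 900,
    "avg_amount": 100,
    "device_known": True,
    "country": "BR",
    "home_country": "US",
}
risk = fraud_risk(tx)
flagged = risk >= 0.6  # Hypothetical review threshold.
print(round(risk, 2), flagged)
```

In this example the known device alone would not raise concern, but the unusual amount and location together push the transaction over the threshold, which is the kind of combined view a single model would miss.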

AI Orchestration also enables continuous learning and improvement of AI models. By collecting feedback and data from the orchestrated workflow, organizations can fine-tune individual models and optimize their performance over time.

This iterative process ensures that the AI system remains up-to-date and adapts to evolving business needs and customer preferences.

Key Steps to Implement AI Orchestration

To reap the rewards of AI orchestration you have to implement it correctly. Here are the steps we would take for a seamless transition:

- Assessment and Planning: Begin by assessing your current AI capabilities and identifying the processes that could benefit from orchestration. Define clear objectives and the scope of integration.

- Choose the Right Tools and Platforms: Selecting appropriate orchestration tools and platforms is crucial. Look for solutions that can integrate seamlessly with your existing AI systems and offer the scalability and security features you need.

- Design the Architecture: Design an architecture that allows for efficient data flow and communication between different AI models. Ensure that it supports both current needs and future expansions.

- Integration and Testing: Start integrating your AI systems with the orchestration platform. It’s important to conduct thorough testing during this phase to address any technical issues that arise.

- Deployment and Monitoring: Deploy the orchestrated systems and continuously monitor their performance. Be prepared to make adjustments as needed to optimize operations.

Challenges and Solutions

Unfortunately, AI orchestration isn’t without its challenges. Here are the ones we’ve come up against in our journey to orchestrate AI:

Challenge 1: Integration Complexity

Integrating disparate AI systems can be challenging due to different formats and standards.

Our Solution: Use middleware or integration platforms that provide connectors and APIs to facilitate seamless data integration.

Challenge 2: Security Risks

Integrating multiple AI systems increases the risk of data breaches and security vulnerabilities.

Our Solution: Implement robust security protocols, regular security audits, and ensure that all integrated systems comply with security standards.

Challenge 3: Scalability Issues

As the number of AI systems increases, ensuring the orchestration solution can scale effectively is crucial.

Our Solution: Opt for cloud-based orchestration tools that offer elasticity and can dynamically adjust resources based on demand.

Challenge 4: Interoperability Concerns

Different AI systems may use different data models and architectures, making interoperability a challenge.

Our Solution: Adopt standard data formats and open architectures that facilitate interoperability among diverse systems.
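
In practice, a standard format often means writing a small adapter per system that converts its output into one shared schema. The sketch below assumes two hypothetical models with different field names and score scales; the schema keys (`source`, `label`, `confidence`) are our own choice for illustration.

```python
def from_vision_model(raw):
    """Adapter for a hypothetical vision model that reports a percentage score."""
    return {
        "source": "vision",
        "label": raw["class_name"].lower(),
        "confidence": raw["score_pct"] / 100,
    }


def from_text_model(raw):
    """Adapter for a hypothetical text model that reports a 0-1 probability."""
    return {"source": "text", "label": raw["tag"].lower(), "confidence": raw["prob"]}


ADAPTERS = {"vision": from_vision_model, "text": from_text_model}


def normalize(source, raw):
    """Convert any registered model's output into the shared schema."""
    return ADAPTERS[source](raw)


results = [
    normalize("vision", {"class_name": "Damaged", "score_pct": 87}),
    normalize("text", {"tag": "DAMAGED", "prob": 0.91}),
]
# Once outputs share a schema, comparing them is a one-liner.
agreed = len({r["label"] for r in results}) == 1
print(results, agreed)
```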

Best Practices for Businesses

1. Start Small and Scale Gradually:

Begin with a pilot project that integrates a few AI systems, perhaps focusing on a specific machine learning task. This allows you to manage the complexities of your AI infrastructure at a smaller scale and understand the nuances of AI orchestration layers before expanding to more complex workflows.

2. Focus on Data Quality and Accessibility:

Ensure that raw data is clean, well-organized, and accessible across all AI systems. Involve data scientists in the data process to validate data quality and optimize data workflows. High-quality data is crucial for effective AI decision-making and interoperability.

3. Choose the Right Tools for Your Needs:

Select orchestration tools that align with your business goals and technical requirements, perhaps leaning heavily towards low-code platforms for ease of use. Consider factors like compatibility with your existing infrastructure, scalability, and support for security protocols within a modular architecture.

4. Adopt a Modular Approach:

By designing AI workflows as a series of modular building blocks, organizations can easily manage complex workflows and orchestration layers, allowing them to modify, upgrade, or replace individual components without disrupting the entire system.

One way to implement a modular approach is by adopting a microservices architecture or modular architecture. In this architectural style, the AI system—including machine learning components—is decomposed into small, loosely coupled services that can be developed, deployed, and scaled independently, delivering more value to the business.

5. Invest in Training and Development – You Need a Senior Platform Software Engineer:

Equip your development team, including data scientists and engineers, with the necessary skills and knowledge to manage and optimize AI orchestration and infrastructure. Ongoing training and professional development are crucial as AI technologies and best practices evolve, and adopting low-code solutions can accelerate this process.

6. Monitor Performance and Gather Feedback:

Regularly monitor the performance of your orchestrated AI systems to ensure they deliver business value. Use analytics to track efficiency and effectiveness of your data processes, and gather feedback from users to identify areas for improvement, thereby delivering more value.

7. Implement Robust Security Measures:

Security is paramount in AI orchestration and the underlying infrastructure. Implement comprehensive security measures throughout your data processes, including data encryption, secure APIs, and regular security audits to protect against potential vulnerabilities, especially when collecting data.

Future Trends in AI Orchestration

Most of the world is still playing catch-up with the newest capabilities and technological evolution of AI, but by keeping an eye on the future, you’re able to adapt faster and differentiate yourself sooner from your competition.

With that in mind, I asked some of our AI experts at HatchWorks what they see developing in AI orchestration and where it’s going next.

Here’s a list of their insights:

- There’s going to be greater emphasis on creating autonomous systems that can manage and heal themselves without human intervention. This shift will enhance system resilience and reduce downtime, further boosting operational efficiency.

- An increase in the adoption of hybrid cloud and multi-cloud strategies. AI orchestration will evolve to seamlessly manage AI operations across these diverse platforms, enabling more robust data management and processing capabilities.

- Blockchain technology could be integrated into AI orchestration to enhance security and transparency, particularly in sectors like finance and healthcare. Blockchain could help manage the data flows between AI systems in a secure, traceable, and tamper-proof manner.

Another thing we’ve seen developing among companies using AI is what’s being called ‘model gardens’, a practice that reduces reliance on any single AI system.

When it comes to AI orchestration, this could prove useful as a way to switch and change which model you use at any given time.

So if a newer, better option becomes available or if you have multiple use cases for AI, the switch is easy.
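
To show why the switch can be easy, here is a minimal sketch of a model registry in the spirit of a model garden. The `ModelGarden` class and the two summarizer functions are hypothetical and exist only for illustration; they are not any vendor's API.

```python
class ModelGarden:
    """Registry that maps a task to whichever model is currently preferred."""

    def __init__(self):
        self._models = {}
        self._active = {}

    def register(self, task, name, fn, make_active=False):
        self._models[(task, name)] = fn
        # The first model registered for a task becomes active by default.
        if make_active or task not in self._active:
            self._active[task] = name

    def switch(self, task, name):
        if (task, name) not in self._models:
            raise KeyError(f"no model {name!r} registered for {task!r}")
        self._active[task] = name

    def run(self, task, payload):
        return self._models[(task, self._active[task])](payload)


garden = ModelGarden()
# Hypothetical stand-ins for two summarization models.
garden.register("summarize", "model_a", lambda text: text[:10])
garden.register("summarize", "model_b", lambda text: text.split(".")[0])

before = garden.run("summarize", "Orders rose. Returns fell.")
garden.switch("summarize", "model_b")
after = garden.run("summarize", "Orders rose. Returns fell.")
print(before, "|", after)
```

The calling code only ever asks for a task, so moving to a newer model is a one-line change in the registry rather than a rewrite of every workflow that uses it.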

Resources and Tools for AI Orchestration

AI orchestration requires a robust toolkit to help manage and optimize the deployment and operation of AI systems.

Below is a list of some of the leading tools for effective AI orchestration.

SmythOS:

SmythOS is a platform that allows users to design and deploy AI agents with ease using a no-code, drag-and-drop interface.

It supports a wide range of integrations and is designed to streamline complex processes by enabling seamless integration of AI, APIs, and data sources.

SmythOS offers tools for various applications including ChatGPT, Claude, Copilot, Meta, and more, making it highly versatile for creating tailored AI solutions.

Want to hear the CTO of SmythOS talk about orchestration? Check out this episode of our Built Right podcast: Orchestrating the Web: The Untapped Potential of AI

Kubernetes:

Kubernetes is an open-source platform designed for automating deployment, scaling, and operations of application containers across clusters of hosts.

It provides the foundation for orchestration in cloud environments, particularly useful for managing microservices and distributed applications.

Kubernetes can manage AI workloads, automate rolling updates for AI applications, and efficiently handle scaling requirements.

Apache Airflow:

Apache Airflow is an open-source tool for orchestrating complex computational workflows and data processing pipelines.

It’s ideal for setting up and managing the data pipelines that feed AI models, ensuring that models are trained with the most recent and relevant data.

Flyte:

Flyte is a modern workflow orchestration platform designed for building, managing, and scaling complex data, machine learning (ML), and analytics workflows.

It emphasizes scalability, handling large datasets across distributed environments efficiently.
