Should we go broad or go deep?

That’s the real choice behind general-purpose vs vertical AI agents. One gives you range and adaptability while the other gives you precision and domain control.

And increasingly, both are expected to work alongside other AI agents as part of larger, orchestrated systems.

This guide breaks down the trade-offs, shows where each type wins, and offers a practical playbook for building agent systems that deliver real outcomes.

TL;DR: When to Use Which

Use general-purpose AI agents when you need flexibility, speed, or exploration. They’re best when you need to perform simple tasks, navigate ambiguity, or generate data-driven insights from past interactions, especially when plugged into existing software or a wide range of AI tools through natural language processing.

Choose vertical agents when the goal is precision, compliance, or scaling task automation across business processes. They’re optimized to perform specific tasks repeatedly, drawing on curated data and connecting deeply with external tools.

But you can also use both.

The teams that are really mastering AI in their business operations now run multiple AI agents, each designed to perform tasks within scope, while working alongside other agents or handing off to human agents when needed. This hybrid model is what powers today’s most effective virtual agents in production.

What AI Agents Are (and Aren’t)

| ✅ What they are | ❌ What they aren’t |
|---|---|
| Goal-seeking systems with autonomy | Simple assistants that only respond to prompts |
| Capable of reasoning, memory, and tool use | Static chatbots with no memory or context |
| Designed to complete multi-step workflows | Hardcoded scripts with no flexibility |
| Able to make decisions and act across systems | One-size-fits-all solutions for every task |

AI agents are systems that combine reasoning, memory, tool use, and autonomy to accomplish goals. Unlike AI assistants, which typically respond to single prompts or queries, AI agents operate over time, across multiple steps, and make decisions based on changing inputs.

At a technical level, an AI agent includes:

- Reasoning capabilities to break down and sequence tasks.

- Short- and long-term memory to retain state across interactions.

- Tool access to APIs, databases, and external systems for real-world execution.

- Autonomy to make decisions and act within guardrails, without needing constant input.

This makes AI agents well-suited for workflows that involve multiple steps, decision points, or tool integrations.

For example, an agent might triage support tickets, fetch customer history, draft a response, and update a CRM.
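To make that support example concrete, here is a minimal sketch of the four steps as a single agent run. Everything in it is an assumption for illustration: the `Ticket` and `AgentState` types, the tool functions, and the in-memory "CRM" are hypothetical, and a keyword rule stands in for the model call a real agent would make.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    ticket_id: str
    customer_id: str
    text: str


@dataclass
class AgentState:
    """Short-term memory: what the agent has learned and done in this run."""
    notes: dict = field(default_factory=dict)
    actions: list = field(default_factory=list)


# Hypothetical tools. A real deployment would call CRM and ticketing APIs here.
def fetch_customer_history(customer_id: str) -> list:
    fake_crm = {"c-1": ["2 prior refunds", "premium plan"]}
    return fake_crm.get(customer_id, [])


def triage(text: str) -> str:
    # Stand-in for a model call: a keyword rule keeps the sketch runnable.
    return "billing" if "refund" in text.lower() else "general"


def draft_response(category: str, history: list) -> str:
    context = "; ".join(history) if history else "no history on file"
    return f"[{category}] Thanks for reaching out. Context used: {context}."


def update_crm(ticket_id: str, category: str, state: AgentState) -> None:
    # Record the action instead of performing it, so the run stays auditable.
    state.actions.append(f"crm.update({ticket_id}, category={category})")


def run_support_agent(ticket: Ticket) -> AgentState:
    """Triage, fetch history, draft, and update the CRM, keeping state between steps."""
    state = AgentState()
    state.notes["category"] = triage(ticket.text)
    state.notes["history"] = fetch_customer_history(ticket.customer_id)
    state.notes["draft"] = draft_response(
        state.notes["category"], state.notes["history"]
    )
    update_crm(ticket.ticket_id, state.notes["category"], state)
    return state
```

Calling `run_support_agent(Ticket("t-42", "c-1", "I need a refund for last month"))` returns a state object holding the category, the fetched history, the draft, and a record of the CRM update. The point of the sketch is the shape: one goal, several steps, shared memory, and tool calls along the way.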

The available tools and systems an agent can access play a major role in how effectively it performs. So does its ability to reason through options, escalate when needed, and make decisions.

Want to go deeper? Check out: AI Agents Explained: What They Are and Why They Matter

General Purpose AI Agents: The Swiss Army Layer

General-purpose agents are built for breadth. They excel at navigating ambiguity, connecting across tools, and adapting to rapidly changing requirements. You’ll find them in systems like Do Browser, Ollama-based copilots, and Vertex Agent Builder.

What defines this class of agent:

- Broad skillsets across domains

- Natural-language orchestration

- Fast prototyping and iteration

- Multi-user or team-aware workflows

- A wide surface area of available tools for integration and action

These agents thrive in “unknown unknowns” territory. They’re ideal for early discovery, MVP workflows, or serving as the connective tissue across disconnected systems.

A field study of agent usage on platforms like Perplexity found that general-purpose agents are most actively used for exploratory tasks like synthesizing research, comparing sources, and generating structured outputs from unstructured inputs.

That said, general agents come with trade-offs. Their lack of domain grounding can lead to hallucinated logic or missed compliance nuances.

For teams needing more precision, one emerging approach is layering in domain-specific Standard Operating Procedures, which the SOP-Agent framework explores as a bridge between generality and specialization.

Where General Purpose Agents Win

General-purpose agents are built for speed, flexibility, and breadth. They perform best in workflows that are fluid, fast-changing, or loosely defined. Common wins include:

- Exploratory tasks: Research synthesis, competitive data analysis, or breaking down fuzzy business problems.

- Software development support: Agentic coding helpers like Jules act as code copilots, assisting with scaffolding, integration setup, and test generation. That makes them a good fit for prototyping and early builds.

- Cross-app automation: These agents glue together actions across disparate tools and apps. Using platforms like n8n, teams can design agent workflows visually, connecting tools, triggering automations, and embedding decision logic without writing custom code.

- MVP and proof-of-concept phases: When requirements change daily, general-purpose agents let teams build momentum without waiting for perfect specs or training data.

Vertical AI Agents: The Digital Specialist

Vertical AI agents are purpose‑built, domain‑specific systems trained on industry knowledge, workflows, and tools tailored to a particular business context. They combine domain workflows, curated data, and built‑in guardrails to deliver higher accuracy, lower drift, faster time‑to‑value, and inherent compliance compared to general‑purpose alternatives.

Their value lies in precision. These agents are engineered for tasks where accuracy, compliance, and structure are non-negotiable.

Key features include:

- Higher accuracy due to narrow focus and curated inputs

- Lower model drift over time

- Faster time-to-value with minimal configuration

- Built-in compliance tied to domain-specific constraints

They usually ship with opinionated user interfaces and fixed workflows, offering guided, efficient paths through common tasks.

Where Vertical Agents Win

Vertical AI agents work best when the task is high-volume, rules-driven, and intolerant of errors. They’re purpose-built for environments where consistency matters more than flexibility.

Key win areas include:

- Repetitive, rules-heavy workflows: Ideal for structured processes like claims, KYC, or service ticket validation, where outcomes must align with strict business or regulatory logic.

- Strong domain vocabularies and document types: Domains with established taxonomies, templates, and structured records benefit most. Retrieval-augmented generation (RAG) over private document stores adds depth while keeping responses grounded.

- Simpler stakeholder signoff: With pre-defined guardrails and visible logic paths, vertical agents reduce ambiguity, making them easier to approve, easier to trust, and easier to scale.

The Core Comparison: General Purpose vs Vertical AI Agents

The table below highlights where each agent type shines across four common axes. Use it as a quick gut-check when mapping agent opportunities across your org.

| Category | General Purpose Agents | Vertical Agents |
|---|---|---|
| Task Ambiguity | Strong: excels when the problem is undefined or exploratory | Limited: performs best with clearly scoped tasks |
| Data Governance & Compliance | Weaker: fewer built‑in guardrails | Strong: designed around domain rules |
| Integration Depth | Broad but shallow connectors | Deep, contextual integrations (e.g., SAP, Salesforce, EMR) |
| Change Velocity | Strong: adapts quickly to evolving workflows | Weaker: optimized for steady, repeatable processes |

A Special Note on Cost

Understanding how each agent type performs functionally is one thing, but real-world adoption depends just as much on cost, maintenance, and business fit over time.

Many teams choose general-purpose agents because they appear cheaper and faster to launch. But hidden costs, like prompt management, API usage, and downstream fixes, can erode that advantage quickly.

Vertical agents may carry higher upfront costs, but they often deliver lower total cost of ownership (TCO) by reducing rework, aligning faster with stakeholders, and avoiding one-off configurations.

In other words: initial cost ≠ operating cost.
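A quick way to sanity-check that claim is to compare total cost over time rather than launch cost alone. The sketch below assumes a simple linear model (upfront cost plus a flat monthly operating cost). The function names are hypothetical, and any figures you plug in should be your own estimates, not benchmarks.

```python
import math
from typing import Optional


def total_cost(upfront: float, monthly_operating: float, months: int) -> float:
    """Total cost of ownership under a flat monthly operating cost."""
    return upfront + monthly_operating * months


def breakeven_month(
    low_upfront: float,
    low_upfront_monthly: float,
    high_upfront: float,
    high_upfront_monthly: float,
) -> Optional[int]:
    """First month at which the higher-upfront option is no more expensive.

    Returns None if the higher-upfront option never catches up.
    """
    monthly_saving = low_upfront_monthly - high_upfront_monthly
    if monthly_saving <= 0:
        return None
    return max(0, math.ceil((high_upfront - low_upfront) / monthly_saving))
```

With placeholder inputs of 10,000 upfront and 4,000 per month for one option versus 40,000 upfront and 1,500 per month for the other, `breakeven_month(10_000, 4_000, 40_000, 1_500)` returns 12. Those numbers are made up purely to show the arithmetic.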

Why Leaders Run Hybrid Agent Stacks

No single agent can do it all. General-purpose agents are flexible but prone to hallucinations. Vertical agents are precise but rigid.

Leaders are solving this by combining them. One discovers while the other delivers.

A general-purpose agent handles open-ended input, detects intent, and routes the task, while a vertical agent executes with precision. An orchestrator then ensures observability, enforces guardrails, and logs everything for compliance and ROI tracking.

Hybrid stacks deliver three core benefits:

- Observability into how decisions flow through systems

- Controllable autonomy, with clear handovers between agent types

- Clearer ROI, because execution paths are auditable and aligned with business outcomes

This approach mirrors how real agent ecosystems are being built today: instead of relying on one monolithic “super agent,” teams compose multi‑agent systems where different agents specialize and collaborate to solve complex tasks.

Research on multi‑agent architectures shows that decomposing work across specialized agents can improve accuracy and goal success, especially on long‑horizon and multi‑step workflows, compared to single‑agent designs with similar capabilities.

For example, collaborative agent frameworks achieve higher end‑to‑end task performance by enabling role‑specific interactions and error correction between agents.

How it works in practice

User → General Purpose Agent → Router/Orchestrator → Vertical Agents

Each step of the pipeline has guardrails and monitoring built in, so leaders can maintain trust and traceability as automation scales.
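The flow above can be sketched in a few lines. This is an illustrative outline under stated assumptions, not a production design: `claims_agent`, `kyc_agent`, the keyword-based `detect_intent`, and the audit log format are all hypothetical stand-ins. In a real stack the intent step would be a general-purpose model and the vertical agents would be full systems.

```python
from typing import Callable, Dict, List


# Hypothetical vertical agents, each a plain function with a narrow scope.
def claims_agent(request: str) -> str:
    return f"claims: validated '{request}' against claim rules"


def kyc_agent(request: str) -> str:
    return f"kyc: ran identity checks for '{request}'"


VERTICAL_AGENTS: Dict[str, Callable[[str], str]] = {
    "claims": claims_agent,
    "kyc": kyc_agent,
}


def detect_intent(request: str) -> str:
    """Stand-in for the general-purpose agent's intent detection."""
    text = request.lower()
    if "claim" in text:
        return "claims"
    if "identity" in text or "onboard" in text:
        return "kyc"
    return "unknown"


def orchestrate(request: str, audit_log: List[dict]) -> str:
    """Route the request, fall back to a human, and log every decision."""
    intent = detect_intent(request)
    agent = VERTICAL_AGENTS.get(intent)
    if agent is None:
        # Guardrail: nothing in scope, so hand off instead of guessing.
        outcome = "escalated to human: no vertical agent in scope"
        handler = "human"
    else:
        outcome = agent(request)
        handler = agent.__name__
    audit_log.append({"request": request, "intent": intent, "handler": handler})
    return outcome
```

Because every request passes through `orchestrate`, the audit log ends up with one entry per decision, which is the raw material for the traceability described above.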

This hybrid paradigm aligns perfectly with HatchWorks’ approach to Agentic AI Automation, which helps organizations orchestrate these layered agent flows with visibility and control.

The Playbook for Using General vs Vertical AI Agents

Playbook Step 1: Map Your Agentable Work

Before buying or building AI agents, identify the work that’s actually worth automating. The best candidates are high-friction, exception-prone, or repetitive tasks across functions like software development, support, and operations.

Start by looking for work that’s:

- High-friction or repetitive

- Prone to human error or exception handling

- Dependent on multiple tools or manual hops

These are strong signals of “agentable” work, where a well-designed agent can deliver impact without introducing new risk.

A simple way to triage is with:

- Human exception rate: How often does a person need to intervene?

- Data sensitivity: Does the task touch regulated or customer-facing data?

- Tool variety: Are people jumping between systems to get it done?

Teams that take this task-first, not tool-first, approach are more likely to hit production and prove ROI. It’s a consistent pattern in orgs that succeed with AI while others stall at pilot.
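If you want to run that triage across a backlog of candidate tasks, the three signals fit in a small record. The sketch below is one possible encoding. The `Task` fields, the flag wording, and both thresholds are assumptions to be tuned against your own baseline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    name: str
    human_exception_rate: float   # share of runs where a person intervenes, 0.0 to 1.0
    touches_sensitive_data: bool  # regulated or customer-facing data
    tools_involved: int           # systems a person hops between to finish the task


# Hypothetical cut-offs, not recommendations.
EXCEPTION_THRESHOLD = 0.2
TOOL_THRESHOLD = 3


def triage(task: Task) -> List[str]:
    """Return planning flags for a candidate task, one per triggered signal."""
    flags = []
    if task.human_exception_rate >= EXCEPTION_THRESHOLD:
        flags.append("plan human-in-the-loop escalation")
    if task.touches_sensitive_data:
        flags.append("needs data guardrails")
    if task.tools_involved >= TOOL_THRESHOLD:
        flags.append("integration-heavy")
    return flags
```

A task that trips all three flags is not necessarily a bad candidate. It is one where the agent design needs escalation paths, data controls, and integration work planned from the start.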

Playbook Step 2: Choose the Right Agent Type

Once you’ve mapped your agentable work, the next move is matching the right type of agent to the job.

If the task is poorly defined, lacks a UI, or calls for creative problem-solving, then start with a general-purpose agent.

If the task is document-heavy, follows strict rules, or requires compliance, choose a vertical agent.

If your org has multiple interconnected systems (CRMs, ERPs, support platforms), you’ll be best off combining both. You can start with a general-purpose agent, layer in 1–2 vertical agents, and connect everything through an orchestrator.

Teams that map work types to agent types early avoid overbuilding or boxing themselves in.
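The three rules above translate directly into a small decision function. This is a sketch of the rules as written, with one added assumption: a task that shows both general-purpose and vertical signals is treated as a hybrid candidate. The `TaskProfile` fields and return labels are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    poorly_defined: bool = False
    lacks_ui: bool = False
    needs_creative_problem_solving: bool = False
    document_heavy: bool = False
    strict_rules: bool = False
    requires_compliance: bool = False
    interconnected_systems: bool = False


def recommend_agent_type(profile: TaskProfile) -> str:
    """Map a task profile to 'general-purpose', 'vertical', 'hybrid', or 'undecided'."""
    wants_general = (
        profile.poorly_defined
        or profile.lacks_ui
        or profile.needs_creative_problem_solving
    )
    wants_vertical = (
        profile.document_heavy
        or profile.strict_rules
        or profile.requires_compliance
    )
    # Assumption: tasks that pull both ways are treated as hybrid candidates.
    if profile.interconnected_systems or (wants_general and wants_vertical):
        return "hybrid"
    if wants_vertical:
        return "vertical"
    if wants_general:
        return "general-purpose"
    return "undecided"
```

For instance, `recommend_agent_type(TaskProfile(strict_rules=True, requires_compliance=True))` returns `"vertical"`, while a profile with no signals set returns `"undecided"`, which is a prompt to go back to Step 1.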

Want help making the call? Use our downloadable General Purpose vs Vertical AI Agents Decision Matrix to score candidate use cases.

And if you’re planning a larger rollout, HatchWorks offers an AI Roadmap & ROI Workshop to validate your approach, prioritize opportunities, and design the architecture to scale with confidence.

Playbook Step 3: Ground in Domain-Specific Data

Even the best agent architecture won’t deliver without the right data behind it. When using vertical agents, you need to feed them the materials your teams already rely on, such as policy manuals, past tickets, care plans, SOPs, documentation, and contracts.

This is what lets a vertical agent reason with context rather than relying purely on pattern-matching across prompts.

The most effective setups follow a simple loop: Retrieve → Call Tools → Output Action

This pattern is what keeps responses accurate and aligned. Without it, you get drift, hallucination, or brittle behavior.
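Here is a minimal sketch of that Retrieve → Call Tools → Output Action loop for a refund request. All specifics are assumptions for illustration: the two SOP snippets, the order amounts, the 500 threshold, and the function names are hypothetical, and naive keyword overlap stands in for a real retrieval system such as vector search.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical document store: SOP snippets keyed by id.
DOCUMENTS = {
    "sop-refunds": "Refunds over 500 USD require manager approval.",
    "sop-shipping": "Shipping delays over 7 days qualify for a credit.",
}


def retrieve(query: str, top_k: int = 1) -> List[str]:
    """Naive keyword-overlap retrieval, standing in for vector search."""
    words = set(query.lower().split())
    scored = [
        (len(words & set(text.lower().split())), text)
        for text in DOCUMENTS.values()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for score, text in scored[:top_k] if score > 0]


def lookup_order_amount(order_id: str) -> float:
    """Hypothetical tool call; a real one would query an order system."""
    return {"o-1": 620.0, "o-2": 40.0}.get(order_id, 0.0)


@dataclass
class Action:
    name: str
    reason: str


def handle_refund(order_id: str) -> Action:
    context = retrieve("refunds approval policy")   # 1. Retrieve
    amount = lookup_order_amount(order_id)          # 2. Call tools
    # 3. Output action. Without grounding, escalate rather than guess.
    if not context:
        return Action("escalate", "no grounding document found")
    # The rule is hard-coded here; a real agent would pass the retrieved
    # text to a model or a rules engine instead.
    if amount > 500:
        return Action("request_manager_approval", context[0])
    return Action("issue_refund", context[0])
```

Each returned `Action` carries the retrieved passage as its reason, so a reviewer can see which document the decision was grounded in.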

General-purpose agents without grounding may work fine for exploration. But when precision matters, ungrounded agents become a liability.

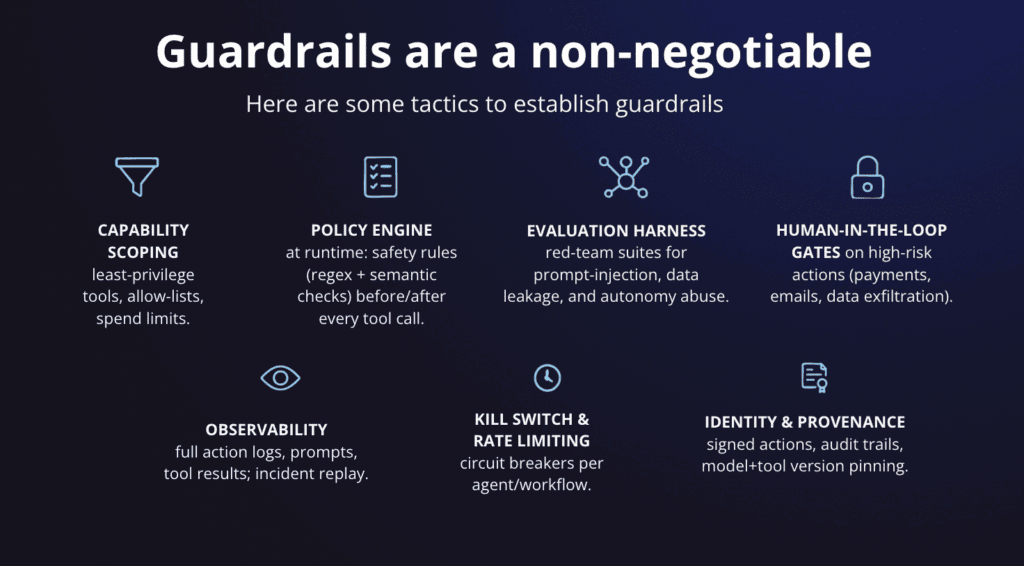

Playbook Step 4: Orchestrate Tools and Guardrails

Documentation isn’t the only thing agents need access to.

To drive real outcomes, they also need to interact with the systems your teams rely on every day. That includes CRMs, ERPs, Git repos, ticketing platforms, and tools like n8n that orchestrate workflows.

This layer of tool access is what turns passive agents into active ones. Without it, agents can recommend, but they can’t resolve.

But with execution comes risk, which is why guardrails matter just as much as access.

To operate safely and predictably, agents need clear parameters:

- Which tools they’re allowed to use

- How many actions they can take per session

- What types of data they can access or write to

- When to pause and escalate to a human in the loop

Without these controls, even a well-trained agent can create operational risk, triggering actions it shouldn’t, or missing context it can’t retrieve.
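The four parameters listed above can be expressed as a small policy object that every tool call passes through. The sketch below is one way to do it, assuming hypothetical names (`Guardrails`, `authorize`, `EscalateToHuman`) and a simple per-session action counter.

```python
from dataclasses import dataclass
from typing import Optional


class EscalateToHuman(Exception):
    """Signals that the agent should pause and hand off to a person."""


@dataclass
class Guardrails:
    allowed_tools: frozenset        # which tools the agent may use
    writable_data: frozenset        # which data stores it may write to
    max_actions_per_session: int    # action budget per session
    actions_taken: int = 0

    def authorize(self, tool: str, writes_to: Optional[str] = None) -> None:
        """Count the call if it is within policy; otherwise raise EscalateToHuman."""
        if tool not in self.allowed_tools:
            raise EscalateToHuman(f"tool '{tool}' is not allowed")
        if writes_to is not None and writes_to not in self.writable_data:
            raise EscalateToHuman(f"no write access to '{writes_to}'")
        if self.actions_taken >= self.max_actions_per_session:
            raise EscalateToHuman("action budget for this session is used up")
        self.actions_taken += 1
```

An agent runtime would call `authorize` before each tool invocation and treat `EscalateToHuman` as the signal to stop and route to a person. Rejected calls do not count against the budget in this sketch, which is a design choice you may want to reverse if repeated denials should themselves trigger a review.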

Playbook Step 5: Measure What Matters (Not Just Tokens)

LLM token counts and model usage stats might look useful, but they rarely tell you if your agents are actually working.

The metrics that matter most are operational:

- Mean Time to Resolution (MTTR): How fast does the agent complete the task?

- Task success rate: Did it get the right result the first time?

- Human override rate: How often does someone need to step in?

- Data access failures: Is the agent hitting roadblocks retrieving what it needs?

These metrics give you a functional view of performance.

To get even more value, break these metrics out by agent type. This helps prove the ROI of vertical agents, where fewer errors and faster completions often translate directly to cost savings and better user experience.

It also tells you where to go next. If a general-purpose agent is frequently escalating or misfiring on a specific task type, that’s a strong signal it’s time to verticalize.
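As a rough sketch, the four metrics above can be computed per agent type from a log of completed runs. The `Run` record and its field names are assumptions about what your logging captures, so adapt them to your own schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Run:
    agent_type: str              # e.g. "general" or "vertical"
    seconds_to_resolve: float
    succeeded_first_try: bool
    human_override: bool
    data_access_failed: bool


def summarize(runs: List[Run]) -> Dict[str, Dict[str, float]]:
    """Group runs by agent type and compute the operational metrics for each."""
    by_type = defaultdict(list)
    for run in runs:
        by_type[run.agent_type].append(run)

    report = {}
    for agent_type, group in by_type.items():
        n = len(group)
        report[agent_type] = {
            "mttr_seconds": mean(r.seconds_to_resolve for r in group),
            "task_success_rate": sum(r.succeeded_first_try for r in group) / n,
            "human_override_rate": sum(r.human_override for r in group) / n,
            "data_access_failure_rate": sum(r.data_access_failed for r in group) / n,
        }
    return report
```

Feeding it a list of `Run` records returns one block of metrics per agent type, which makes it easy to spot a general-purpose agent whose override rate on a given task type keeps climbing.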

Common Failure Patterns to Avoid

Certain mistakes show up again and again in failed agent deployments. But if you name them early, most are easy to avoid.

1. General-purpose agents without grounding

When agents aren’t connected to relevant data or tools, they hallucinate or go generic. Trust erodes quickly, and users stop engaging as a result.

2. Vertical agents with no way to adapt

Prebuilt doesn’t mean permanent. If you can’t update logic or workflows as the business changes, the agent becomes obsolete.

3. Trying to do it all with one mega-agent

One agent handling every request sounds efficient, but it really isn’t. You get bloated logic, routing confusion, and unpredictable costs. Smaller, focused agents scale better.

4. No alignment between governance and ownership

If the people approving the rollout aren’t the ones accountable for outcomes, things break. Security slows everything down, or worse, blocks deployment entirely.

Industry Mini-Scenarios

Healthcare Operations

Discharge summaries and medical code validation are high-friction, high-risk tasks.

A general-purpose agent handles the messy, freeform work of summarizing care notes in plain language.

Then, a vertical agent, trained on billing codes and healthcare compliance, validates the outputs before submission.

Why it works: GP handles nuance, vertical handles precision.

Field Service Management

Technicians in the field need fast, responsive support. A general-purpose agent manages scheduling, route planning, and inbound requests.

Meanwhile, a vertical agent ensures equipment checks follow SOPs, safety standards, and reporting protocols.

Why it works: GP drives logistics, vertical enforces quality.

Financial Services (FinServ)

Client onboarding and ongoing communication require both flexibility and compliance. A general-purpose agent assists with initial client comms, answering questions, and guiding forms.

Once documents are submitted, a vertical agent runs KYC and AML rule checks before approval.

Why it works: GP engages clients, vertical enforces regulation.

Build vs Buy: When to Bring in HatchWorks AI

The decision to build or buy depends on what you’re optimizing for. Is it speed and certainty or flexibility and control?

- Buy a vertical agent when speed matters, compliance is non-negotiable, or the task involves complex systems like SAP or Salesforce. Prebuilt agents in these cases reduce integration risk and accelerate time-to-value.

- Build a general-purpose agent when you want to explore, prototype, or scale experimentation across product, support, or software teams. This gives you control over how the agent works and how it evolves.

This is where HatchWorks AI comes in.

Our Agentic AI Automation offering helps you design and deploy agent stacks that balance flexibility with control, using best practices around orchestration, tool access, and data grounding.

And if you’re integrating agents into existing workflows, our approach to No-Code Workflow Automation with n8n lets you move fast without rebuilding everything around the agent.

You’ll also get access to HatchWorks’ AI-certified teams, experts in building and integrating agents without overstaffing your internal squads.

We help you move from AI interest to AI impact.

FAQs on General Purpose vs Vertical AI Agents

Can I start with ChatGPT or Gemini and add vertical agents later?

Yes, and that’s actually a common pattern. Start with a general-purpose agent for discovery or prototyping, then layer in vertical agents as tasks stabilize. An orchestrator can handle routing as your system evolves.

Do I need retrieval-augmented generation (RAG) for vertical agents?

Not always, but it helps. Vertical agents built on static rules or APIs can still perform well. Adding domain-specific retrieval boosts accuracy and resilience, especially in dynamic environments.

Are vertical agents just repackaged AI SaaS?

Some are, but the best go further. True vertical agents include an agentic control loop: they can reason, act, and adapt within a defined scope.

What’s the risk of doing nothing?

Shadow agents. When there’s no strategy, teams build their own agents: untracked, insecure, and impossible to scale. Meanwhile, competitors are cutting costs and accelerating workflows. Delay now means more cleanup and more catch-up later.