If you’re like us, always on the lookout for tools that take the hassle out of coding, you should test Codestral Mamba.

Whether you’re here to boost your productivity or simply to find out if Mamba’s the right co-pilot for your coding journey, we’ve got you covered!

Codestral Mamba is Mistral’s latest coding-focused large language model (LLM), designed to make writing code smoother and faster. It comes packed with some impressive features, and in Mistral’s reported benchmarks it is competitive with, and sometimes ahead of, larger 22B and 34B models.

In this article, we’ll dive into the key aspects of Codestral Mamba: its architecture, performance benchmarks, setup options, and a head-to-head comparison with other coding models.

What is Codestral Mamba?

Codestral Mamba is a revolutionary code generation model developed by Mistral AI, designed to elevate your code productivity and streamline code-related tasks.

At its core, Mamba boasts a 7-billion parameter architecture that leverages linear time inference, setting it apart from traditional transformer models. This advanced code generation model excels in producing efficient and accurate code, making it a game-changer for developers.

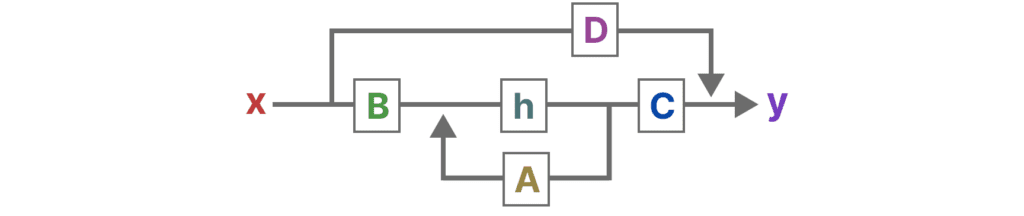

What truly sets Codestral Mamba apart is its state space model (SSM) architecture. Unlike traditional transformer models that process all input sequences uniformly, Mamba’s SSM architecture allows it to selectively focus on the most relevant parts of the input.

The architecture diagram illustrates this selective processing.

Because it processes input sequences efficiently, Mamba can handle complex coding tasks as well as simple ones. Whether you’re working on small scripts or large-scale projects, that efficiency makes it a good fit for a wide range of coding applications.


Overview of Architecture, Transformer Models, and Key Features

Let’s start with what makes Mamba special.

Unlike the more commonly known GPT models, Mamba is built on a State-Space Model (SSM). This means it processes information selectively, which is a fancy way of saying that Mamba only focuses on the important bits, speeding up tasks like context switching and allowing faster code completion.

State space models address two limitations of Transformers: efficiency and context handling. Inference time grows linearly with sequence length rather than quadratically, and in theory the model can work with sequences of unbounded length.
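To make the linear-versus-quadratic point concrete, here is a toy sketch in plain Python. It is not Mamba’s actual implementation: the scalar state, the `gate` function, and the step counters are simplifications we made up for illustration. It puts a recurrence that carries one running state across the sequence (one update per token) next to a count of the token pairs that causal attention would have to score.

```python
import math


def gate(x):
    """Hypothetical gate: keep more of the old state when |x| is small."""
    return math.exp(-abs(x))


def selective_scan(xs):
    """Toy one-dimensional state space recurrence.

    h_t = a_t * h_{t-1} + (1 - a_t) * x_t, where the decay a_t depends on
    the current input. That input dependence is the "selective" part:
    large inputs overwrite the state, small inputs mostly leave it alone.
    Returns the outputs and the number of state updates performed.
    """
    h = 0.0
    outputs = []
    steps = 0
    for x in xs:
        a = gate(x)
        h = a * h + (1.0 - a) * x
        outputs.append(h)
        steps += 1
    return outputs, steps


def causal_pair_count(n):
    """Number of (query, key) pairs causal attention scores for n tokens."""
    return n * (n + 1) // 2


for n in (1_000, 10_000, 100_000):
    _, steps = selective_scan([0.5] * n)
    pairs = causal_pair_count(n)
    print(f"n={n:>7}  scan steps={steps:>7}  attention pairs={pairs:>12}")
```

Multiplying the sequence length by ten multiplies the scan’s work by ten, while the pair count grows by roughly a hundred.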

Where Mamba really shines is its 256k token context limit, making it a fantastic option for large-scale coding projects where you need a model that can remember all the intricate details without missing a beat. This extended token limit helps Mamba perform with remarkable speed, especially compared to transformer-based models.

Architecture Comparison: Mamba2 vs. Transformer

Here’s a quick look at how Mamba2’s state space model architecture stacks up against the widely used Transformer model in terms of efficiency, context handling, and speed:

| Feature | Transformer | Mamba2 |
|---|---|---|
| Architecture Type | Attention-based | State Space Model (SSM) |
| Core Mechanism | Focuses on all parts of input equally | Focuses only on the most important parts |
| Efficiency | Slower as input size increases | Faster, even with large inputs |
| Context Handling | Limited by how much input it can process | Unbounded context handling |
| Key Innovations | Breaks input into smaller chunks to understand it | Selectively processes input, optimizing for speed |
| Training and Inference Speed | Slower due to its design | Faster, optimized for quick responses |

But Mamba isn’t just fast; it’s also hardware-efficient.

Whether you’re working on smaller coding tasks or large enterprise projects, its architecture is designed to minimize resource consumption, which is a win for those who want to optimize their workflows without breaking the bank.


Setting Up: Local vs API for Code-Related Tasks

Setting up Codestral Mamba is as flexible as you want it to be.

You can choose between running it locally or accessing it through an API. If you’re feeling adventurous, you can install it locally using the mistral-inference SDK or download the model from Hugging Face.

If local setup sounds too complicated, Mistral also offers a hosted API. Using the API is a breeze, making it a great option for developers who want to start coding right away without dealing with installation.

Keep in mind, though, that running Mamba locally can offer more control, especially if you’re working on resource-intensive tasks.

So, which option is better?

For developers handling larger projects or who want total control over the model, local installation is the way to go. But if you’re looking for quick access with minimal setup time, the API will save you a lot of headaches.
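For the API route, a request could look roughly like the sketch below. It uses only the Python standard library. The endpoint URL, the model identifier `open-codestral-mamba`, the `MISTRAL_API_KEY` environment variable, and the response layout are our assumptions, so check them against Mistral’s current API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumptions to verify against Mistral's current API documentation.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL_ID = "open-codestral-mamba"


def build_chat_request(prompt, api_key, model=MODEL_ID):
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the text of the first reply.

    Assumes the response body has a choices[0].message.content field.
    """
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")
    if key:
        req = build_chat_request(
            "Write a Python function that reverses a string.", key
        )
        print(send(req))
    else:
        print("Set MISTRAL_API_KEY to run this example.")
```

Keeping request construction separate from sending makes it easy to inspect the payload, or to swap in Mistral’s official client library, without touching the rest of your code.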

With its Apache 2.0 license, Codestral Mamba is not only powerful but also commercially viable. You can confidently use it in your projects without worrying about legal constraints.


Benchmark Performance: How Does Mamba Compare?

So, how does Codestral Mamba stack up when it’s put through its paces?

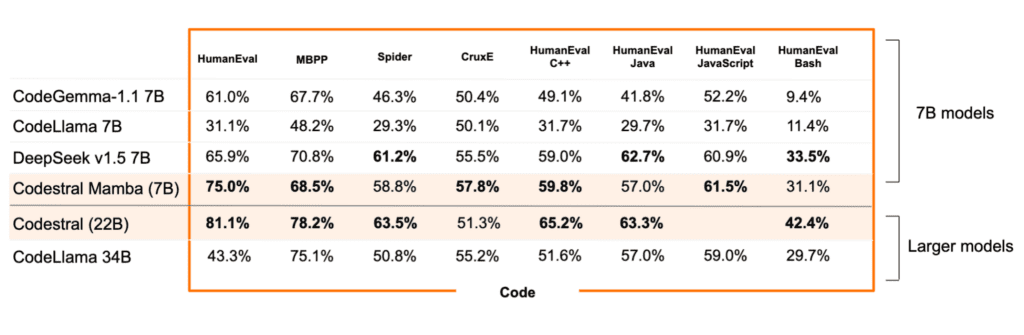

According to benchmark results published by Mistral AI, Codestral Mamba performed strongly on coding tasks relative to other models in its class. As the benchmark table shows, Mamba was ahead of or competitive with other 7B models such as DeepSeek v1.5 and CodeLlama on HumanEval and MBPP, while holding its own against larger models like Codestral 22B.

For those unfamiliar with these benchmarks:

- HumanEval is a code generation benchmark in which the model writes Python functions from a signature and docstring. Each solution is run against unit tests, so the score reflects whether the generated code actually works, not just whether it looks plausible.

- MBPP (Mostly Basic Python Problems) is a benchmark of roughly 1,000 crowd-sourced Python programming problems aimed at entry-level programmers. Each problem pairs a short task description with test cases, measuring how reliably the model solves everyday programming tasks.

These results from Mistral AI’s testing reveal Codestral Mamba’s strength in handling real-world coding tasks, outperforming other models with a 75.0% score on HumanEval and 68.5% on MBPP. This highlights Mamba’s reliability in generating high-quality code efficiently.
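Both benchmarks score a model by running its generated code against tests. The sketch below shows that idea in miniature. It is not the official HumanEval or MBPP harness, the two problems are made up, and a real harness would sandbox and time-limit untrusted code instead of calling `exec` directly.

```python
def passes(candidate_source, test_source):
    """Return True if the candidate code runs and all test assertions hold."""
    namespace = {}
    try:
        exec(candidate_source, namespace)  # define the candidate function
        exec(test_source, namespace)       # run the assertions against it
    except Exception:
        return False
    return True


def pass_rate(results):
    """Percentage of problems whose candidate passed its tests."""
    return 100.0 * sum(results) / len(results)


# Two made-up problems: one correct candidate, one buggy candidate.
problems = [
    ("def add(a, b):\n    return a + b\n", "assert add(2, 3) == 5\n"),
    ("def is_even(n):\n    return n % 2 == 1\n", "assert is_even(4)\n"),
]

results = [passes(candidate, tests) for candidate, tests in problems]
print(results, f"{pass_rate(results):.1f}%")  # [True, False] 50.0%
```

A reported score such as 75.0% on HumanEval is this kind of pass rate, computed over the benchmark’s full problem set.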


Enhancing Code Productivity: What Worked and What Didn’t

Codestral Mamba has proven to be a highly efficient tool for enhancing coding productivity. It performed admirably on simple tasks, such as generating Python functions or HTML pages, with clean and efficient code that ran correctly on the first try.

Its instruction-tuned model allows developers to ask questions and get direct responses, such as creating Python functions to calculate sums or find discounts.
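For a sense of scale, here is what a prompt like “write a function that applies a percentage discount to a price” is asking for. This is a hand-written example, not output captured from Codestral Mamba, and the function name and validation rules are our own assumptions.

```python
def apply_discount(price, percent):
    """Return price reduced by percent (0-100), rounded to 2 decimals."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


print(apply_discount(80.0, 25))  # 60.0
```

Tasks of this size, with a clear input, output, and a couple of edge cases, are where a small instruction-tuned model tends to be most dependable.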

The model shines in routine tasks where quick and accurate code generation is required.

Logical Reasoning: How Mamba Handles Non-Coding Tasks

Codestral Mamba also performed reasonably well in non-coding tasks requiring logical reasoning. In tests with basic math problems, such as calculating total sales, Mamba delivered accurate results.

However, it faltered when faced with multiple, simultaneous questions or more abstract reasoning tasks, such as complex pattern recognition.

Despite these limitations, Mamba shows promise as a valuable assistant for developers who need help with both coding and occasional reasoning-based tasks. Its strengths lie in structured, coding-related challenges, but for more creative or open-ended problem-solving, it may require further development.


Codestral Mamba for Developers: Co-Pilot Capabilities

Now, let’s talk about how Codestral Mamba works as a true developer co-pilot.

Can it be your go-to assistant for daily coding tasks? Absolutely.

It’s excellent for small-to-medium coding tasks, offering fast inference speed and the ability to handle repetitive tasks like writing functions, cleaning up syntax, or handling simple code generation. Mamba’s quick responses (around 0.5 seconds) make it a reliable assistant for everyday coding.

However, while Mamba excels in these areas, it does face limitations when tackling more complex, multi-step projects, such as code that spans multiple files with deep interdependencies.

In such cases, Mamba’s smaller size can be a disadvantage, as it’s optimized for focused, isolated tasks. That said, Mamba shines in automating repetitive coding tasks, like generating boilerplate code or formatting according to project style guides, freeing up developers to focus on more creative and challenging work.

Here’s how Codestral Mamba compares to GitHub Copilot and Amazon Q:

| Feature | Codestral Mamba | GitHub Copilot | Amazon Q |
|---|---|---|---|
| Inference Speed | 0.5 seconds | 0.7 seconds | Slower than both |
| Customization | Fine-tuning based on user projects | Pre-trained, limited customization | Structured suggestions, less customizable |
| Integration with IDEs | Requires setup (e.g., Continue.dev) | Seamless integration with VS Code, IntelliJ, etc. | Comparable to Copilot in ease |
| Pricing | Open-source (free), paid tiers for advanced features | Subscription: $10/month (individuals), $19/month (businesses) | Free access, ideal for budget-conscious users |
| Strengths | Fast, efficient, customizable, handles large token contexts | Widely adopted, multi-language support, easy to use | Highly structured suggestions, good optimization for focused tasks |
| Best For | Small-to-medium coding tasks, custom workflows | Developers needing quick setup and multi-language support | Budget-conscious developers, simpler tasks |

Tool Friendliness and Developer Adoption

GitHub Copilot leads in user-friendliness with seamless IDE integration and minimal setup time, while Codestral Mamba requires more initial configuration using third-party tools.

Once set up, however, Mamba offers blazing-fast response times and is customizable for specific coding styles, making it a great option for developers who need more control over their AI assistant.

On the developer adoption side, GitHub Copilot benefits from an early launch and strong backing from GitHub and Microsoft, with models developed in collaboration with OpenAI, making it the go-to tool for many developers. Amazon Q, while slower and less customizable, appeals to developers due to its free access and cost-effectiveness, making it ideal for those on a budget.

Pricing Breakdown: Cost vs. Value

When it comes to pricing, Codestral Mamba offers the most flexibility. As an open-source tool, Mamba is free to use, with paid tiers for advanced features. This makes it highly appealing for developers working on smaller projects or those looking for a cost-effective solution.

GitHub Copilot operates on a subscription-based model at $10/month for individuals, which can add up over time, especially for teams managing multiple projects.
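To see how a per-seat subscription adds up, here is a quick back-of-the-envelope calculation. The prices are the $10 and $19 monthly figures quoted in this article, while the team sizes are hypothetical.

```python
def annual_cost(seats, price_per_seat_per_month):
    """Yearly subscription cost in dollars for a per-seat monthly plan."""
    return seats * price_per_seat_per_month * 12


# (seats, monthly price per seat, plan label); team sizes are made up.
scenarios = [
    (1, 10, "individual"),
    (10, 19, "business"),
    (50, 19, "business"),
]

for seats, price, plan in scenarios:
    total = annual_cost(seats, price)
    print(f"{seats:>3} seat(s) on the {plan} plan: ${total:,} per year")
```

A 50-person team on the business plan comes to $11,400 a year, which is worth weighing against the setup time a self-hosted model requires.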

Amazon Q, with its free tiers, is a great option for developers who need a tool but don’t want to commit to a subscription, though its slower speed may be a trade-off for those needing immediate responses.

Future Updates and Improvements for Codestral Mamba

While Codestral Mamba offers an impressive foundation in code generation, its future updates are where it truly aligns with HatchWorks AI’s philosophy behind Generative-Driven Development™ (GenDD).

Mamba’s developers are focusing on enhanced customization, allowing models to be fine-tuned not just at the individual level, but potentially across entire teams. This shift will enable smoother collaborative development, a core principle of HatchWorks’ GenDD, making the development process more efficient and consistent.

As Mamba continues to expand its native integration into platforms like GitLab and Jira, it will become even more attractive to teams that need a seamless, end-to-end AI solution to optimize their development pipeline.

Conclusion: Is Codestral Mamba the Future of Coding Assistants?

So, where does all this leave us?

After taking a good look at Codestral Mamba, it’s clear that this coding assistant is not just another AI tool—it’s a serious contender in the race for AI-driven productivity.

Of course, GitHub Copilot is still a great option, especially if you’re looking for a well-established tool with extensive language support. But for those looking for a bit more flexibility and a faster edge, Codestral Mamba just might be the future of coding assistants.

Where Codestral Mamba truly shines is in how it complements the Generative-Driven Development™ (GenDD) approach pioneered by HatchWorks AI. GenDD focuses on reducing friction in the development lifecycle by using AI-native tools to assist in prototyping, scaling, and delivering software solutions faster than ever before.
