Large language models (LLMs) are the unsung heroes of recent Generative AI advancements, quietly working behind the scenes to understand and generate language as we know it.

But how do they work? What are they capable of? And what should we look out for when using them?

Understanding Large Language Models

Let’s get the basics out of the way. Here we’ll define the large language model (LLM), explain how LLMs work, and provide a timeline of key milestones in their development.

What is a Large Language Model?

A large language model, often abbreviated to LLM, is a type of artificial intelligence model designed to understand and generate human language at scale.

When we say human language, we don’t just mean English, Spanish, or Cantonese. Those are certainly part of what LLMs are trained on, but human language, in this context, also extends to:

- Art

- Dance

- Morse code

- Genetic code

- Hieroglyphics

- Cryptography

- Sign language

- Body language

- Musical notation

- Chemical signaling

- Emojis and symbols

- Animal communication

- Haptic communications

- Traffic signs and signals

- Mathematical equations

- Programming languages

LLMs contain billions of parameters and are trained on vast amounts of data drawn from a wide range of sources.

This extensive training enables them to predict and produce text based on the input they receive so that they can engage in conversations, answer queries, or even write code.

Some of the leading models include giants like GPT, LLaMA, LaMDA, PaLM 2, BERT, and ERNIE.

They’re at the heart of various applications, aiding in everything from customer service chatbots to content creation and software development.

Some companies even build their own LLMs, but that requires significant time, investment, and technical expertise. It’s much easier to integrate a pre-trained LLM into your own systems.

How Do Large Language Models Work?

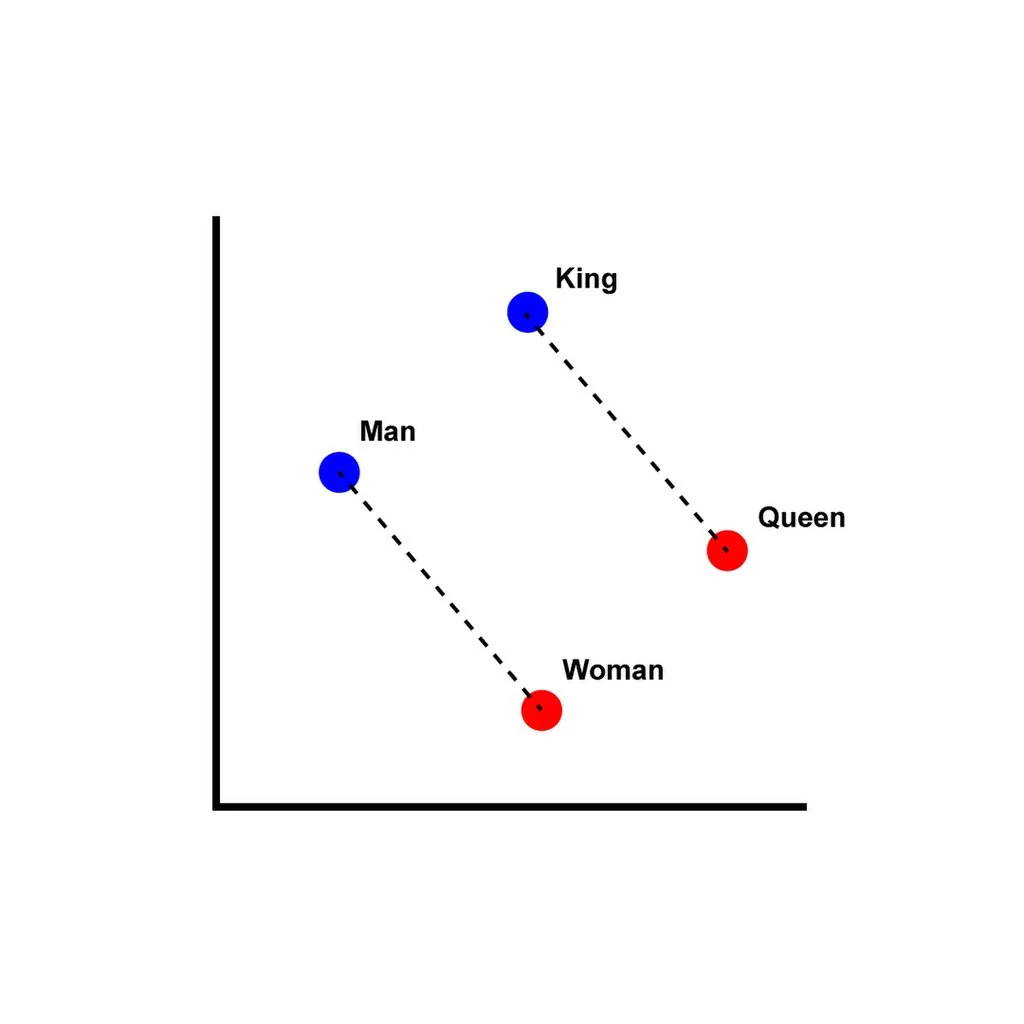

Large language models are built on neural networks, a branch of machine learning (ML). This is what allows the technology to first process and then generate original text and, in multimodal systems, imagery.

Think of neural networks as the LLM’s brain. It’s these networks that learn from vast amounts of data, improving over time as they’re exposed to more.

As the model is trained on more data, it learns patterns, structures, and the nuances of language. It’s like teaching it the rules of grammar, the rhythm of poetry, and the jargon of technical manuals all at once.

During training, the model repeatedly predicts the next word in a sentence based on the words that come before it. Each prediction is compared with the word that actually follows, and the model is adjusted to do better next time. This is done countless times, refining the model’s ability to generate coherent and contextually relevant text.
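To make next-word prediction concrete, here is a minimal sketch that uses simple word-pair counts instead of a neural network. It is a deliberately tiny stand-in, not how a real LLM is implemented, and the corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real model learns from billions of words.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))  # cat
```

A real LLM replaces the count table with a neural network that looks at far more than one preceding word, but the training goal is the same idea: guess what comes next.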

Modern LLMs are also built on the Transformer architecture. This architecture allows the model to look at and weigh the importance of different words in a sentence. It’s similar to how we read a sentence and look for context clues to understand its meaning.
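The weighing of words described here is usually called attention. The sketch below shows a stripped-down version, assuming made-up two-dimensional word vectors and skipping the learned projection matrices that real Transformers apply before comparing words.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Simplified scaled dot-product attention: each vector attends to all vectors."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score every word against the current word, then normalize to weights.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted mix of all word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings for a three-word input (values chosen by hand).
tokens = ["bank", "river", "money"]
embeddings = [[1.0, 0.2], [0.9, 0.1], [0.1, 1.0]]
outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word "pays attention" to every word in the input. With these hand-picked vectors, "bank" gives more weight to "river" than to "money" because their vectors point in a similar direction.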

⚠️ While LLMs can generate original content, the quality, relevance, and innovativeness of their output can vary, so it requires human oversight and refinement.

The originality is also influenced by how the prompts are structured, the model’s training data, and the specific capabilities of the LLM in question.

Key Milestones in Large Language Model Development

Large language models haven’t always been as useful as they are today. They’ve developed and been iterated upon significantly over time.

Let’s look at some of those key moments in LLM history. That way you can appreciate how far they’ve come and the rapid evolution in the last few years compared to decades of slow progress.

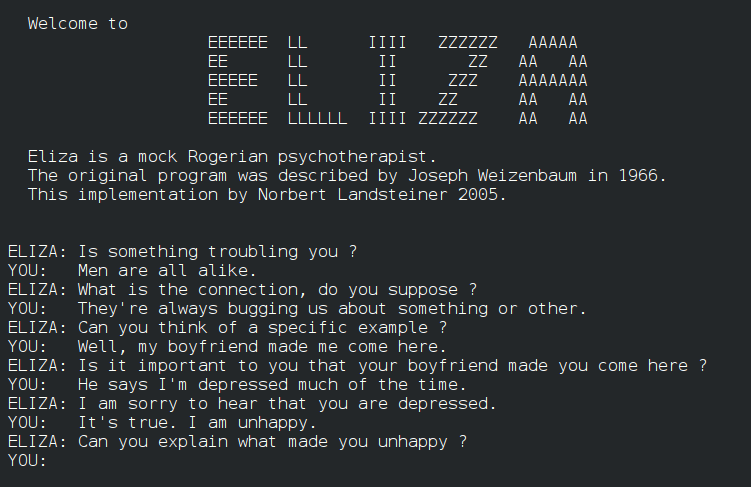

- 1966, ELIZA: The first chatbot, created by Joseph Weizenbaum, simulating a psychotherapist in conversation.

- 2013, word2vec: A groundbreaking tool developed by a team led by Tomas Mikolov at Google, introducing efficient methods for learning word embeddings from raw text.

- 2018, GPT (Generative Pretrained Transformer): OpenAI introduced GPT, showcasing a powerful model for understanding and generating human-like text.

- 2018, BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT significantly advanced the state of the art in natural language understanding tasks.

- 2020, GPT-3: OpenAI released GPT-3, a model with 175 billion parameters, achieving unprecedented levels of language understanding and generation capabilities.

- 2022, ChatGPT: OpenAI introduced ChatGPT, a conversational agent based on the GPT-3.5 model, designed to provide more engaging and natural dialogue experiences. ChatGPT showcased the potential of GPT models in interactive applications.

- 2022, Midjourney: The launch of Midjourney, along with other models and platforms, reflected the growing diversity and application of AI in creative processes, design, and beyond, indicating a broader trend towards multimodal and specialized AI systems.

- 2023, GPT-4: OpenAI released GPT-4, an even more powerful and versatile model than its predecessors, with improvements in understanding, reasoning, and generating text across a broader range of contexts and languages.

Pre-2010: Early Foundations

- 1950s-1970s: Early AI research lays the groundwork for natural language processing. Most famously, Joseph Weizenbaum’s ELIZA (1966) is widely regarded as the world’s first chatbot.

- 1980s-1990s: Development of statistical methods for NLP, moving away from rule-based systems.

2010-2013: Initial Models

- 2013: Introduction of word2vec, a tool for computing vector representations of words, which significantly improved the quality of NLP tasks by capturing semantic meanings of words.

2014-2017: RNNs and Attention Mechanisms

- 2014: Sequence to sequence (seq2seq) models and Recurrent Neural Networks (RNNs) become popular for tasks like machine translation.

- 2015: Introduction of Attention Mechanism, improving the performance of neural machine translation systems.

- 2017: The Transformer model is introduced in the paper “Attention Is All You Need”, setting a new standard for NLP tasks with its efficient handling of sequences.

2018: Emergence of GPT and BERT

- June 2018: OpenAI introduces GPT (Generative Pretrained Transformer), a model that leverages unsupervised learning to generate coherent and diverse text.

- October 2018: Google AI introduces BERT (Bidirectional Encoder Representations from Transformers), which uses bidirectional training of Transformer models to improve understanding of context in language.

2019-2020: Larger and More Powerful Models

- 2019: Introduction of GPT-2, an improved version of GPT with 1.5 billion parameters, showcasing the model’s ability to generate coherent and contextually relevant text over extended passages.

- 2020: OpenAI releases GPT-3, a much larger model with 175 billion parameters, demonstrating remarkable abilities in generating human-like text, translation, and answering questions.

2021-2023: Specialization, Multimodality, and Democratization of LLMs

- 2021-2022: Development of specialized models like Google’s LaMDA for conversational applications and Meta’s OPT (Open Pre-trained Transformer), an openly released family of models.

- 2021: Introduction of multimodal models like DALL·E by OpenAI, capable of generating images from textual descriptions, and CLIP, which can understand images in the context of natural language.

- 2022-2023: OpenAI launches ChatGPT (2022) and GPT-4 (2023), while image generators such as Midjourney emerge alongside them. These models continue to push the boundaries of generating and understanding natural language across various domains and tasks, and they make generative AI accessible to far more people.

Capabilities of Large Language Models

The capabilities of Large Language Models are as vast as the datasets they’re trained on. Use cases range from generating code to suggesting strategy for a product launch and analyzing data points.

This is because LLMs serve as foundation models that can be applied across multiple uses.

Here’s a list of LLM capabilities:

- Text generation

- Language translation

- Summarization

- Question answering

- Sentiment analysis

- Conversational agents

- Code generation and explanation

- Named entity recognition

- Text classification

- Content recommendation

- Language modeling

- Spell checking and grammar correction

- Paraphrasing and rewriting

- Keyword and phrase extraction

- Dialogue systems

And here’s a breakdown of some of the more common ones we see:

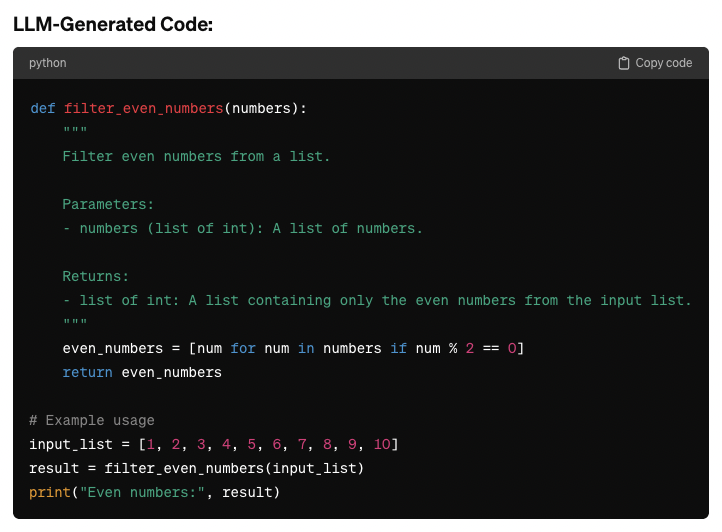

Automated Code Generation

LLMs can generate code snippets, functions, or even entire modules based on natural language descriptions, reducing the time and effort required to implement common functionalities. Here’s an example to illustrate how LLMs can be used for automated code generation:

Prompt:

“Write a Python function that takes a list of numbers as input and returns a list containing only the even numbers.”

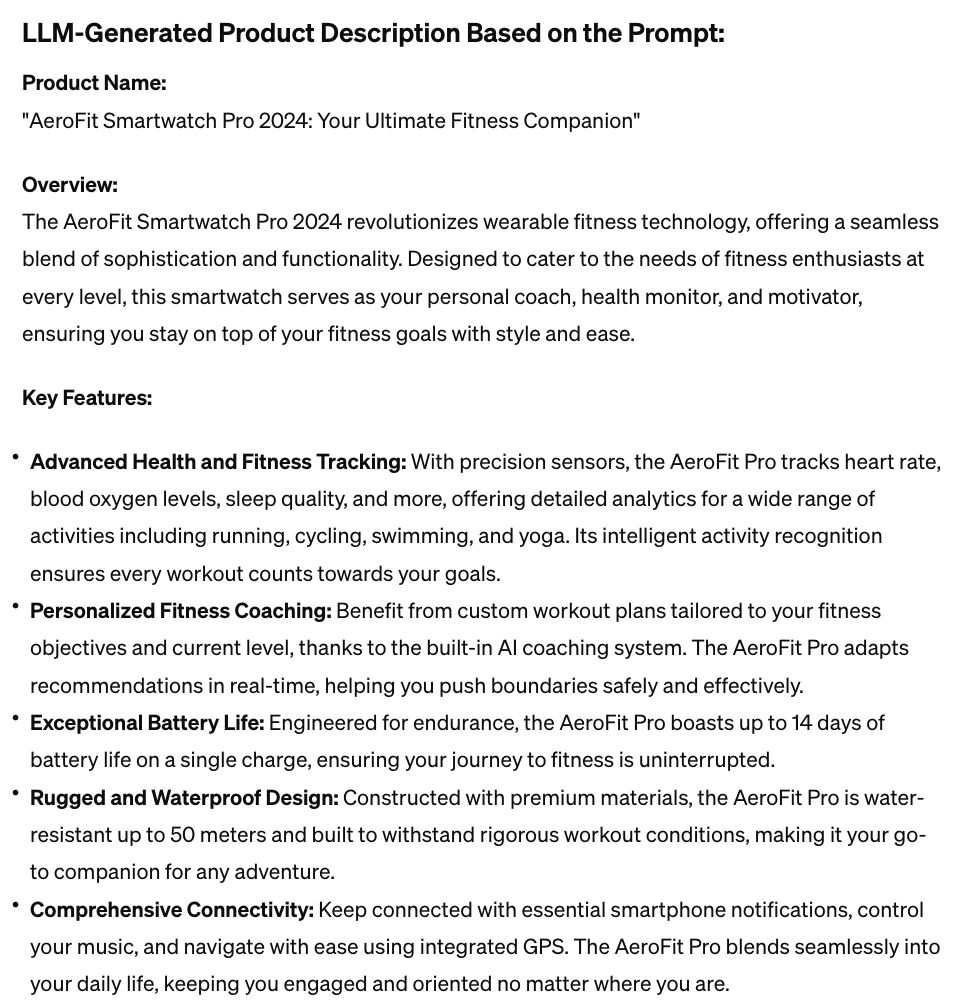
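For readers who can’t see the image, a response to a prompt like this might look something like the sketch below. The function name is our own choice, and an actual model’s output may differ in naming and style.

```python
def filter_even_numbers(numbers):
    """Return a new list containing only the even numbers from `numbers`."""
    return [n for n in numbers if n % 2 == 0]


print(filter_even_numbers([1, 2, 3, 4, 5, 6]))  # [2, 4, 6]
```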

Text Generation

LLMs can generate coherent, contextually relevant text based on prompts. This includes creating articles, stories, and even generating product descriptions. Here’s an example to illustrate how LLMs can be used for text generation:

Prompt:

“Generate a product description for a cutting-edge smartwatch designed for fitness enthusiasts. The description should highlight its advanced health and fitness tracking, personalized coaching, long battery life, durability, connectivity features, and customizable design. Target the description to appeal to both seasoned athletes and beginners interested in tracking their fitness progress.”

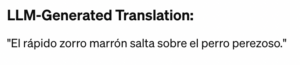

Language Translation

LLMs can translate text between different languages, often with a high degree of accuracy, depending on the languages involved and the model’s training data. Here’s an example to illustrate how LLMs can be used for language translation:

Prompt:

“Translate the following English text into Spanish: ‘The quick brown fox jumps over the lazy dog.’”

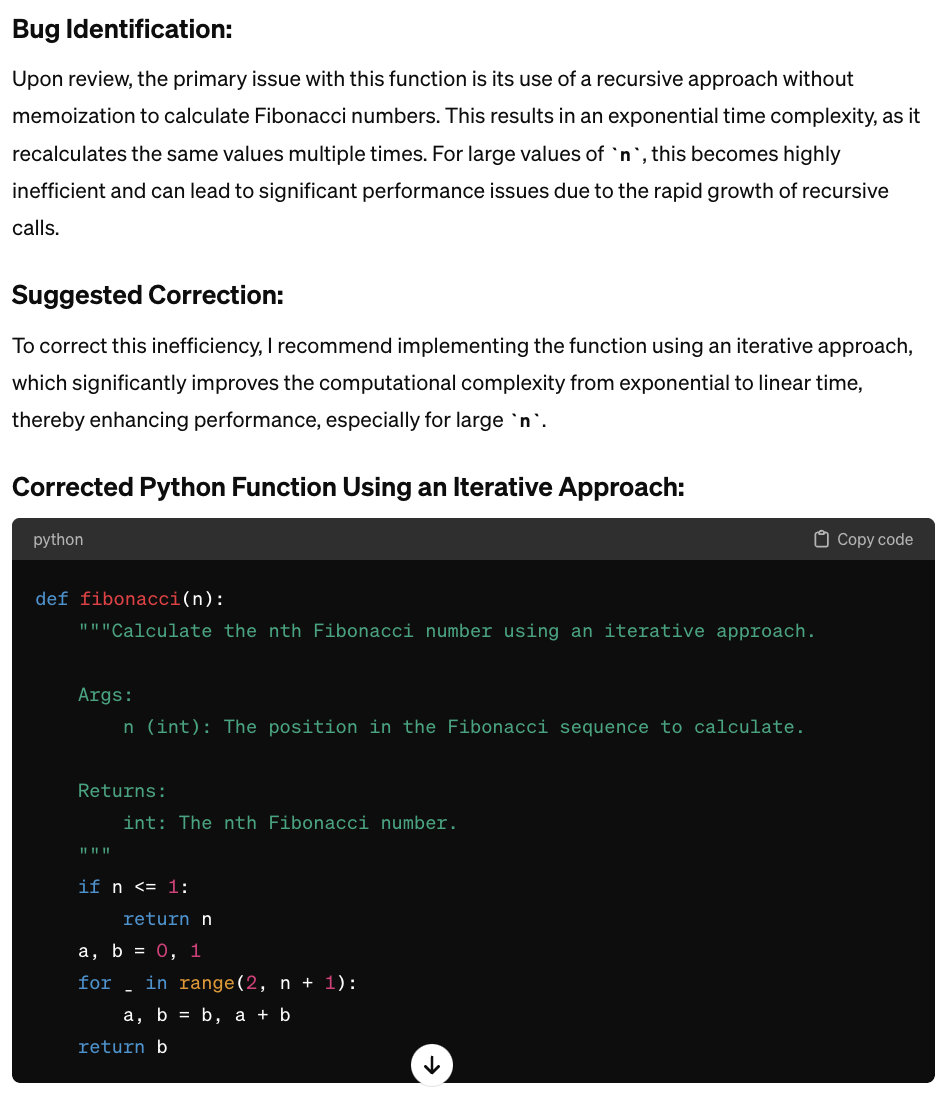

Bug Detection and Correction

LLMs can help identify bugs in code by analyzing code patterns and suggesting fixes for common errors, potentially integrating with IDEs (Integrated Development Environments) to provide real-time assistance. Here’s an example to illustrate how LLMs can be used for bug detection:

Prompt:

“The Python function below intends to return the nth Fibonacci number. Please identify and correct any bugs in the function.

Python Function:

    def fibonacci(n):
        if n <= 1:
            return n
        else:
            return fibonacci(n - 1) + fibonacci(n - 2)

”

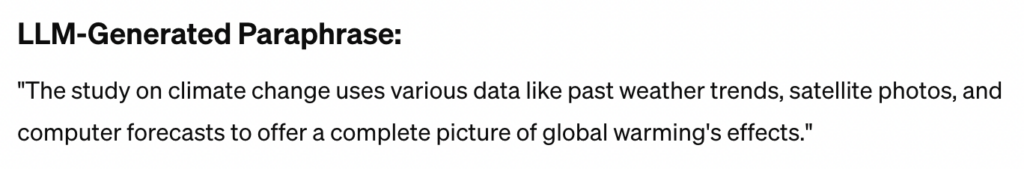
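The function in the prompt returns correct values for non-negative inputs, so a careful review would mostly flag edge cases: a negative `n` is returned unchanged, and the double recursion becomes very slow as `n` grows. One possible correction, offered as a sketch rather than the exact response shown in the image, is an iterative version with input validation:

```python
def fibonacci(n):
    """Return the nth Fibonacci number (0-indexed: 0, 1, 1, 2, 3, 5, ...)."""
    if not isinstance(n, int) or n < 0:
        raise ValueError("n must be a non-negative integer")
    previous, current = 0, 1
    # Step forward n times instead of recursing, so runtime grows linearly.
    for _ in range(n):
        previous, current = current, previous + current
    return previous


print([fibonacci(i) for i in range(10)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```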

Paraphrasing and Rewriting

LLMs can rephrase or rewrite text while maintaining the original meaning, which is useful for content creation and academic purposes. Here’s an example to illustrate how LLMs can be used for paraphrasing:

Prompt:

“Rewrite the following sentence in a simpler and more concise way without losing its original meaning: ‘The comprehensive study on climate change incorporates a wide array of data, including historical weather patterns, satellite imagery, and computer model predictions, to provide a holistic view of the impacts of global warming.’”

Dialogue Systems

LLMs power sophisticated dialogue systems for customer service, interactive storytelling, and educational purposes, providing responses that can adapt to the user’s input.

Think of a chatbot on a software product you use where you can ask it questions and it generates insightful, helpful responses.

Challenges and Limitations of LLMs

Large language models have come a long way since the early days of ELIZA.

In the last two years alone, we’ve seen LLMs power Generative AI and create high-quality text, music, video, and images.

But with any technology, there will always be growing pains.

Technical Limitations of Language Models

Large Language Models sometimes face technical limitations impacting their accuracy and ability to understand context.

Domain Mismatch

Models trained on broad datasets may struggle with specific or niche subjects due to a lack of detailed data in those areas. This can lead to inaccuracies or overly generic responses when dealing with specialized knowledge.

Word Prediction

LLMs often falter with less common words or phrases, impacting their ability to fully understand or accurately generate text involving these terms. This limitation can affect the quality of translation, writing, and technical documentation tasks.

Real-time Translation Efficiency

While LLMs have made strides in translation accuracy, the computational demands of processing and generating translations in real-time can strain resources, especially for languages with complex grammatical structures or those less represented in training data.

Hallucinations and Bias

On occasion, LLMs are too original. So original, in fact, that they make up information. These fabricated outputs are known as hallucinations.

This is a lesson Air Canada learned the hard way when its chatbot told a customer about a refund policy that didn’t exist, a policy the airline was then required to honor.

Finally, LLMs can inadvertently propagate and amplify biases present in their training data, leading to outputs that may be discriminatory or offensive.

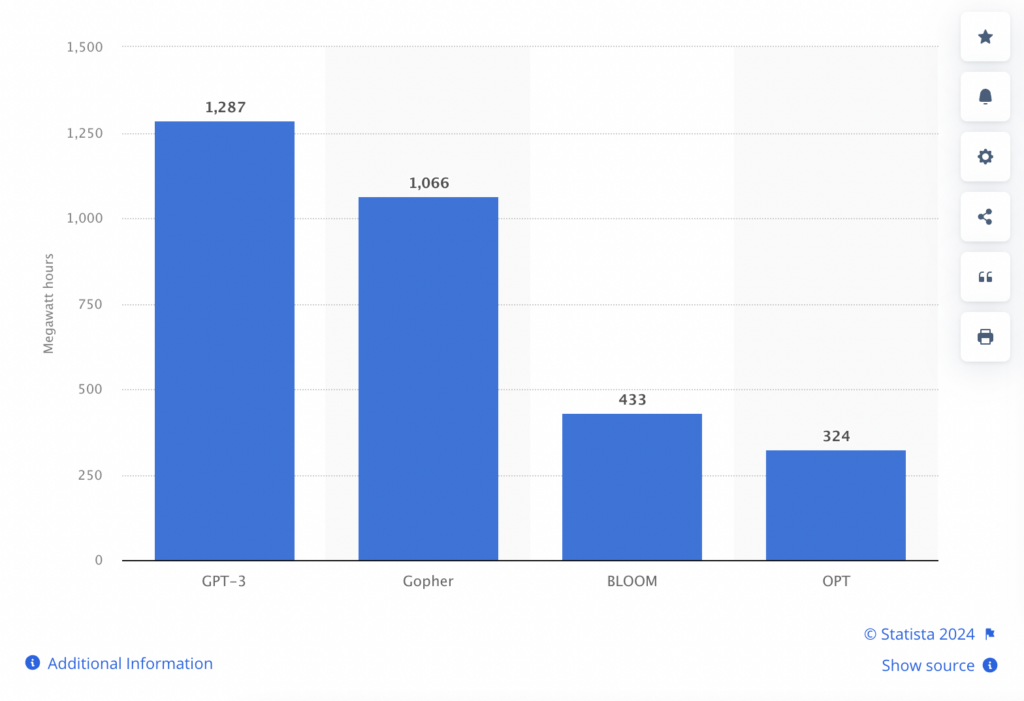

Scalability and Environmental Impact

The scalability of LLMs is tied to the impact they have on the environment. And that impact is turning out to be a big one.

Training a system like GPT-3 took an estimated 1,287 megawatt-hours (MWh) of energy. To put that into perspective, 1 MWh could power about 330 homes for one hour in the United States.
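To see what those two figures imply when combined, here is a rough back-of-the-envelope calculation. It relies entirely on the numbers quoted above (1,287 MWh and 330 homes per MWh-hour), so treat the result as an illustration rather than a measurement.

```python
TRAINING_ENERGY_MWH = 1_287   # GPT-3 training energy quoted in the article
HOMES_PER_MWH_HOUR = 330      # homes powered for one hour by 1 MWh, as quoted in the article
HOURS_PER_YEAR = 24 * 365

# Total "home-hours" of electricity the training run corresponds to.
home_hours = TRAINING_ENERGY_MWH * HOMES_PER_MWH_HOUR
# The same energy expressed as homes powered for a full year.
home_years = home_hours / HOURS_PER_YEAR

print(f"{home_hours:,} home-hours")          # 424,710 home-hours
print(f"about {home_years:.1f} home-years")  # about 48.5 home-years
```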

The image below shows the energy consumption of training four different LLMs.

Energy consumption doesn’t end at training. Operating LLMs also uses an enormous amount of energy.

In one report, Alex de Vries, founder of Digiconomist, estimated that by 2027 the AI sector will consume between 85 and 134 terawatt-hours (TWh) each year. That’s almost the same as the annual energy demand of the Netherlands.

We can’t help but wonder how sustainable that is and what the long-term environmental impact will be on our energy sources. Especially when you consider LLMs are only going to become larger and more complex as we advance their capabilities.

And to maintain large language models, we’ll need to keep retraining them on new data as it becomes available. That will only expend more energy and resources.

The Future of Language Models: What Comes Next?

Now that we’ve seen drastic and rapid improvement in the capabilities of LLMs through Generative AI, we expect users of AI to be fine-tuning prompts and discovering new use cases and applications.

In the workplace especially, the focus will be on productivity hacks. It’s something we experiment with already through our Generative Driven Development™ offering, where our team has increased the productivity of software development by 30-50%.

Hilary Ashton, Chief Product Officer at Teradata, shared her predictions for the future of LLMs and AI in AI Magazine:

“First, I foresee a massive productivity leap forward through GenAI, especially in technology and software. It’s getting more cost-effective to get into GenAI, and there are lots more solutions available that can help improve GenAI solutions. It will be the year when conversations gravitate to GenAI, ethics, and what it means to be human. In some cases, we’ll start to see the workforce shift and be reshaped, with the technology helping to usher in a four-day work week for some full-time employees.”

Hilary Ashton

And she’s right, especially when it comes to ethical considerations and where we humans add value AI can’t replicate.

We’ll also see further democratization of AI as it works its way into other areas of our lives, much the same as the computer has done since its invention.

What we know for certain is that the development of LLMs and Generative AI is only getting started. And we want to be leading conversations on its use, ethics, scalability, and more as it evolves.

You can be part of that conversation too:

Listen to or watch our Talking AI podcast, where we interview AI experts, or sign up for our newsletter, where we share insights and developments on LLMs, AI/ML, and data governance, curated by our very own CTO, Omar Shanti.

Frequently Asked Questions About Large Language Models (LLMs)

1. What is a Large Language Model (LLM)?

A Large Language Model (LLM) is an artificial intelligence model that uses machine learning techniques, particularly deep learning and neural networks, to understand and generate human language. These models are trained on massive data sets and can perform a broad range of tasks like generating text, translating languages, and more.

2. How do Large Language Models work?

Large Language Models work by leveraging transformer models, which utilize self-attention mechanisms to process input text. They are pre-trained on vast amounts of data and can perform in-context learning, allowing them to generate coherent and contextually relevant responses based on user inputs.

3. What is the significance of transformer models in LLMs?

Transformer models are crucial because they enable LLMs to handle long-range dependencies in text through self-attention. This mechanism allows the model to weigh the importance of different words in a sentence, improving the language model’s performance in understanding and generating language.

4. Why are Large Language Models important in AI technologies?

Large Language Models are important because they serve as foundation models for various AI technologies like virtual assistants, conversational AI, and search engines. They enhance the ability of machines to understand and generate human language, making interactions with technology more natural.

5. What is fine-tuning in the context of LLMs?

Fine-tuning involves taking a pre-trained language model and further training it on a specific task or dataset. This process adjusts the model to perform better on specific tasks like sentiment analysis, handling programming languages, or other specialized applications.

6. How does model size affect the performance of Large Language Models?

The model size, often measured by the parameter count, affects an LLM’s ability to capture complex language patterns. Very large models with hundreds of billions of parameters generally perform better but require more computational resources, both during the training process and when the model is in use.

7. Can LLMs generate code in programming languages?

Yes, Large Language Models can generate code in various programming languages. They assist developers by providing code snippets, debugging help, and translating code, thanks to their training on diverse datasets that include programming code.

8. What is “in-context learning” in Large Language Models?

In-context learning refers to an LLM’s ability to learn and perform specific tasks based solely on the input text provided during inference, without additional fine-tuning. This allows the model to adapt to new tasks or instructions on the fly, enhancing its versatility across a broad range of applications.

9. How do LLMs handle multiple tasks like text generation and sentiment analysis?

LLMs are versatile due to their training on diverse data. They can perform multiple tasks like text generation, sentiment analysis, and more by leveraging their learned knowledge. Through fine-tuning, they can be adapted to perform specific tasks more effectively.

10. What are “zero-shot” and “few-shot” learning in Large Language Models?

Zero-shot learning allows an LLM to perform a specific task it wasn’t explicitly trained on by leveraging its general language understanding. Few-shot learning involves providing the model with a few examples of the task within the prompt to guide its response. Both methods showcase the model’s ability to generalize and adapt to new tasks with minimal or no additional training data.
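The difference between the two is easiest to see in the prompt itself. The sketch below builds a zero-shot and a few-shot prompt for a sentiment task. The helper names, prompt layout, and example reviews are all hypothetical, and the code only assembles text; sending it to a model is left out.

```python
def build_zero_shot_prompt(task, text):
    """Ask for the task directly, with no worked examples."""
    return f"{task}\n\nText: {text}\nLabel:"


def build_few_shot_prompt(task, examples, text):
    """Show a few labelled examples before the new input."""
    demos = "\n\n".join(f"Text: {sample}\nLabel: {label}" for sample, label in examples)
    return f"{task}\n\n{demos}\n\nText: {text}\nLabel:"


task = "Classify the sentiment of the text as Positive or Negative."
examples = [
    ("The battery lasts all week.", "Positive"),
    ("The strap broke after two days.", "Negative"),
]
new_text = "Setup was quick and painless."

print(build_zero_shot_prompt(task, new_text))
print("-" * 40)
print(build_few_shot_prompt(task, examples, new_text))
```

In both cases the model’s weights stay unchanged. The few-shot version simply gives the model a pattern to continue.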