If you’re an enterprise or solution architect, you’re probably feeling the squeeze from both sides. The business wants speed. Delivery teams want autonomy.

And you’re still accountable for reliability, security, interoperability, and cost when things go sideways.

That’s the gap this playbook closes.

Not by pretending AI replaces architecture. It doesn’t.

The real win is using GenDD to turn architecture decisions into repeatable, AI-assisted workflows that live in the repo, get reviewed like code, and stay current as systems evolve.

What Liquibase does for SQL schema changes and Semantic Versioning does for REST APIs, this playbook aims to do for enterprise architecture: bring auditability, repeatability, and consistency to a business-critical practice.

Here’s what’s inside:

- A clear definition of Generative-Driven Development™ (GenDD) and what it’s not

- A simple architecture loop you can run in Git, not in slide decks

- Five high-impact workflows: bounded contexts, API contracts, ERDs, IaC/migrations, and standards enforcement

- A pragmatic 30/60/90-day rollout plan with week-1 artifacts

- Starter prompts your team can use immediately

Why Architects Need GenDD Now

AI-era initiatives are piling up, and they rarely fail because “the model wasn’t good enough.”

They fail because the system around the model is messy: unclear boundaries, brittle integrations, inconsistent standards, and slow review cycles.

Anyone who lived through the DevOps and MLOps waves knows this all too well. The model is just the tip of the iceberg; everything around it is what makes or breaks a project.

Architects play a central role in this, and the role comes with a challenge. They are asked to move faster while preventing chaos with emerging technologies: to launch today while learning from yesterday and safeguarding tomorrow. They are also asked to build bespoke solutions that still conform to the enterprise’s evolving standards.

That’s the tension GenDD helps resolve.

Done well, GenDD is a structured antidote to “vibe coding” because it pushes architecture decisions into versioned workflows that teams can run repeatedly, not just discuss in meetings.

What Is GenDD?

GenDD (Generative-Driven Development) is the practice of embedding AI and agent workflows across the software development lifecycle.

For architects, that shift is less about “AI generating code” and more about AI accelerating the design work that ensures things are built in the right way for the enterprise. This involves optimizing for an enterprise’s bespoke prioritized blend of scalability, cost-efficiency, auditability, and other non-functional requirements.

It also comes with a reality check: GenDD is only as good as the context you give it. If your standards, diagrams, and decision history live in scattered slide decks and one-off docs, AI will produce confident output that’s disconnected from how you actually build.

So GenDD starts with a deceptively simple rule:

The repo is the system of record.

Prompts, standards, contracts, diagrams, and documentation live alongside the code. They’re versioned, diffable, reviewable, and easy to update when the system changes.

That one move eliminates a lot of “architecture drift” before you ever get to fancy agents.

The Gap: Traditional Architecture in an AI-Native World

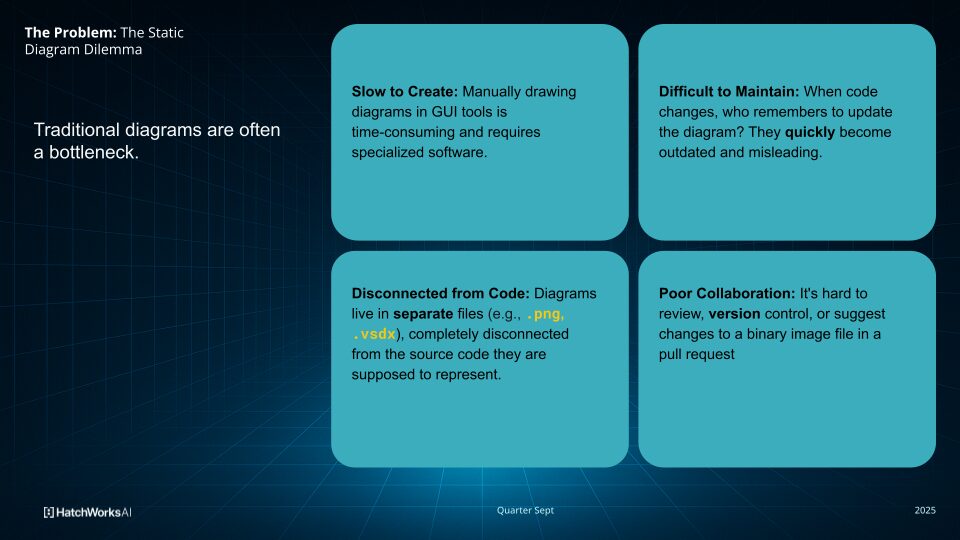

Traditional architecture practices and artifacts aren’t wrong. They’re just too slow at the pace most enterprise teams ship today.

You’ve felt the pain:

- Days lost in onboarding because the system diagram created at kickoff has diverged from reality.

- Missed deadlines and delayed releases due to siloed knowledge created by a “code first, document later” mindset.

- Compliance, security, and usability issues are caught only after a breach, because standards enforcement is manual, inconsistent, and dependent on who reviews the PR.

The issue is that architecture is often static, slow, and painful. So we asked the question: how do we solve this? How can we bring architecture to the AI era?

Enter GenDD.

GenDD’s purpose is to shrink the distance between intent and implementation by making architectural work faster to produce and easier to keep current.

Core GenDD Principles Through an Architect’s Lens

GenDD doesn’t promise “automatic everything.” The reality is more practical. It’s a workflow multiplier when you set guardrails.

- Context first: AI needs a curated corpus (docs, diagrams, standards) to reason consistently.

- Orchestration: repeatable workflows for documentation, reviews, and infra provisioning.

- Tight feedback loops: automated audits on code, diagrams, and infra against standards.

- Human-in-the-loop governance: architects still approve high-risk choices and exceptions.

If GenDD removes anything, it should remove heroics, not accountability.

The GenDD Architecture Loop: From Intent to Verified System

Think of this as a repeatable loop you run continuously, not a one-time phase.

1) Capture intent in plain language

Start with the constraints architects care about: data sensitivity, latency budgets, integration boundaries, failure modes, cost targets, and compliance requirements. Marry those with enterprise architecture standards: shared libraries, reusable patterns, API contracts, tech stack selections, and so on. AI is useful here because it can structure messy inputs into a coherent first draft, which you then refine.

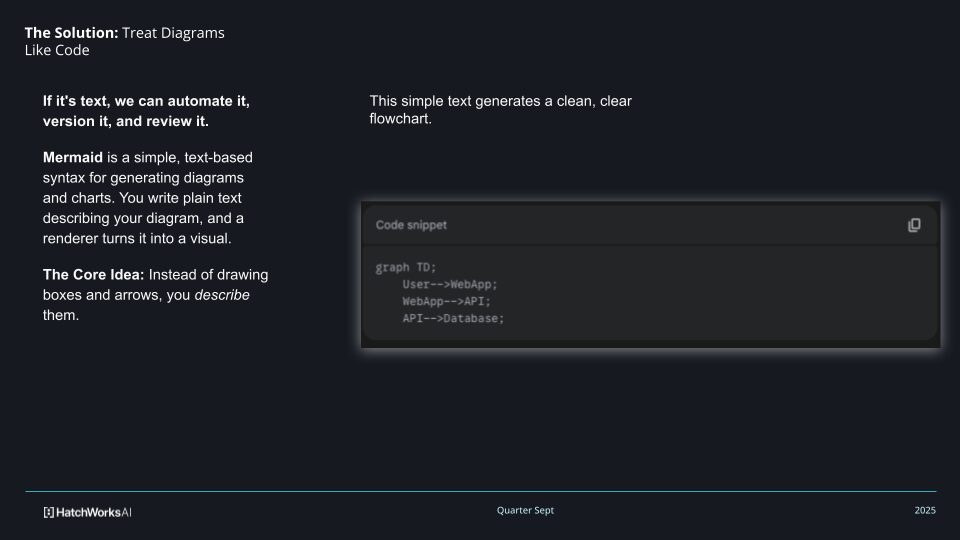

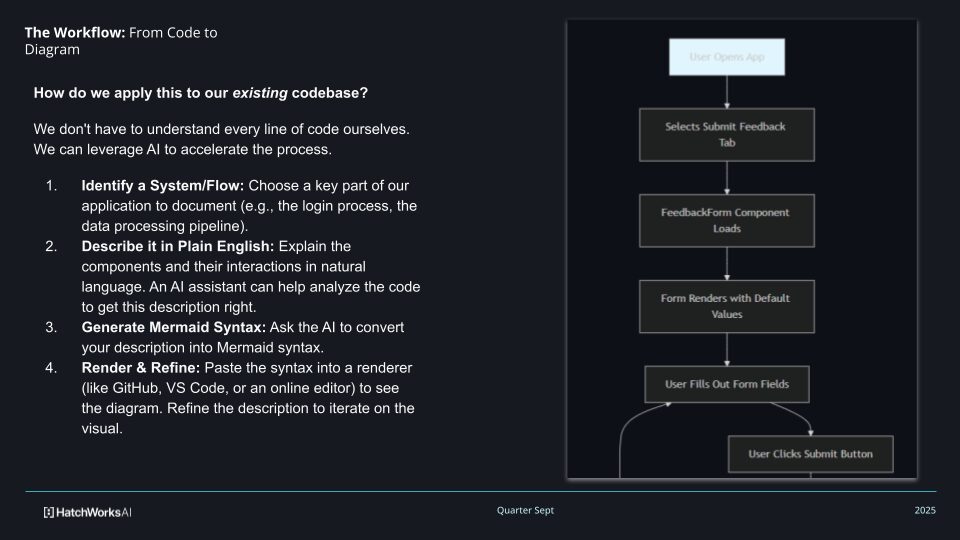

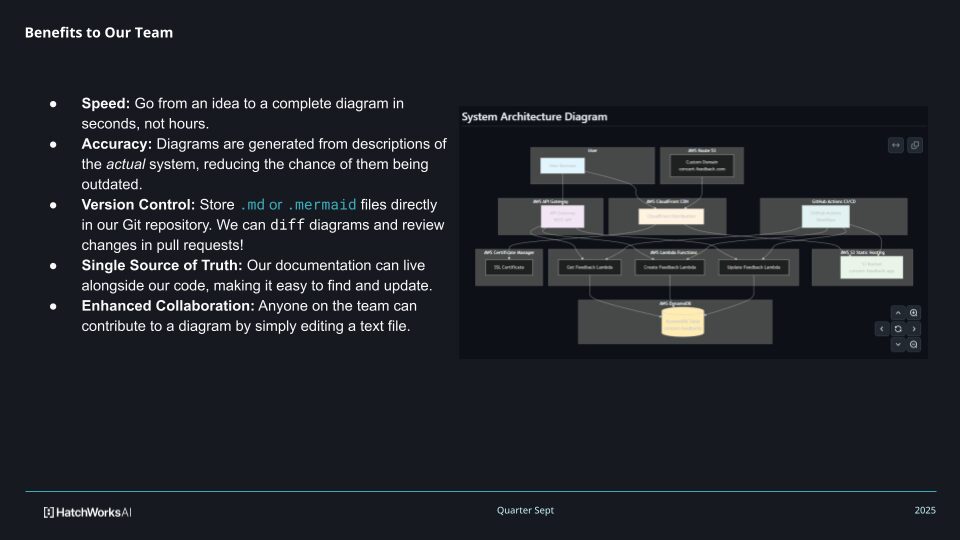

2) Generate diagrams as code (and keep them close to the code)

Use text-based diagram representations (Mermaid is the common choice), so diagrams are easy to review and update. The point isn’t pretty pictures. It’s fast iteration and source control. Think Liquibase.
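To make "diagrams as text" concrete, here is a minimal sketch that renders a Mermaid flowchart from plain Python data. The `Edge` type, the `to_mermaid` function, and the node names in the usage note are hypothetical; only the Mermaid flowchart syntax itself is standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str  # id of the calling component
    target: str  # id of the component being called
    label: str   # short description of the interaction


def to_mermaid(nodes: dict[str, str], edges: list[Edge]) -> str:
    """Render a left-to-right Mermaid flowchart from node ids/labels and edges."""
    lines = ["flowchart LR"]
    for node_id, label in nodes.items():
        lines.append(f'    {node_id}["{label}"]')
    for edge in edges:
        lines.append(f"    {edge.source} -->|{edge.label}| {edge.target}")
    return "\n".join(lines)
```

Calling `to_mermaid({"web": "Web App", "orders": "Orders Service"}, [Edge("web", "orders", "REST")])` returns three lines of Mermaid text. Because the output is plain text, a change to a component or an interaction shows up as an ordinary diff in a pull request.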

3) Derive contracts, schemas, and infrastructure definitions

With intent and diagrams aligned, use them to generate concrete artifacts: OpenAPI contracts, JSON Schemas, ERDs/DDL, Terraform modules, and migration plans. That’s the payoff of treating diagrams as code.

AI accelerates the drafting; architects keep control of the standards and constraints.
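As one illustration of deriving a concrete artifact from a reviewed description, the sketch below turns a small entity definition into a `CREATE TABLE` statement. The `Column` type and `to_ddl` function are hypothetical, and the generic SQL they emit would need adjusting for your database and naming standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    sql_type: str
    nullable: bool = True
    primary_key: bool = False


def to_ddl(table: str, columns: list[Column]) -> str:
    """Render a CREATE TABLE statement from a list of column definitions."""
    parts = []
    for col in columns:
        piece = f"{col.name} {col.sql_type}"
        if col.primary_key:
            piece += " PRIMARY KEY"
        elif not col.nullable:
            piece += " NOT NULL"
        parts.append(piece)
    body = ",\n    ".join(parts)
    return f"CREATE TABLE {table} (\n    {body}\n);"
```

The point of the sketch is the direction of flow: the entity definition is the reviewed input, and the DDL is a derived output that can be regenerated whenever the definition changes.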

4) Implement, then continuously audit drift

This is the step that makes GenDD “enterprise-grade.”

You don’t just generate artifacts; you validate that the system stays aligned through audits and checks: standards conformance, contract validation, security requirements, missing tests, naming consistency, and dependency drift.
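One of these checks, contract validation, can be sketched as a comparison between the operations a contract declares and the routes a service actually exposes. The `contract_drift` function is hypothetical. It assumes the contract has already been parsed into a dictionary with an OpenAPI-style `paths` section, and that you can list implemented routes as `(METHOD, path)` pairs.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def contract_drift(
    spec: dict, implemented: set[tuple[str, str]]
) -> dict[str, list[tuple[str, str]]]:
    """Compare (METHOD, path) pairs declared in the contract with implemented ones."""
    declared = {
        (method.upper(), path)
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS  # skip non-operation keys such as "parameters"
    }
    return {
        # Routes that exist in code but are missing from the contract.
        "undocumented": sorted(implemented - declared),
        # Operations promised by the contract but not found in code.
        "unimplemented": sorted(declared - implemented),
    }
```

An empty result on both keys means the contract and the implementation agree on their surface area. It says nothing about request or response shapes, which would need a separate check.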

A practical “step zero” that helps: ask the assistant to summarize the repo like a new engineer. If it can’t explain your system cleanly, that’s usually a sign your architecture knowledge isn’t captured in a reusable way yet.

Use Case 1 – Bounded Context Creation with GenDD for Architects

Bounded contexts help you scale development without tightly coupling components. But in practice, they fail when boundaries exist only in people’s heads or in a one-time diagram nobody updates.

GenDD helps you go from “we think these are the boundaries” to “these are the boundaries, here’s how we enforce them, and here’s what changes require review.”

A good workflow looks like this:

- Use AI to propose candidate domain boundaries based on service descriptions, tickets, integration patterns, and team ownership.

- Generate a system context diagram and a short “context narrative” in Markdown.

- Review with the team, then commit it in /docs so it becomes reusable and enforceable.

- Use this single source of truth to prevent drift by checking whether new endpoints or services unintentionally cross boundary lines.

What you want in the repo

- A Mermaid system context diagram

- A bounded-context decision doc (short, opinionated, and specific)

- A simple checklist for what “crossing a boundary” requires (contract review, security review, etc.)
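The boundary-crossing check can be sketched as a small function over a context map. Everything here is illustrative: the `boundary_violations` name, the service and context names in the usage note, and the idea that approved cross-context links are stored as `(source_context, target_context)` pairs are all assumptions, not a prescribed format.

```python
def boundary_violations(
    owner: dict[str, str],
    allowed: set[tuple[str, str]],
    calls: list[tuple[str, str]],
) -> list[tuple[str, str]]:
    """Return service-to-service calls that cross contexts without an approved link.

    owner   maps each service to its bounded context.
    allowed holds approved (source_context, target_context) pairs.
    calls   lists observed or proposed (caller, callee) service pairs.
    """
    violations = []
    for caller, callee in calls:
        src, dst = owner[caller], owner[callee]
        if src != dst and (src, dst) not in allowed:
            violations.append((caller, callee))
    return violations
```

For example, with `owner = {"checkout": "ordering", "ledger": "billing", "profile": "identity"}` and `allowed = {("ordering", "billing")}`, a call from `checkout` to `ledger` passes, while a call from `ledger` to `profile` is flagged for the review named in your checklist.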

Use Case 2 – API Contract Definition and Governance

APIs usually don’t fail in immediate, overt ways. They fail later, in more subtle and far-reaching ways that derail development team velocity and cause compliance and regulatory nightmares. The usual suspects are inconsistent naming, undocumented behaviors, surprise breaking changes, and error-handling chaos.

GenDD helps by making contract work faster and more enforceable. You start with intent (“what consumers need”), generate a contract draft, validate it against your enterprise standards, stand up a mock endpoint that serves dummy data, and then implement the logic.

A strong pattern is:

- AI drafts OpenAPI specification + examples quickly, according to enterprise API standards.

- Architects review for things AI may not reliably judge: domain correctness, stability expectations, security posture, and versioning strategy.

- AI creates and publishes a mock endpoint that serves the examples.

- Automated checks validate the contract against your standards, so review becomes predictable.

Keep the standards explicit

Instead of “we usually do it this way,” encode it:

- auth requirements

- naming conventions

- pagination patterns

- error format and status code rules

- performance expectations

That’s how you scale governance without becoming the API gatekeeper for every team.
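Here is a rough sketch of what "encode it" might look like for four of the standards above. The specific rules (kebab-case path segments, a `limit` query parameter on collections, at least one documented error response) are example conventions chosen for illustration, not recommendations, and `lint_contract` is a hypothetical name. It assumes the contract is already parsed into a dictionary with string response codes.

```python
import re

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}
KEBAB_SEGMENT = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def lint_contract(spec: dict) -> list[str]:
    """Return human-readable findings for a parsed OpenAPI-style document."""
    findings = []
    for path, item in spec.get("paths", {}).items():
        # Naming convention: literal path segments must be kebab-case.
        for segment in path.strip("/").split("/"):
            is_param = segment.startswith("{") and segment.endswith("}")
            if segment and not is_param and not KEBAB_SEGMENT.match(segment):
                findings.append(f"{path}: segment '{segment}' is not kebab-case")
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue
            where = f"{method.upper()} {path}"
            # Auth: declared on the operation or once at the top level.
            if not op.get("security") and not spec.get("security"):
                findings.append(f"{where}: no security requirement declared")
            # Errors: at least one 4xx/5xx or default response is documented.
            codes = [str(code) for code in op.get("responses", {})]
            if not any(c == "default" or c[:1] in ("4", "5") for c in codes):
                findings.append(f"{where}: no error response documented")
            # Pagination: GET on a collection accepts a 'limit' query parameter.
            if method == "get" and not path.endswith("}"):
                params = op.get("parameters", [])
                if not any(p.get("name") == "limit" and p.get("in") == "query" for p in params):
                    findings.append(f"{where}: collection lacks a 'limit' query parameter")
    return findings
```

Performance expectations are left out because they cannot be judged from the contract alone. A check like this is meant to make the mechanical part of review predictable, so architects can spend their time on the judgment calls.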

Use Case 3 – ERD and Data Architecture with GenDD

AI-generated ERDs are useful, but only if you treat them as living artifacts.

The value of GenDD for a first ERD is plain to see: DDL in seconds, conventions consistent with enterprise standards, and a design optimized for query patterns. But the bigger payoff comes in the ongoing work of data architecture.

Application teams are under constant pressure to release new features on tight timelines. When these require a change to their data store schema, they need to move nimbly and autonomously (much to the chagrin of the data engineers building ETL pipelines against their databases). Stopping to check whether a change is in line with enterprise standards is a hurdle that is easiest to avoid altogether, and so that’s exactly what they do.

Here is where GenDD shines.

It gives your teams auditable continuity in data architecture, and confidence that proposed schema changes align with the service’s non-functional requirements. And it does so in a fraction of the time, drastically accelerating the shipping of new features in compliant, scalable ways.

A practical workflow:

- Generate an ERD + short schema narrative (“what this data is for”).

- Generate or validate DDL/migration scripts.

- Generate schema changes on the fly, in accordance with enterprise standards.

- Add audits that compare environments/services for drift and flag mismatches.

When you do this well, analytics and lineage improve as a side effect. You’re not “doing extra documentation.” You’re reducing future ambiguity.
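The drift audit in the last step can be sketched as a comparison of two schema snapshots. This assumes each snapshot has already been extracted into a `{table: {column: type}}` dictionary, for example from a migration baseline and from a live environment. The `schema_drift` name and the snapshot shape are hypothetical.

```python
def schema_drift(
    expected: dict[str, dict[str, str]],
    actual: dict[str, dict[str, str]],
) -> list[str]:
    """List table and column mismatches between an expected and an actual schema."""
    findings = []
    for table in sorted(expected.keys() | actual.keys()):
        if table not in actual:
            findings.append(f"{table}: missing table")
            continue
        if table not in expected:
            findings.append(f"{table}: unexpected table")
            continue
        exp_cols, act_cols = expected[table], actual[table]
        for col in sorted(exp_cols.keys() | act_cols.keys()):
            if col not in act_cols:
                findings.append(f"{table}.{col}: missing column")
            elif col not in exp_cols:
                findings.append(f"{table}.{col}: unexpected column")
            elif exp_cols[col] != act_cols[col]:
                findings.append(
                    f"{table}.{col}: type {act_cols[col]} differs from expected {exp_cols[col]}"
                )
    return findings
```

Sorting tables and columns keeps the report stable between runs, which makes the findings easy to diff and to attach to a pull request.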

Use Case 4 – Infrastructure as Code and Cloud Migrations

Reference architectures are valuable. But they’re not execution. And cloud migrations die in the gap between “target state diagram” and “what we actually need to provision.” On the rare occasions when the diagrams are updated, the update itself often introduces further drift.

GenDD helps by producing infrastructure definitions and migration steps as a starting point, then you refine and secure them. Go from architecture diagram to infrastructure as code in seconds.

A pragmatic flow:

- Start from the target architecture and constraints.

- Generate Terraform patterns and a migration plan with prerequisites and sequencing.

- Apply security and cost guardrails early (segmentation, encryption, logging, autoscaling defaults, tagging).

The point is not to “auto-provision production.” It’s to turn migration planning from weeks of workshops into days of concrete, reviewable artifacts.
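A guardrail pass over generated infrastructure definitions might look like the sketch below. It works on a deliberately simplified, hypothetical resource shape (a list of dictionaries with `name`, `tags`, `stores_data`, `encrypted`, and `logging_enabled` keys), not on Terraform's actual plan format, and the required tag names are examples only.

```python
REQUIRED_TAGS = {"owner", "cost-center", "environment"}  # example tagging policy


def guardrail_findings(resources: list[dict]) -> list[str]:
    """Flag resources that miss tagging, encryption, or logging guardrails."""
    findings = []
    for res in resources:
        name = res["name"]
        missing = REQUIRED_TAGS - set(res.get("tags", {}))
        if missing:
            findings.append(f"{name}: missing tags {sorted(missing)}")
        # Encryption is only required for resources that hold data.
        if res.get("stores_data") and not res.get("encrypted", False):
            findings.append(f"{name}: encryption at rest is not enabled")
        if not res.get("logging_enabled", False):
            findings.append(f"{name}: logging is not enabled")
    return findings
```

In practice you would translate your real IaC output into this shape (or adapt the checks to it) and run the function before anything is applied, so the generated baseline is reviewed against the guardrails from the first draft.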

Use Case 5 – Enforcing Enterprise Standards at Scale

This is where GenDD pays off fast.

Most architect burnout is caused by repetitive reviews:

- “Why is this API inconsistent?”

- “Why did we choose this auth pattern?”

- “Why is observability missing again?”

GenDD shifts enforcement from human memory to automated checks:

- Encode standards as templates, checklists, and rules

- Run audits across repos on a cadence

- Route exceptions to humans (with context) instead of sending humans hunting for violations

This is how you move from reactive firefighting to proactive governance—without becoming a blocker.
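Routing exceptions to humans can be as simple as separating findings that already have an approved waiver from those that still need a decision. The sketch below assumes a hypothetical `Finding` record and a waiver list keyed by `(repo, rule)`; your own exception process will likely carry more detail, such as expiry dates and approvers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    repo: str    # repository where the audit ran
    rule: str    # identifier of the standard that was violated
    detail: str  # context a reviewer needs to make a decision


def route_findings(
    findings: list[Finding], waivers: set[tuple[str, str]]
) -> tuple[list[Finding], list[Finding]]:
    """Split findings into (needs_review, waived) based on approved waivers."""
    needs_review, waived = [], []
    for finding in findings:
        if (finding.repo, finding.rule) in waivers:
            waived.append(finding)
        else:
            needs_review.append(finding)
    return needs_review, waived
```

Only the first list reaches an architect, and each item arrives with the repo, the rule, and the detail attached, so nobody has to go hunting for the violation.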

How GenDD Changes the Architect’s Day-to-Day Workflow

Before GenDD, the job was meetings, slide updates, long review cycles, and being the bottleneck by default.

With GenDD, the job becomes orchestration: shorter feedback loops, living diagrams, and automated checks.

Practically, that shows up as “intake prompts” for new projects, copilots in IDEs to keep implementation aligned continuously, and lightweight workshops where teams run prompts against their own context instead of listening to abstract guidance.


Diagrams as Code: Mermaid + AI for Rapid Architecture Iteration

Mermaid isn’t the goal. Versioned diagrams are.

Mermaid works because diagrams become text: versioned, diffable, and reviewable like code. AI makes it usable at speed by producing solid first drafts from plain-English descriptions and repo context.

A practical flow: describe a system or flow → generate Mermaid syntax (system context, sequence diagrams, ERDs) → render and refine in GitHub/VS Code → store it in /docs. Keep docs GitHub-readable so they’re immediately usable.
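The last two steps of that flow, generating Mermaid syntax and storing it in /docs, can be sketched as follows. The function names and the file name in the usage note are hypothetical. The output is a Markdown file with a `mermaid` code fence, which GitHub renders inline.

```python
from pathlib import Path

FENCE = "`" * 3  # built at runtime so the fence does not clash with this example


def to_sequence_diagram(steps: list[tuple[str, str, str]]) -> str:
    """Render (sender, receiver, message) steps as a Mermaid sequence diagram."""
    lines = ["sequenceDiagram"]
    for sender, receiver, message in steps:
        lines.append(f"    {sender}->>{receiver}: {message}")
    return "\n".join(lines)


def write_diagram_doc(docs_dir: Path, name: str, title: str, mermaid: str) -> Path:
    """Write a Markdown file containing the diagram and return its path."""
    docs_dir.mkdir(parents=True, exist_ok=True)
    target = docs_dir / f"{name}.md"
    content = f"# {title}\n\n{FENCE}mermaid\n{mermaid}\n{FENCE}\n"
    target.write_text(content, encoding="utf-8")
    return target
```

For example, `write_diagram_doc(Path("docs"), "checkout-flow", "Checkout flow", to_sequence_diagram([("Web", "Orders", "POST /orders")]))` produces `docs/checkout-flow.md`, ready to commit and review.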

Quantifying the Impact: From Hours and Days to Minutes

If you want to quantify GenDD’s value, don’t try to measure “AI usage.” Measure cycle time reduction in architecture-heavy work:

- Time to produce a system context diagram that’s review-ready

- Time to draft and validate an API contract

- Time to generate a migration plan + IaC baseline

- Time to run standards audits across repos

This is where teams often see the shift from hours or days to minutes, because the workflow becomes repeatable and the artifacts become reusable.
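If you track when each piece of work starts and when it becomes review-ready, the measurement itself is simple arithmetic. The sketch below assumes you can export those two timestamps as ISO 8601 strings; both function names are hypothetical.

```python
from datetime import datetime
from statistics import median


def median_cycle_hours(events: list[tuple[str, str]]) -> float:
    """Median hours between (started, review_ready) ISO 8601 timestamp pairs."""
    durations = [
        (datetime.fromisoformat(done) - datetime.fromisoformat(start)).total_seconds() / 3600
        for start, done in events
    ]
    return median(durations)


def reduction_percent(before_hours: float, after_hours: float) -> float:
    """Percentage reduction in cycle time from a baseline to a new value."""
    return (before_hours - after_hours) / before_hours * 100
```

The median is used rather than the mean so that one unusually slow review does not dominate the number. Compare a baseline period against a period after the workflow is in place, for the same type of artifact.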

Case Study Snapshot: ALTAS AI and GenDD-Accelerated Integration Delivery

ALTAS AI is building a unified cost management platform that brings SaaS, cloud, hardware, and data centers into one place.

That sounds clean on paper. In practice, it means dozens of integrations and a constant stream of external API and infrastructure changes you don’t control.

We used GenDD to compress the integration build cycle and reduce the long-tail maintenance burden.

The key wasn’t “AI writes code.” It was using AI across the workflow to speed up integration logic, validation, and the repeatable updates that follow every third-party change.

What we did

- Ran a collaborative discovery phase with ALTAS AI and pilot customers to prioritize the highest-value integrations and set clear MVP completion criteria.

- Embedded GenDD into delivery using Cursor to accelerate full-stack coding and integration work.

- Put maintenance on rails by automating AI-based analysis for API changes and using iterative review to verify accuracy and functionality with each update.

Results

- Reduced an estimated 20 business days of integration effort to under 5 business days.

- Delivered robust integrations aligned to platform goals, with feedback loops that improved the customer experience.

- Reduced ongoing maintenance staffing needs through automation.

If you’re architecting a platform in a “moving target” ecosystem, this is the real takeaway: GenDD is most valuable when it speeds up delivery and makes change cheaper after launch. That’s where enterprise systems usually bleed time and budget.

Security, Compliance, and Enterprise Readiness in GenDD

The first objection is fair: Can we do this if data can’t leave our VPC?

In most enterprise environments, the real requirements are data control, auditability, and predictable governance, not “no AI.”

GenDD can meet those constraints if you treat it as a workflow design problem first and a tooling decision second. That typically means controlling where models run and how data is handled: using approved providers and private endpoints, deploying models inside your VPC or isolated VMs when needed, and keeping sensitive data out of prompts unless it’s explicitly permitted.

Enterprise readiness also depends on the guardrails around output quality and risk. For high-impact changes, keep humans in the loop with clear approval gates.

And make the system traceable: log what was generated, what was accepted, what was modified, and why. That audit trail is what turns “AI-assisted” into “compliance-friendly.”
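A minimal sketch of such an audit record, written as one JSON line per review decision, is shown below. The field names and the `audit_record` function are assumptions. Storing SHA-256 hashes rather than raw text is also a design choice made for this example: it keeps potentially sensitive prompt content out of the log while still letting you match a record to artifacts stored elsewhere.

```python
import hashlib
import json
from datetime import datetime, timezone


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(prompt: str, generated: str, accepted: str, reason: str, reviewer: str) -> str:
    """Build one JSON line describing what was generated, what was kept, and why."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "reviewer": reviewer,
        "reason": reason,
        # True when the reviewer changed the output before accepting it.
        "modified": generated != accepted,
        "prompt_sha256": _sha256(prompt),
        "generated_sha256": _sha256(generated),
        "accepted_sha256": _sha256(accepted),
    }
    return json.dumps(record, sort_keys=True)
```

Appending each returned line to a log file in an append-only location gives you a trail you can query later. If your policies allow it, you could store the full text instead of hashes.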

Bottom line: GenDD works in regulated settings when governance is built in from day one, and the tools are chosen to enforce that governance automatically, consistently, and with a paper trail.

Implementing GenDD for Architects in 30, 60, and 90 Days

First 30 days: prove the loop on one system

Pick one product area and implement the core loop:

- Repo summary prompt

- System context diagram (Mermaid)

- One bounded context doc

- One API contract + standards checklist

Next 60 days: add automation

Extend to ERDs and IaC patterns. Add lightweight CI checks:

- Contract validation

- Standards checks

- Basic drift detection triggers

Also, identify 1–2 champions who can run the workflow without you.

By 90 days: scale governance

Roll audits across key repos on a cadence. Formalize exception handling. Align with governance groups so GenDD becomes a standard delivery motion, not a side experiment.

Week-1 artifacts to create immediately

- prompts.md (your workflow entry point)

- standards.md (architectural and AI usage standards)

- /docs/ structure for diagrams and decision logs
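These artifacts can be scaffolded with a few lines of script. In the sketch below, the file names come from the list above, while the `diagrams` and `decisions` subfolder names and the placeholder headings are assumptions you should adapt.

```python
from pathlib import Path

DOC_FOLDERS = ("docs/diagrams", "docs/decisions")  # hypothetical layout
STARTER_FILES = {
    "prompts.md": "# Prompts\n",
    "standards.md": "# Standards\n",
}


def scaffold(repo_root: Path) -> list[Path]:
    """Create missing week-1 artifacts and return the paths that were created."""
    created = []
    for folder in DOC_FOLDERS:
        target = repo_root / folder
        if not target.exists():
            target.mkdir(parents=True)
            created.append(target)
    for name, content in STARTER_FILES.items():
        target = repo_root / name
        if not target.exists():  # never overwrite work a team has already done
            target.write_text(content, encoding="utf-8")
            created.append(target)
    return created
```

Running `scaffold(Path("."))` twice is safe: the second call finds everything in place and returns an empty list.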

How GenDD Fits into Your Existing Software Development Company or Partner Model

GenDD doesn’t replace partners. It makes collaboration cleaner.

In-house architects define standards and constraints; partner pods execute using GenDD; shared diagrams and prompts keep everyone aligned across organizational boundaries.

The more explicit your standards and workflows are, the less coordination tax you pay.

What You Can Pilot This Week with GenDD for Architects

Pilot GenDD as a process, not a tool.

Start building AI-powered architecture outputs (diagrams, contracts). Run GenDD-style audits to fight sprawl and tech debt. Shift from reactive firefighting to scheduled AI reviews across key systems.

Copy-paste starters

- “Summarize this repo like a new engineer: components and key flows.”

- “Generate a Mermaid system context diagram and save it in /docs.”

- “Draft an OpenAPI spec that matches current patterns, with examples.”

- “Check this contract against our standards and flag violations.”

- “Identify inconsistencies with our API standards checklist and propose fixes.”

- “Propose a migration plan to the target architecture with prerequisites and sequencing.”

When GenDD for Architects Isn’t a Fit (Yet)

GenDD impact is limited when there are no centralized standards yet (or the architect org is still forming), or when you’re dealing with small legacy systems that are about to be decommissioned.

Treat this as a readiness checklist: if you can’t answer “where do standards live?” or “how do we audit drift?” GenDD will surface that gap quickly.

That’s still progress. It just changes what you pilot first.

Next Steps: Partnering with HatchWorks AI on GenDD for Architects

If you want GenDD to stick, you need more than a few good prompts. You need a repeatable motion your architects can govern, and your teams can run without constantly pulling you into review cycles. HatchWorks AI can help in three ways, depending on where you are today.

- GenDD Training Workshop: If your teams are early, start by getting everyone on the same operating model. The GenDD Training Workshop is expert-led and built around hands-on, practical work: AI-assisted coding/prototyping, live demos, and collaborative exercises that show how to embed AI, agents, and agentic workflows across the SDLC. It can run as a half- or full-day session, remote or on-site.

- GenDD Accelerator: If you’re ready to pilot, use our Generative-Driven Development™ approach to streamline delivery with AI and automation. The focus is measurable throughput, with reported productivity gains of 30–50% when teams operationalize the method across design, development, and testing.

- AI Engineering Teams: If you need execution capacity, plug in AI and data talent on-demand. Our engineers are trained in our GenDD methodology, and you can engage via staff augmentation, dedicated teams, or outcome-based delivery, backed by a 30-day performance promise.