This article walks through a typical day in the life of an AI software developer working inside a GenDD (Generative-Driven Development) workflow.

Whether you’re trying to modernize your team’s process or simply curious about what working with AI actually looks like in practice, this breakdown is designed to help you see and start building toward the future of software development with AI workloads.

GenDD Is a Workflow, Not a Stack

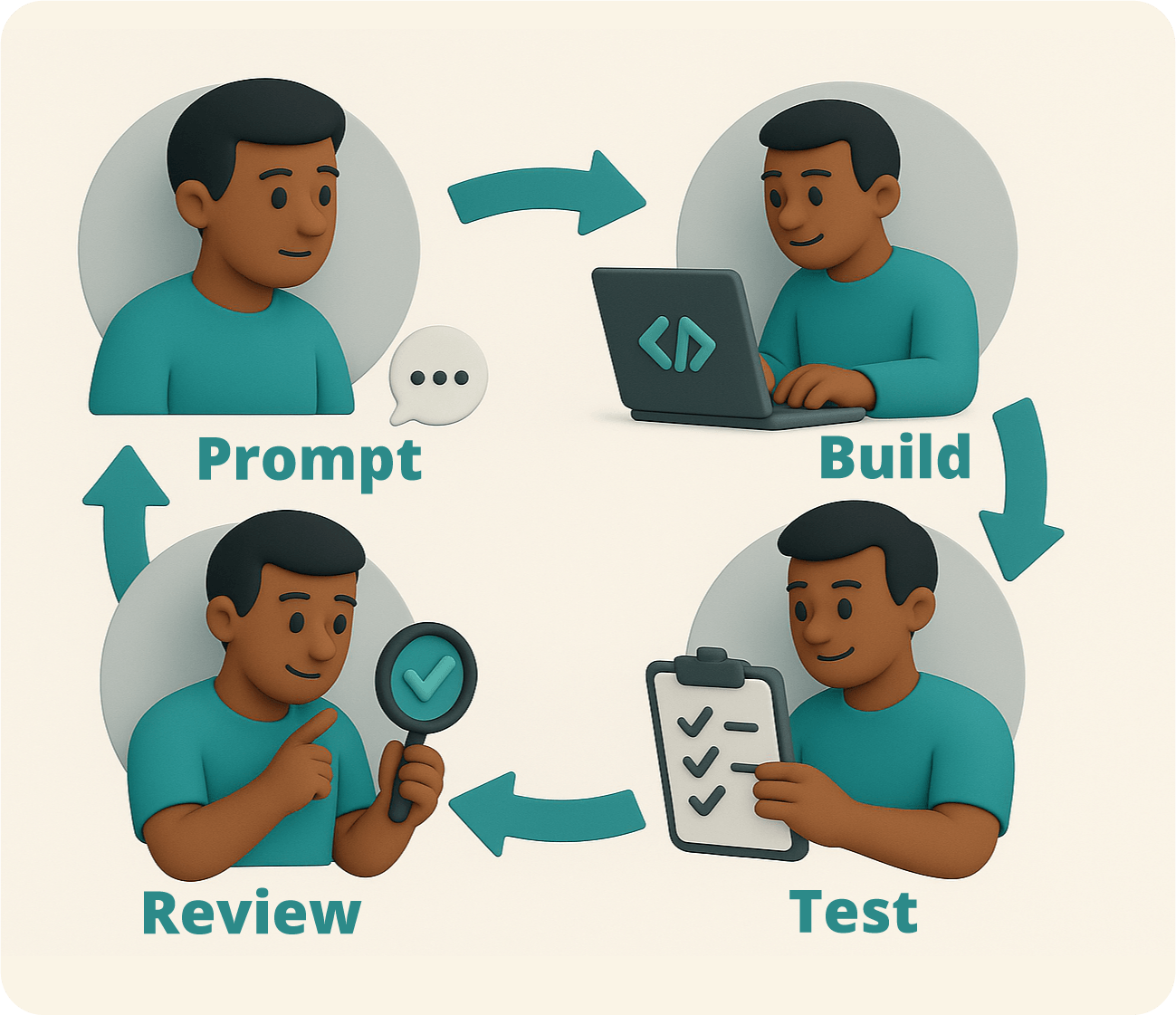

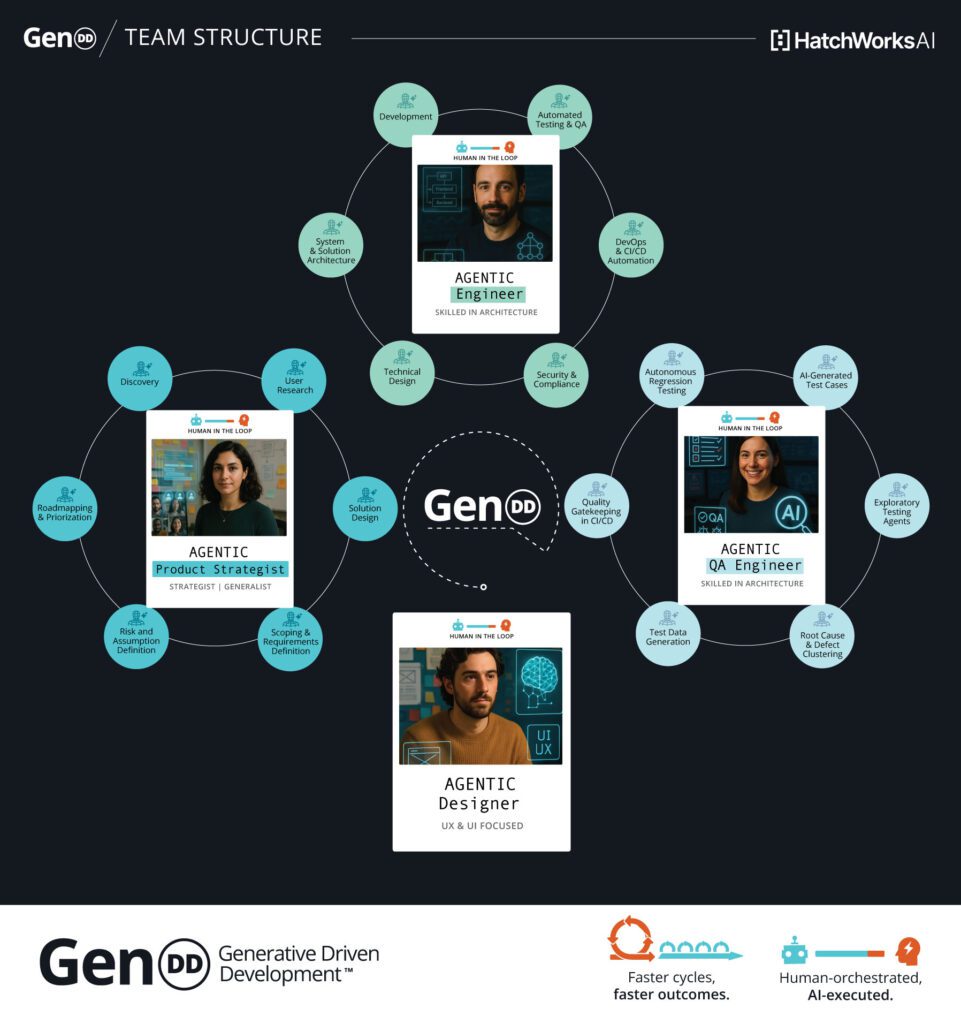

Generative-Driven Development™ (GenDD) is HatchWorks AI’s approach to building software in this new context. It’s a workflow designed around AI-native collaboration. Developers use tools like Lovable, Cursor, Claude, and n8n—not in isolation, but as connected parts of an end-to-end system.

Prompts shape user stories and architecture, but context engineering gives the AI superpowers. Agents automate workflows and reasoning steps. Code, tests, and documentation are often generated in a single pass.

And it’s come about because the days of heads-down coding as the sole driver of progress are behind us. Today’s developers are directing AI systems as much as they are writing code. Or at least, the best ones are—the ones with their eye to the future.

They’re crafting prompts, configuring workflows, evaluating model behavior, supplying context, and guiding the AI’s decisions so that these systems deliver tangible business outcomes.

If you want to see what this looks like in practice, keep reading. We’ll walk you through a typical day in the life of a GenDD developer—tools, tasks, and all.

Our tip: Make this schedule your own or pass it along to your team so they too can embody the AI development team of the future.

A Day in the Life of an AI Software Developer: At a Glance

| Time of Day | Focus Area | Activities & Tools |
|---|---|---|
| 8:30 AM | ☕ Coffee + Kickoff | Skim AI-generated Slack summary from yesterday’s work. Skim prompt logs + commit digests. |
| 9:00 AM | 🚦 Planning & Alignment | Async or live standup. Tools: Cursor, Claude Code, GitHub, n8n, Slack. |
| 9:30 AM | ✍️ Refining Requirements | Translate product specs into prompts, break into tasks. Tools: Claude, DeepSeek, GPT-4. |
| 11:00 AM | 💻 Build & Pair with AI | Code in Cursor, generate tests + docs inline. Copilot for quick scaffolds. Claude /output-style (explanatory or learning) |
| 12:30 PM | 🥗 Lunch + Side Project Time | Casual prototyping, testing tools, or exploring new prompts. Or just eat and recharge for the rest of the day. |
| 1:30 PM | ✅ Testing & Governance | Self-healing tests (CodiumAI, TestRigor, CodeRabbit), run security checks, CI guardrails. |
| 3:00 PM | 🧠 Chain-of-Thought Tasks | Deep reasoning work with Claude Code tag or GPT-4o. Debugging, design tradeoffs. |
| 4:00 PM | 📄 Auto-Documentation + Cleanup | AI summarizes commits, writes integration notes, and logs edge cases. |
| 4:30 PM | 🔍 Async AI PR Review | AI flags logic gaps + edge cases. Developer reviews and revises. |
| 5:00 PM | 🧪 Prompt Library Contributions | Save successful prompts, annotate what worked, and refine the playbook. |
| 5:30 PM | 🚶 Wrap | Sync notes, shut down IDEs, and step away from the machine. |

Early Morning: Planning, Prompting, and Pairing

The goals of your developers at this point in the day are the same as they have always been:

- review what’s been done

- identify what’s next

- surface blockers

- align with the team

What’s changed is how all of that happens. For the AI software developer, the morning starts with a curated, AI-generated summary of the previous day’s work or recent work performed by the Agent. Tools review yesterday’s code pushes, prompt logs, and repo activity, then package it into a quick, digestible update with blockers flagged, wins highlighted, and ambiguities turned into questions.

From there, developers use prompting and pairing tools to move from intention to implementation faster than traditional workflows allow. Some even use a text-to-speech (TTS) feature to get a readout while getting ready, coming back from the gym, or simply taking an early morning walk.

The early part of the day is all about alignment: with the work ahead, with the team, and with the AI systems that will be part of the build. That alignment typically happens through two key workflows—AI-powered standups and the refinement of requirements with AI.

AI-Powered Standups

With GenDD, standups shift from status updates to strategy checkpoints. Because AI has already surfaced yesterday’s work, complete with summaries, blockers, and anomalies, developers come into standup ready to talk about what matters. That includes ambiguous requirements, integration risks, or prompt behaviors that need review.

What you get is a faster, more focused sync where teams spend less time aligning on what happened and more time deciding what to do next.

To make this work in practice, most teams use a combination of:

- Version control systems (GitHub, GitLab) to track commits and diffs

- AI-enhanced IDEs like Cursor to capture prompt context alongside code changes

- Communication tools like Slack or Teams

- Custom agents or workflows built with tools like n8n, Zapier, or MCPs to monitor repos and generate summaries

A common setup might look like this:

- Developers push code or update prompts in Cursor.

- An n8n agent monitors commit activity and extracts relevant metadata.

- A prompt is triggered that reads: “Summarize yesterday’s repo activity and generate 3 questions for today’s standup.”

- The output is posted in Slack, either as a thread or a daily digest, giving the team visibility into what moved, what stalled, and what needs discussion.
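The setup above can be sketched in a few lines of Python. This is a minimal illustration, not how n8n implements it: the `Commit` fields, `build_standup_prompt`, and `run_digest` are hypothetical names, and the model call and Slack post are injected as plain functions so that any client can be swapped in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Commit:
    """Minimal commit metadata; the field names are illustrative."""
    author: str
    message: str
    files_changed: int


def build_standup_prompt(commits: List[Commit]) -> str:
    """Combine commit metadata with the standup instruction quoted above."""
    lines = [
        f"- {c.author}: {c.message} ({c.files_changed} files)"
        for c in commits
    ]
    activity = "\n".join(lines) if lines else "- No commits recorded."
    return (
        "Summarize yesterday's repo activity and generate 3 questions "
        "for today's standup.\n\nRepo activity:\n" + activity
    )


def run_digest(commits: List[Commit],
               call_model: Callable[[str], str],
               post_message: Callable[[str], None]) -> str:
    """Build the prompt, ask the model, and post the result.

    call_model and post_message are hypothetical hooks standing in for
    an LLM client and a chat webhook.
    """
    digest = call_model(build_standup_prompt(commits))
    post_message(digest)
    return digest
```

For a dry run, pass `print` as `post_message` and a stub such as `lambda prompt: prompt` as `call_model`; this shows exactly what the model would receive before any real service is wired in.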

This approach allows for both async and live formats. Some teams still hold brief morning huddles; others rely entirely on the Slack digest.

Our tip: Schedule an in-person stand-up once or twice a week, perhaps on Mondays and Fridays or Mondays and Thursdays. Face time helps your team build camaraderie and familiarity and talk through problems in real time. Just because we can do things async all the time doesn’t mean we should.

Refining Requirements with AI

Once priorities are aligned, developers shift into shaping the work, and this is where AI adds real leverage. Instead of manually translating product specs into technical plans, developers collaborate with models like Claude, DeepSeek, or GPT-4 to generate system designs, outline edge cases, and break down features into testable units.

To set this up, teams typically use:

- LLM interfaces like ChatGPT, Claude, or Cursor for prompt-driven architecture and planning

- Prompt libraries stored in GitHub, Notion, Obsidian, or internal wikis to reuse successful scaffolding patterns

- Task management tools (Linear, Jira, Height) to structure outputs into tickets or subtasks

- Custom workflows in crewAI, Haystack or LangGraph to chain prompt results into follow-up actions—e.g., generating tests or API contracts from the same source input

A typical sequence might look like this:

- The developer drops in a product brief or user story.

- A prompt is issued: “Turn this user story into a set of engineering tasks, acceptance criteria, and initial test cases. We’re using TypeScript and React, and see these documents @agile-guidelines.md, @coding-guidelines.md, and @product-prd.md, and follow them.”

- The model returns structured output ready for review and refinement.

- The dev validates the logic, adjusts for architecture, and feeds tasks into the project board.
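One way to make the "structured output ready for review" step concrete is to ask the model for JSON and validate it before anything reaches the project board. The sketch below assumes a JSON shape (`tasks`, `title`, `acceptance_criteria`, `test_cases`) that is purely illustrative; the article does not specify an output format, and the function names are hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    title: str
    acceptance_criteria: List[str] = field(default_factory=list)
    test_cases: List[str] = field(default_factory=list)


def build_requirements_prompt(user_story: str, context_docs: List[str]) -> str:
    """Attach the guideline documents as @-references, as in the prompt above."""
    refs = ", ".join(f"@{name}" for name in context_docs)
    return (
        "Turn this user story into a set of engineering tasks, acceptance "
        "criteria, and initial test cases. Respond with JSON of the form "
        '{"tasks": [{"title": "...", "acceptance_criteria": [], '
        '"test_cases": []}]}.\n'
        f"Follow these documents: {refs}\n\nUser story:\n{user_story}"
    )


def parse_tasks(model_output: str) -> List[Task]:
    """Reject malformed output instead of passing it on to the board."""
    data = json.loads(model_output)  # raises ValueError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object with a 'tasks' list")
    tasks = []
    for item in data.get("tasks", []):
        title = str(item.get("title", "")).strip()
        if not title:
            raise ValueError("Every task needs a non-empty title")
        tasks.append(Task(
            title=title,
            acceptance_criteria=list(item.get("acceptance_criteria", [])),
            test_cases=list(item.get("test_cases", [])),
        ))
    return tasks
```

Validation of this kind does not replace the developer's review in the last step; it only ensures that what the developer reviews is well-formed.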

In some teams, data scientists and developers collaborate on prompt design and edge case exploration, ensuring AI systems behave reliably under real-world conditions.

And with that, the early morning gives way to late morning, where it’s time to build.

Late Morning: Building with AI Tools

With tasks defined and requirements in place, developers shift into hands-on implementation. But even here, writing code is rarely a solo effort. AI is embedded directly into their tools—ready to scaffold, refactor, debug, and document as the work unfolds.

Code with Cursor or GitHub Copilot

Developers work inside AI-native IDEs like Cursor, where models like GPT-4 Turbo can access the full codebase, understand architecture, and respond in context. Prompts can be embedded inline, tied to file structures, or used to trace logic across modules.

While GitHub Copilot remains useful for quick completions and boilerplate, Claude Code is often preferred for deeper tasks—especially in larger codebases—thanks to its repo-level awareness and support for complex refactoring.

Typical use cases include:

- Scaffolding components based on ticket prompts

- Refactoring functions across modules with consistent naming and logic

- Generating documentation or integration notes directly within the code editor

Multi-Agent Testing with Playwright MCP in Cursor or Claude Code

As features are built, they’re immediately pulled into testing flows—often through multi-agent systems built in tools like n8n. These aren’t just automated test runners—they’re configurable agent chains that coordinate across LLMs, vector stores, and API validators.

For example, a developer might wire up the following:

- An agent to generate unit tests from the function signature and its expected inputs

- A second agent to simulate edge cases and inject likely failure paths

- A third agent to cross-check outputs against a schema pulled from a vector database

- A final agent to log results to GitHub or Slack for review

Each of these steps is prompt-driven but runs autonomously once configured. Developers review results, tune the prompts, and re-run until they’re satisfied with both test coverage and behavior.
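The four-agent chain described above can be modeled as a list of functions that each take and return a shared state. The following is a toy sketch under loud assumptions: every agent is a stub rather than an LLM call, the schema is passed in directly instead of being pulled from a vector database, and all names are hypothetical.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Agent = Callable[[State], State]


def generate_tests(state: State) -> State:
    # Stand-in for an LLM call that writes tests from a function signature.
    sig = state["signature"]
    case = {"name": f"happy path for {sig}", "output": {"id": 1, "name": "a"}}
    return {**state, "cases": [case]}


def add_edge_cases(state: State) -> State:
    # Stand-in for an agent that injects likely failure paths.
    extra = {"name": "missing name field", "output": {"id": 2}}
    return {**state, "cases": state["cases"] + [extra]}


def check_schema(state: State) -> State:
    # Cross-check each output against the required keys in the schema.
    required = state["schema"]
    results = []
    for case in state["cases"]:
        missing = [key for key in required if key not in case["output"]]
        results.append(
            {"name": case["name"], "passed": not missing, "missing": missing}
        )
    return {**state, "results": results}


def log_results(state: State) -> State:
    # Build a plain-text report; posting it to GitHub or Slack is left out.
    lines = [
        f"{'PASS' if r['passed'] else 'FAIL'} {r['name']}"
        for r in state["results"]
    ]
    return {**state, "report": "\n".join(lines)}


def run_chain(agents: List[Agent], state: State) -> State:
    """Run each agent in order, threading the state through."""
    for agent in agents:
        state = agent(state)
    return state
```

Because each agent only reads and extends the state, a single step can be re-run or replaced while a prompt is being tuned, without touching the rest of the chain.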

In more lightweight builds or early-stage prototypes, tools like Replit provide fast environments to test model logic or API flows without needing a full local setup. This is especially useful for internal tools, sandboxed POCs, or experiments with model-agent interaction. And all this is done before a well-deserved lunch.

Early Afternoon: Testing and Deploying Smarter

Once the core functionality is in place, attention shifts to stability and safety. In GenDD workflows, testing and governance are embedded in the development loop, handled by AI as part of the natural flow of work.

Self-Healing Tests

Testing is one of the clearest areas where AI changes the game. Tools like CodiumAI or TestRigor are used to generate unit tests, integration tests, and edge-case scenarios based on live code and recent changes. The process starts with a prompt or simply saving a file—AI tools immediately scan for test coverage and flag gaps.

When a test fails, the workflow doesn’t stop. The model analyzes the failure, proposes a fix, and in many cases updates the test code directly. Developers step in to confirm or override, but the cycle from detection to resolution is much faster and often more comprehensive than manual QA cycles.

This works particularly well when test generation is chained to prompts already used in development. For example:

- A developer prompts for a function scaffold

- The same prompt context is passed into CodiumAI

- The tool generates tests that match both the structure and logic of the implementation

Developers spend time validating, refining, and improving coverage.
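The detect, propose, confirm cycle behind self-healing tests can be expressed as a small loop. This is a sketch of the idea only, not how CodiumAI or TestRigor work internally: `run_test`, `propose_fix`, and `approve` are hypothetical hooks for a test runner, a model call, and the developer's sign-off.

```python
from typing import Callable, Optional


def self_heal(test_source: str,
              run_test: Callable[[str], Optional[str]],
              propose_fix: Callable[[str, str], str],
              approve: Callable[[str, str], bool],
              max_attempts: int = 3) -> str:
    """Return test source that passes, or raise if no approved fix works.

    run_test returns None on success or an error message on failure.
    propose_fix receives the current source and the error message.
    approve receives the current source and the candidate replacement.
    """
    current = test_source
    for _ in range(max_attempts):
        error = run_test(current)
        if error is None:
            return current
        candidate = propose_fix(current, error)
        # The human checkpoint: nothing is applied without approval.
        if not approve(current, candidate):
            raise RuntimeError("Proposed fix rejected by reviewer")
        current = candidate
    # Check the result of the final attempt before giving up.
    if run_test(current) is None:
        return current
    raise RuntimeError(f"Still failing after {max_attempts} attempts")
```

Capping the number of attempts keeps a model that repeatedly proposes bad fixes from looping indefinitely, and the `approve` hook preserves the "confirm or override" step.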

Security and Governance Checks

Alongside testing for functionality, developers also monitor for unintended behavior—both in code and in the AI models themselves. This includes hallucinated responses, unsafe prompt chains, or skipped reasoning steps.

Tools like OpenAI’s eval framework, LangChain’s output parsers, incident.io, or internal prompt guardrails are used to run validation checks on AI-generated outputs. These checks flag:

- Incomplete reasoning (e.g., jumping to a solution without showing logic)

- Skipped validations or null checks in generated code

- Unsafe API calls or insecure authentication patterns

Some teams configure these checks to run as part of the CI pipeline, while others use manual prompt review workflows before outputs are merged.
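As a rough illustration of a guardrail that could run in CI, the sketch below scans AI-generated Python for a handful of risky patterns. The three rules are illustrative assumptions, not a complete or recommended rule set, and the names `RULES`, `Finding`, and `scan_generated_code` are hypothetical.

```python
import re
from typing import List, NamedTuple


class Finding(NamedTuple):
    line_number: int
    rule: str
    line: str


# Illustrative rules only; a real guardrail set would be far broader.
RULES = {
    "tls-verification-disabled": re.compile(r"verify\s*=\s*False"),
    "hardcoded-secret": re.compile(
        r"(?i)(password|api_key|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
    "dynamic-eval": re.compile(r"\beval\s*\("),
}


def scan_generated_code(source: str) -> List[Finding]:
    """Return one finding per rule match, with 1-based line numbers."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(number, rule, line.strip()))
    return findings
```

In a pipeline, a wrapper script could exit with a non-zero status whenever `scan_generated_code` returns findings, which blocks the merge until a developer reviews them. Pattern matching like this catches only the obvious cases; it complements rather than replaces the evaluation tools named above.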

These evaluations borrow best practices from machine learning operations (MLOps)—where continuous validation, guardrails, and model oversight are essential to safety and quality.

It’s a new layer of QA. Less about bugs in the code, more about blind spots in the AI logic.

Late Afternoon: Context Handling & Chain of Thought

As the day winds down, developers turn their attention to tying off loose ends and making the work understandable to others (or to their future selves).

In GenDD workflows, this means leaning on advanced reasoning models and AI-based documentation tools that extend thinking and preserve context.

Reasoning Models at Work

For problems that require multi-step logic, evaluation, or prioritization, developers use models like DeepSeek, Claude Opus, or GPT-5 to break challenges into structured thought flows.

For example: “Break this problem into sub-tasks and reason through each step, identifying dependencies and risks.”

This approach is used for tasks like:

- Designing systems that span multiple services or teams

- Debugging issues where multiple components may be at fault

- Evaluating trade-offs between architectural decisions or implementation paths

The results aren’t always right on the first pass, but they accelerate the thinking process, surface blind spots, and often lead to better questions. Developers use these outputs as scaffolding. Some teams go further, wrapping these reasoning prompts into internal agents that are triggered in certain files or branches.

Auto-Documentation and Agent Summaries

In GenDD workflows, documentation is built as part of the commit process.

When developers push code, tools like Cursor or custom n8n agents generate summaries based on the changes, prompt history, and file context. These include:

- Descriptions of what the code does

- Notes on edge cases or limitations

- Integration considerations for downstream systems

Instead of treating documentation as a separate task, it becomes part of the development output.

What once required hours of manual writing is now handled by agents that understand your project’s file structure, history, and context and generate documentation that sticks.

This is especially valuable when onboarding teammates, revisiting code months later, or debugging systems with multiple contributors.

Evening: Wrapping with Review and Feedback

The final stretch of the day is about tightening the loop. In GenDD teams, the feedback cycle also applies to prompts, patterns, and the entire system of development. AI plays a role here too, helping surface issues early and capturing what’s working to make future work faster and clearer.

Async Peer Reviews with AI Assist

Before code is reviewed by a human, it’s often run through an AI review pass. Tools configured within Cursor, GitHub workflows, or custom agents use models like GPT-4 to scan the PR, flag inconsistencies, and surface edge cases that might be missed in a quick glance.

These AI reviews focus on:

- Logical gaps in implementation

- Unclear diffs or unexplained changes

- Missed test coverage or non-obvious regressions

Developers get this early signal before requesting human review, making the review process faster, cleaner, and more focused on higher-order concerns.

Think of this as a first layer of defense, and one that scales well across multiple teams and codebases.

Feedback Loop to Improve Prompts

Throughout the day, developers issue dozens of prompts. Some are successful, some aren’t. Instead of treating each one as a one-off, GenDD teams track which prompts produce strong results and which ones fall short.

Prompts that consistently lead to accurate code, clean scaffolding, or usable tests are saved into a shared prompt library. This library becomes a searchable, team-specific resource, typically organized by task type, stack, or use case.

For example:

- Prompts that generate reliable test coverage for TypeScript services

- Prompts that break down ambiguous product specs into ticket-ready chunks

- Prompts tuned to handle specific architectural patterns like event-driven systems

AI software developers contribute to this library throughout the week, either directly or by tagging useful prompts in tools like Obsidian, Notion, GitHub, or Slack. Some teams go further by reviewing failed prompts, annotating what went wrong, and updating examples to avoid future errors.
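A shared prompt library does not need special tooling to get started. The sketch below shows one possible in-memory shape, assuming each prompt carries tags, a success and failure count, and free-text notes; the class and field names are hypothetical, and a real team would likely back this with files in GitHub or pages in Notion.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class PromptEntry:
    name: str
    text: str
    tags: Set[str] = field(default_factory=set)
    successes: int = 0
    failures: int = 0
    notes: List[str] = field(default_factory=list)

    @property
    def success_rate(self) -> float:
        total = self.successes + self.failures
        return self.successes / total if total else 0.0


class PromptLibrary:
    def __init__(self) -> None:
        self._entries: Dict[str, PromptEntry] = {}

    def add(self, entry: PromptEntry) -> None:
        self._entries[entry.name] = entry

    def record(self, name: str, worked: bool, note: str = "") -> None:
        """Log one use of a prompt, with an optional annotation."""
        entry = self._entries[name]
        if worked:
            entry.successes += 1
        else:
            entry.failures += 1
        if note:
            entry.notes.append(note)

    def search(self, tag: str) -> List[PromptEntry]:
        """Return prompts with the tag, most reliable first."""
        matches = [e for e in self._entries.values() if tag in e.tags]
        return sorted(matches, key=lambda e: e.success_rate, reverse=True)
```

Recording failures alongside successes is what lets the library surface which prompts to trust, and the notes field gives a place for the "what went wrong" annotations.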

This ongoing refinement helps teams get better at working with AI over time. And it’s here that the GenDD workday ends.

All Day, Every Day: The GenDD Developer Mindset

The work may shift throughout the day (planning in the morning, building by midday, testing and refining by afternoon) but the mindset stays consistent across every hour.

Developers working in a GenDD environment operate differently from traditional engineers, not just because of the tools they use, but because of how they think about their role.

Here’s what the mindset looks like:

They orchestrate, not just execute. Code isn’t the whole job. Developers guide AI systems, structure prompts, shape workflows, and validate results. They design the system around the system.

They prioritize clarity over speed. The goal isn’t to move fast at the keystroke level—it’s to reduce confusion downstream. Clean prompts, clear specs, and structured feedback loops save more time than rapid typing ever could.

They build with context. The best results come when the AI understands the full picture. Developers keep that picture sharp by working in tools like Cursor and Claude, where history, repo structure, and prior prompts are all part of the environment.

They see prompts as artifacts, not throwaways. Every prompt is a potential tool for the next developer—or the next project. Good ones are saved, tested, and improved. The team learns by logging what works and fixing what doesn’t.

They treat tools as extensions, not crutches. AI is embedded in the workflow, but it doesn’t replace critical thinking. Developers stay in the loop, knowing when to follow suggestions and when to challenge them.

The GenDD mindset is as much about discipline as it is about experimentation. It’s structured, fast-moving, and fundamentally collaborative between humans, AI systems, and the workflows that connect them.

How to Become a GenDD Developer

You don’t need to be an AI expert to work this way. But you do need to learn how to think, build, and collaborate differently. What it really comes down to is learning to work with AI as a system.

Here’s how that journey unfolds:

1. Start with Prompting Fundamentals

Begin where most developers enter GenDD: working inside AI-powered IDEs like Cursor or tools like ChatGPT or Claude. Focus less on generating code fast and more on communicating intent clearly.

Key skills to build:

- Writing prompts that include context, constraints, and format expectations

- Iterating based on model feedback

- Using prompt chaining for more complex tasks (e.g., generate → test → document)

Practice this daily. It’s the foundation for everything that follows.
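Prompt chaining (generate → test → document) amounts to feeding each step's output into the next prompt. Here is a minimal sketch, assuming a `call_model` function that takes a prompt string and returns text; the templates and names are hypothetical, not a prescribed format.

```python
from typing import Callable, Dict, Sequence, Tuple

Step = Tuple[str, str]  # (output name, prompt template)

CHAIN: Tuple[Step, ...] = (
    ("code", "Write a function for this task:\n{task}"),
    ("tests", "Write unit tests for this code:\n{code}"),
    ("docs", "Document this code and its tests:\n{code}\n{tests}"),
)


def run_prompt_chain(task: str,
                     call_model: Callable[[str], str],
                     chain: Sequence[Step] = CHAIN) -> Dict[str, str]:
    """Run each step in order, exposing earlier outputs to later prompts."""
    outputs: Dict[str, str] = {"task": task}
    for name, template in chain:
        # Each template may reference the task and any earlier output by name.
        outputs[name] = call_model(template.format(**outputs))
    return outputs
```

Keeping the chain as data makes it easy to insert a review step or reorder stages without rewriting the loop.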

2. Build Context-Aware Workflows

Once you’re comfortable with prompting, start expanding your environment. Use tools like crewAI, Haystack, or even Google ADK to coordinate tasks across models, vector databases, and APIs.

This is where you shift from using AI reactively to designing how it works alongside you.

What to learn:

- How to manage input/output flows between agents

- When to automate versus when to review manually

- How to connect AI outputs into real development tools (e.g., CI/CD, Slack, GitHub)

3. Treat Prompts and Patterns as First-Class Artifacts

Don’t throw away your best prompts. Save them, share them, refine them. GenDD teams create prompt libraries just like they maintain shared code modules or UI patterns.

Helpful habits:

- Version and document your best prompts

- Review and tag failed prompts with feedback

- Build internal resources that others can learn from

This turns personal experimentation into team-wide acceleration.

4. Think Like a System Designer

At the highest level, GenDD developers treat AI outputs as part of the system’s architecture, factoring in accuracy, explainability, and performance at scale. That requires architecture thinking, model awareness, and an eye for risk.

To grow here:

- Learn how models make decisions—and where they fail

- Build guardrails for AI safety and output validation

- Treat LLMs and agents as architectural components, not just assistants

Ready to build the GenDD way?

HatchWorks helps teams adopt Generative-Driven Development with confidence, clarity, and speed. Whether you’re exploring AI-augmented workflows or ready to scale a full AI-native development team, we offer:

- Hands-on workshops to upskill your team in prompting, orchestration, and multi-agent workflows

- Custom GenDD playbooks tailored to your tools, architecture, and delivery model

- AI development environments preconfigured with tools like Cursor, Claude, and LangChain

- Strategic support to help you roll out GenDD without disrupting delivery

We don’t just talk about GenDD; we use it every day. And we can help you do the same.