Generative-Driven Development (GenDD) is our structured approach to building with AI. It helps us ship faster, solve harder problems, and avoid falling into the trap of vibe coding.

We’re sharing our process over a series of articles so your team can skip the trial and error we’ve gone through to perfect it. In this piece, we cover the AI tools that make GenDD possible.

You’ll get:

- The AI tools we rely on across real client builds

- Role-based recommendations for product managers, designers, engineers, QA, and data teams

- Prompt examples and tips to apply each tool effectively

- Advice for building your own GenDD-ready stack and how we can help

The GenDD AI Developer Tech Stack

When it comes to GenDD, success doesn’t come from pulling every AI tool out of the proverbial toolbox. It comes from knowing which tools make you faster without compromising quality and deploying them accordingly.

Below is the stack we’ve leaned on across real client builds. It’s optimized to support code generation, AI-powered orchestration, and team-wide acceleration across the software development lifecycle.

(Keep in mind, these are just the tools we reach for first. There are loads more listed by role in a later section.)

Our GenDD Tech Stack at a Glance:

| Tool | Category | Primary Use |

|---|---|---|

|

Cursor

|

AI IDE

|

Full-repo code editing, debugging, refactoring, and automated documentation with context-aware code suggestions

|

|

GitHub Copilot

|

AI Code Assistant

|

Inline code snippets, boilerplate generation, and intelligent code assistance in Visual Studio Code

|

|

Claude Code

|

AI Code Assistant

|

Contextual, high-quality code generation, refinement, and debugging suggestions across multiple languages

|

|

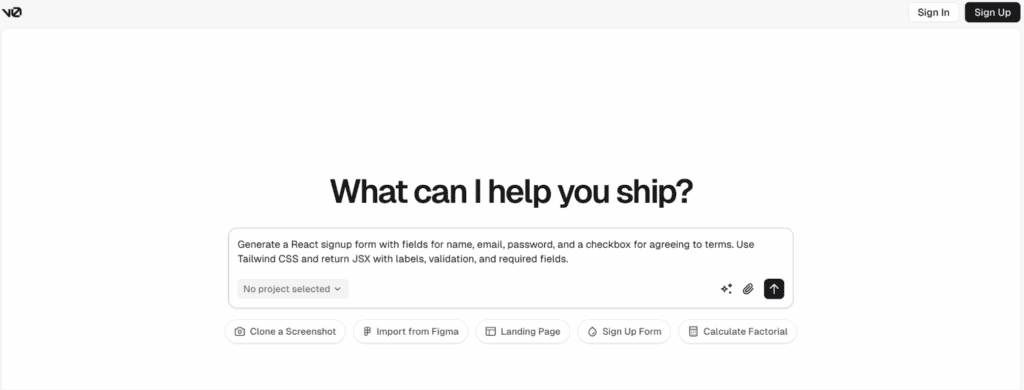

v0.dev

|

UI Scaffolding

|

Generate UI components from natural language descriptions; great for React code scaffolding

|

|

ChatGPT

|

Prompting & Research

|

User stories, acceptance criteria, UX copy, documentation, and natural language inputs

|

|

n8n

|

Workflow & Agent Builder

|

Low-code automation and AI agent orchestration across multiple APIs and tools

|

|

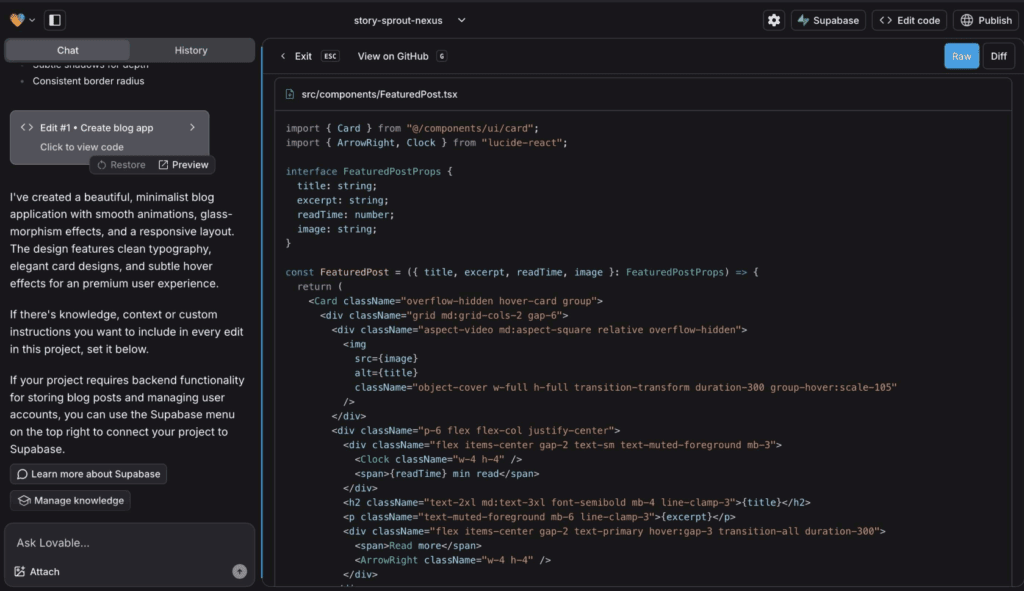

Lovable

|

AI Prototyping

|

Rapidly creating interactive AI-powered prototypes and POCs for user validation and testing

|

|

Gemini

|

Agent Platform

|

Context-aware content generation, email workflows, and lead qualification tasks

|

|

Roo Code

|

Autonomous Coding Agent Plugins

|

Autonomous, role‑mode AI assistant that reads/writes code, runs commands, interacts with browser

|

|

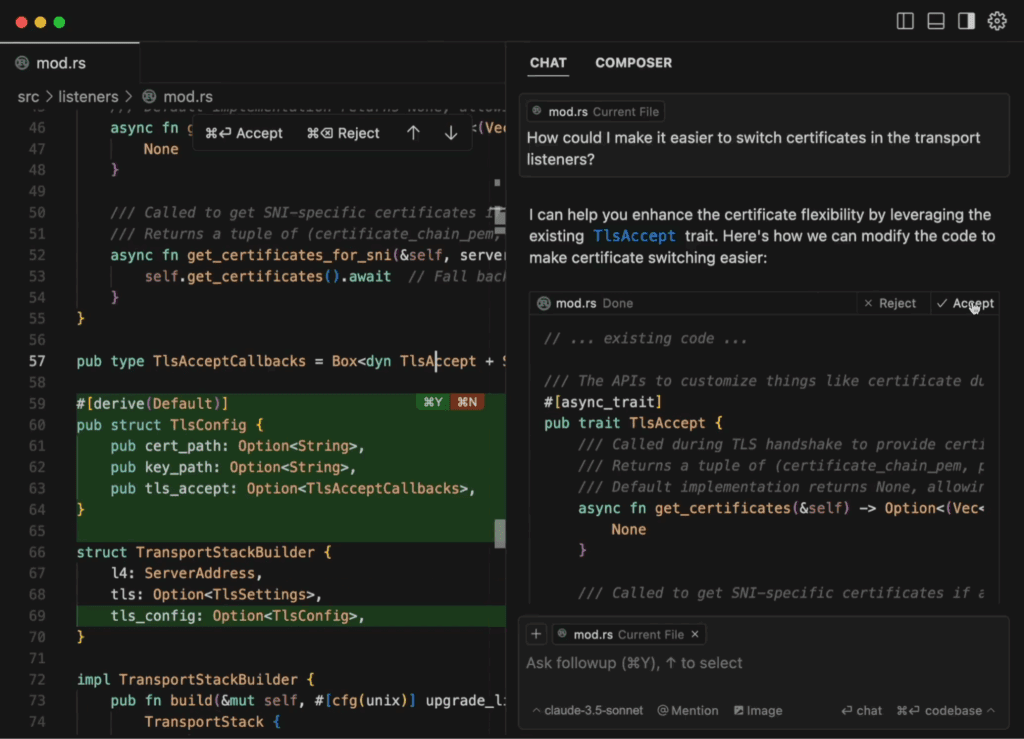

Cline

|

Autonomous Coding Agent Plugins

|

Open‑source autonomous coding agent with planning/execution modes, terminal and browser integration

|

Below, we’ve broken them into three key groups, based on how they support our GenDD workflow.

Code Editors and AI Developer Tools

Cursor is our go-to integrated development environment (IDE).

It supports full-repo code editing, applies project rules, and allows you to write code, refactor, and generate documentation without losing context. Cursor also works across multiple programming languages, including JavaScript, Python code, and TypeScript.

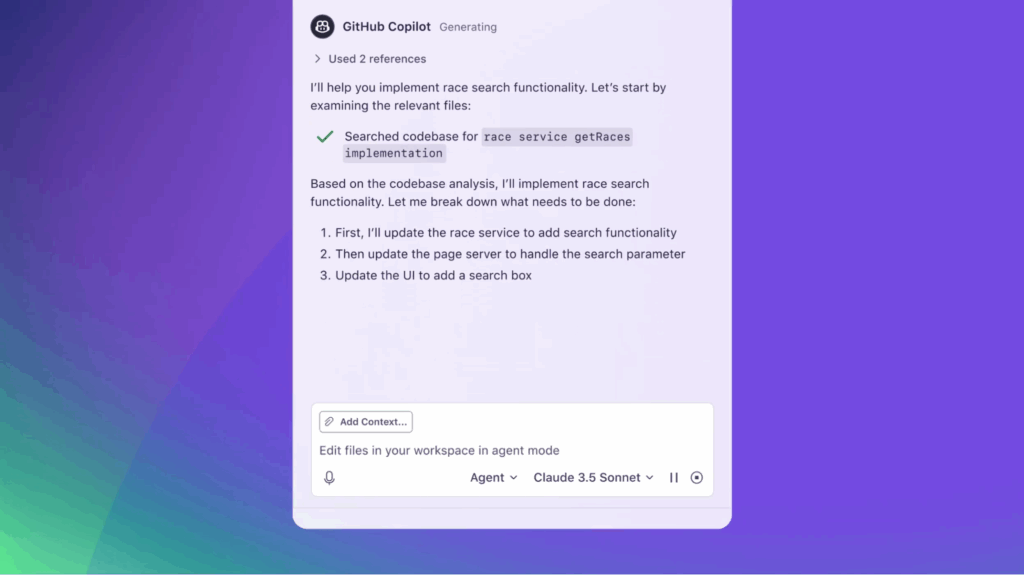

For lighter tasks, we still use GitHub Copilot or Claude Code for inline code completion and boilerplate generation (particularly useful in Visual Studio Code). They’re fast, low-friction, and great for getting unstuck mid-sprint.

We also often use v0.dev and Lovable to scaffold UI components directly from natural language prompts. Both export ready-to-use React code and speed up early prototyping without waiting on design handoffs.

🔦 GenDD Spotlight: How Josue Alpizar Uses Cursor to Debug, Document, and Deliver Faster

“Cursor is my daily driver for everything from generating tests to debugging tricky issues. It helps me document features clearly for both technical and non-technical stakeholders, and it’s especially useful for troubleshooting test failures—just drop in the terminal output and it explains what’s going wrong. It’s like having a very experienced partner who never gets tired and has a fresh mind.”

— Josue Alpizar, Senior Solutions Architect at HatchWorks AI

Prompting and Research Tools

We use ChatGPT to shape early product thinking and contribute to the development process with rapid research, planning, and even code documentation. Combining ChatGPT’s Deep Research functionality with state-of-the-art search tools like Perplexity means we can perform market validation faster than ever before.

From there, it’s especially powerful when used to create user stories, generate code descriptions, or summarize technical inputs, all from natural language descriptions.

This helps product managers, designers, and developers get aligned faster and sets the stage for better AI outputs downstream.

ChatGPT also helps teams write prompts for other AI tools, generate acceptance criteria, and align cross-functional priorities faster. We often use it to compare existing code patterns, explore improvements, or simulate edge case behaviors.

🔦 GenDD spotlight: How Luis Villalobos uses ChatGPT to write code summaries, generate code documentation, and accelerate PRD drafts during early planning.

“I use ChatGPT to accelerate code reviews, quickly understand unfamiliar codebases, and plan more effectively with engineering teams. It’s invaluable for AI Labs experimentation and feature delivery, letting me iterate faster and stay aligned with the team. During early planning or when reviewing complex pull requests, I use it to generate clear summaries and documentation. This not only speeds up my workflow but also enables deeper, 1:1-level technical conversations with engineers and clients who need context or clarification—turning high-level ideas into structured, actionable input.”

— Luis Villalobos, Labs Lead at HatchWorks AI

Workflow and Agent Builders

As your build matures, workflow tools bring orchestration and integration into the fold. Platforms like n8n provide low-code automation that connects AI models, code extensions, APIs, and conditionals into scalable flows. Teams can quickly build onboarding flows, reporting pipelines, and internal tools without heavy engineering lift.

For more developer-oriented orchestration, frameworks like Apache Airflow offer robust, production-grade scheduling and pipeline management across your data and AI stack.

When you’re ready to extend beyond workflows and into agent-based architectures, frameworks like LangChain, LangGraph, and Haystack enable richer orchestration of LLM agents. These tools support task planning, memory management, and multi-agent collaboration, making them ideal for building AI-powered agent workflows (including Retrieval-Augmented Generation, or RAG).

For content-heavy or customer-facing tasks, Gemini provides fast, generative outputs like lead qualification messages or personalized emails. It integrates seamlessly into existing Google environments and can bring agentic intelligence directly into everyday operations.

🔦 GenDD spotlight: How Brayan Vargas uses n8n to create a code generation agent that connects natural language requests to executable Python code, then pipes results into a version-controlled repo.

“We used n8n to orchestrate a full reporting pipeline—logging tasks, analyzing content with LLMs, and generating polished PDF reports. Cursor helped define the schema and refine the HTML structure, while n8n handled the automation, routing data through BigQuery and into API-based PDF generation. It’s become a powerful agentic workflow that turns raw input into client-ready deliverables.”

— Brayan Vargas, Software Engineer at HatchWorks AI

Remember: You’re the Orchestrator, AI is the Executor

GenDD is not vibe coding. We don’t paste prompts into a chatbot and hope something usable comes out. If anything, GenDD’s structure prevents the mistakes vibe coders make:

- Writing prompts with zero context and expecting perfect output

- Asking AI to “build the app” without breaking the work into manageable parts

- Trusting the first draft of a solution without checking the logic

- Letting the tool lead, instead of setting a clear direction from the start

It’s the GenDD structure that keeps humans firmly in control while treating AI as a talented co-worker who comes to projects with zero context.

If you want good results, you have to give that AI co-worker the full picture. That means sharing the architecture, naming the constraints, defining success, and building in feedback. Otherwise, it will guess. And it’ll guess wrong in ways that cost you time, quality, or trust.
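One way to make "the full picture" concrete is to build every prompt from the same named parts and refuse to send it when one is missing. The sketch below is a hypothetical helper, not a feature of any tool mentioned here. The class name `TaskBrief` and its fields are our own assumptions, chosen to mirror the four items above: architecture, constraints, success criteria, and feedback.

```python
from dataclasses import dataclass, field


@dataclass
class TaskBrief:
    """Hypothetical container for the context an AI co-worker needs."""
    task: str
    architecture: str
    constraints: list[str] = field(default_factory=list)
    success_criteria: list[str] = field(default_factory=list)
    feedback_plan: str = "Ask clarifying questions before writing code."

    def missing_context(self) -> list[str]:
        """Return the names of the sections that are still empty."""
        missing = []
        if not self.architecture.strip():
            missing.append("architecture")
        if not self.constraints:
            missing.append("constraints")
        if not self.success_criteria:
            missing.append("success_criteria")
        return missing

    def to_prompt(self) -> str:
        """Render the brief as a prompt; refuse if context is missing."""
        missing = self.missing_context()
        if missing:
            raise ValueError(f"Brief is missing context: {', '.join(missing)}")
        lines = [
            f"Task: {self.task}",
            f"Architecture: {self.architecture}",
            "Constraints:",
            *[f"- {c}" for c in self.constraints],
            "Definition of done:",
            *[f"- {s}" for s in self.success_criteria],
            f"Feedback: {self.feedback_plan}",
        ]
        return "\n".join(lines)
```

A brief that only says "build the app" fails fast with a `ValueError` instead of producing a guess, which is the point: the gap surfaces before the model sees the prompt.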

Your role is to think through the problem, set the boundaries, and direct the AI toward a useful outcome. Tools like Cursor, ChatGPT, and LangChain will handle the execution. But they only work well when they’re grounded in context and guided by intention.

“Garbage in, garbage out” still applies. But when you bring the right inputs, clear direction, scoped tasks, and relevant data, you turn AI into a powerful extension of your team.

Structure over vibes. Orchestration over improvisation.

That’s the GenDD difference.

AI Developer Tools by Role: What GenDD Developer Teams Actually Use

Below, we’ve broken down the tools we use most in each function, along with how they actually support faster, smarter development when applied with structure.

Product Managers and Their GenDD Tools

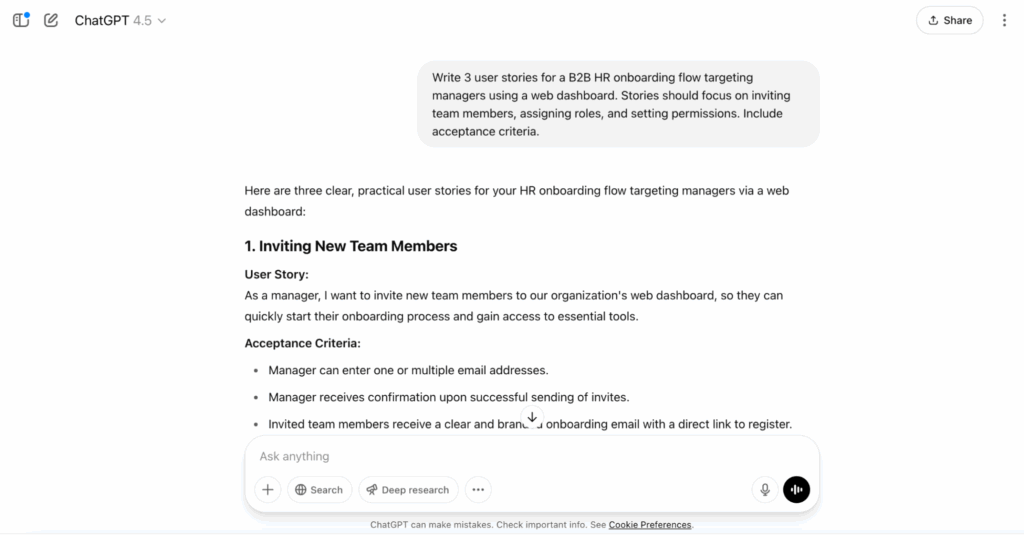

ChatGPT (Prompting & Product Structuring)

ChatGPT acts as an on-demand assistant for product managers—helping them move faster from business goals to actionable plans. It’s not there to replace judgment, but to speed up the parts that used to take hours: story writing, acceptance criteria, persona outlining, and even rough PRD drafts.

How we use it in GenDD: PMs use ChatGPT during the earliest phases of a project to translate vision into dev-ready input. That includes shaping backlog items before sprint planning or generating first-pass content to align teams faster.

Try this prompt → “Write 3 user stories for a B2B HR onboarding flow targeting managers using a web dashboard. Stories should focus on inviting team members, assigning roles, and setting permissions. Use the standard user story format: As a [persona], I want to [goal], so that [benefit]. Include acceptance criteria for each story.”

Example output:

User story: As a hiring manager, I want to invite new employees to the onboarding platform so that they can complete required training before their start date.

Acceptance criteria:

- User can input employee name and email.

- Invitation email is sent with a secure sign-up link.

- User receives confirmation once the employee has accepted the invitation.

What to watch for: It still needs strong direction. Without platform context or business goals, outputs will default to generic SaaS filler.

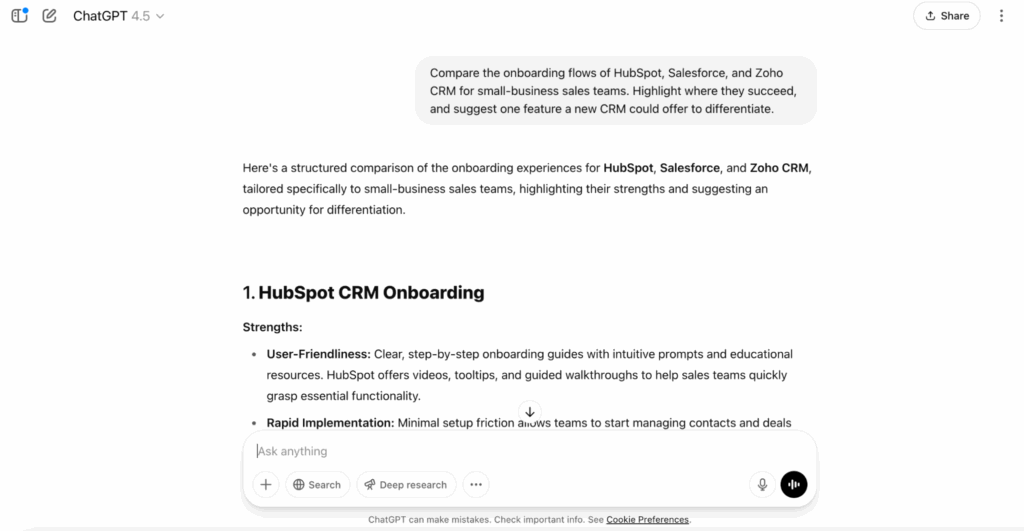

ChatGPT (Competitive Research + Strategic Input)

Beyond tickets and stories, ChatGPT is surprisingly helpful for market-level thinking. It pulls together trends, summarizes competitor flows, and offers structural comparisons you can refine further.

How we use it in GenDD: When defining product differentiators, we’ll use ChatGPT to scan competing workflows and distill areas of opportunity. It gives PMs a starting point that’s faster than manual research—and often just as insightful.

Try this prompt → “Enable Deep Research, then compare the onboarding flows of HubSpot, Salesforce, and Zoho CRM for small-business sales teams. Highlight where they succeed, and suggest one feature a new CRM could offer to differentiate.”

What to watch for: Details will vary. Double-check outputs against live products before sharing insights with stakeholders.

Designers and Their GenDD Tools

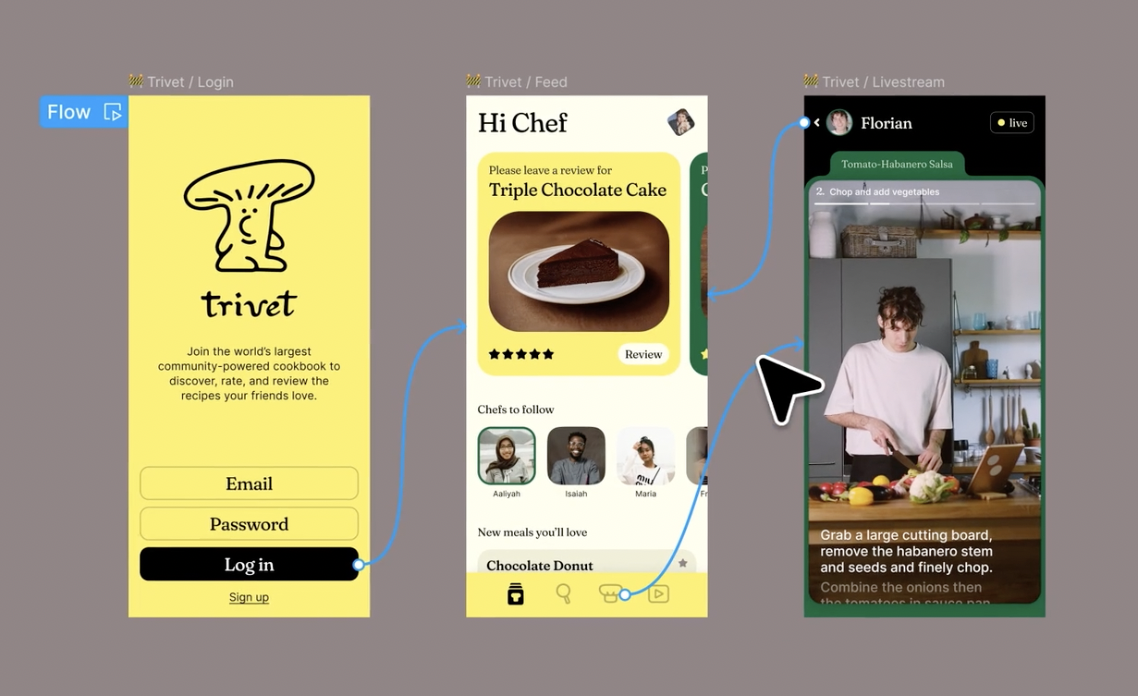

Figma AI (AI-Augmented Prototyping)

Figma AI enhances what you’ve already designed. It can refactor layouts, generate responsive variants, and surface potential usability issues. It’s less about generating from scratch, more about leveling up fast.

How we use it in GenDD: Designers use Figma AI once the core screen is built. It helps test mobile variants, speed up layout tweaks, and get to production-ready designs with fewer cycles.

Try this prompt → “Refactor this dashboard layout for better spacing and alignment. Then generate a mobile version that maintains usability across breakpoints.”

What to watch for: AI suggestions are useful, but still need to be checked against your brand system and accessibility standards.

Lovable (AI UI Builder)

Lovable turns structured prompts into clean, visual UI mockups. It’s great for getting ideas on the table before design sprints kick off. Now, it’s not really meant for handoff, but it’s fast, visual, and sharp enough to communicate real intent.

How we use it in GenDD: Lovable comes in during discovery, pre-sales, or rapid ideation. We use it to prototype screens or flows we want feedback on before the design system comes into play.

Try this prompt → “Design a responsive patient login screen for a healthcare portal. Include fields for email and password, a forgot password link, and a call-to-action for new users. Prioritize accessibility and mobile-first design.”

What to watch for: Outputs look polished but don’t follow your design system or accessibility rules unless you spell them out.

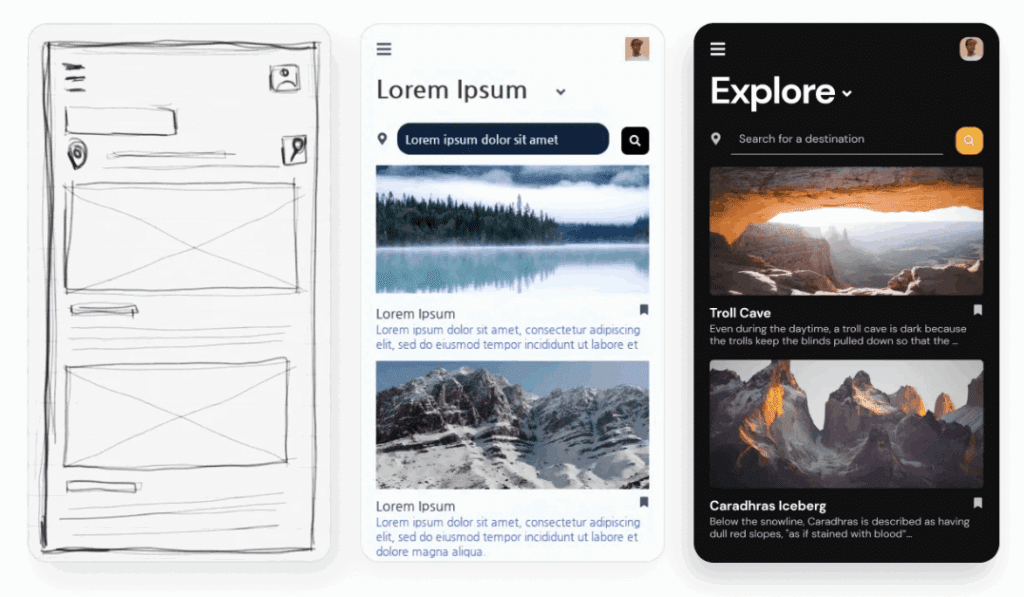

Uizard (Rapid Wireframing)

Uizard sits between sketching and design, turning ideas, text prompts, or even napkin drawings into working wireframes. It’s fast, flexible, and great for teams who need feedback before investing in polish.

How we use it in GenDD: We use Uizard to mock up early user flows, especially for internal tools or concept testing. It helps cross-functional teams visualize interfaces without getting stuck in Figma too early.

Try this prompt → “Create wireframes for a multi-step healthcare intake form. Include fields for name, date of birth, insurance provider, and emergency contact. The form should work on desktop and mobile.”

What to watch for: You’ll still need to map UX patterns and edge cases because Uizard gives you the structure, not a spec.

v0.dev (Code-Based UI Scaffolding)

v0.dev bridges the gap between design and dev. It turns plain-English prompts into working React components, which is ideal for fast, functional prototypes that don’t just look good but run.

How we use it in GenDD: We use v0 during early frontend development when we want something real, not speculative. It’s especially helpful for building out standard UI patterns like forms, dashboards, and user flows.

Try this prompt → “Generate a React signup form with fields for name, email, password, and a checkbox for agreeing to terms. Use Tailwind CSS and return JSX with labels, validation, and required fields.”

What to watch for: The code is solid but not context-aware. You’ll need to adjust structure, styling, or logic to match your architecture.

Developers and Their GenDD Tools

Cursor (AI-First IDE)

As we mentioned before, Cursor is our go-to AI IDE. It understands your full codebase, supports project-wide refactoring, and lets you prompt in real time without losing context. Unlike plugin-based tools, Cursor is built for AI-native workflows from the ground up.

How we use it in GenDD: Used across all phases—scaffolding, debugging, refactoring, and documentation. Cursor keeps our team in flow while automating the execution-heavy work and assisting with complex coding tasks like multi-module refactors or deep dependency tracing.

Try this prompt → “Generate a new Express route in TypeScript that handles POST requests to /register. Validate input using Zod, call our existing createUser service, and return a typed success response. Match the project’s folder structure.”

What to watch for: Needs strong guidance from humans to match architectural patterns and reuse logic from across the repo.

GitHub Copilot (Inline Code Assistant)

Copilot fills in code as you write, suggesting functions, tests, and snippets based on context. It’s not repo-aware like Cursor, but it’s great for filling in the blanks quickly.

How we use it in GenDD: Ideal for boilerplate, repetitive logic, and quick iteration inside VS Code. As a VS Code extension, it integrates seamlessly with most developers’ existing workflows.

Try this prompt (as an in-code comment) → // Write a function that validates corporate email domains and rejects public ones (e.g. gmail, yahoo).

What to watch for: Suggestions are fast, but they don’t understand system-wide context. You’ll still need to edit for architecture and logic.
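To give a feel for what an inline assistant might produce from that comment, here is a minimal Python sketch. It is our own illustration rather than actual Copilot output. The `PUBLIC_DOMAINS` set is a short assumed sample, and the regular expression is deliberately simple, not a full email validator.

```python
import re

# Assumed sample of public providers; a real list would be longer and maintained separately.
PUBLIC_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}

# Deliberately simple pattern: one "@", no whitespace, and a dot in the domain.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@([^@\s]+\.[^@\s]+)$")


def is_corporate_email(address: str) -> bool:
    """Return True for a well-formed address whose domain is not a known public provider."""
    match = EMAIL_PATTERN.match(address.strip())
    if match is None:
        return False
    domain = match.group(1).lower()
    return domain not in PUBLIC_DOMAINS
```

This is exactly the kind of suggestion that still needs a human pass: the function has no idea whether your system already keeps a blocklist elsewhere, or how it should treat subdomains.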

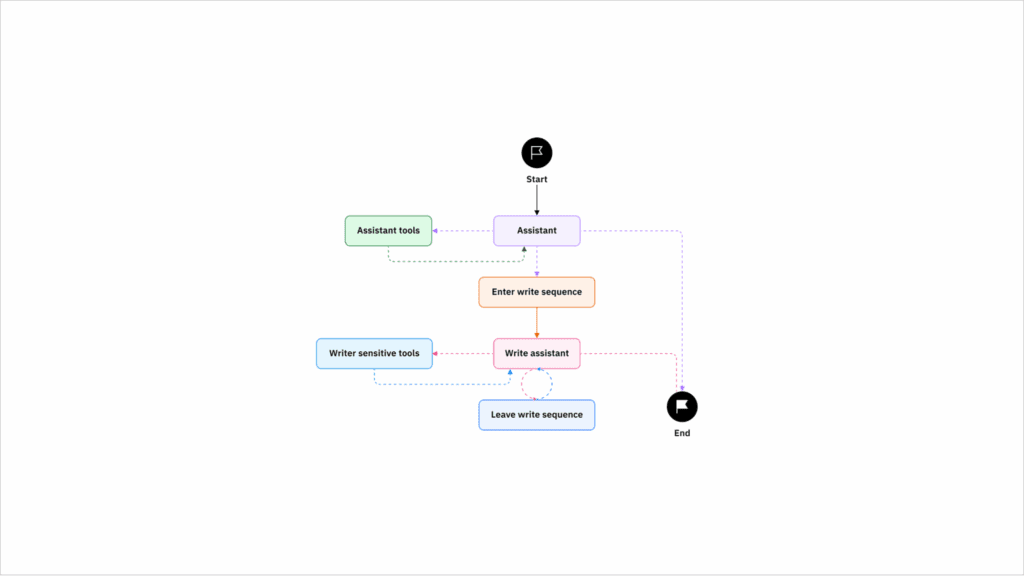

LangGraph (Agentic Dev Framework)

LangGraph helps developers build structured, multi-step agent workflows by combining stateful execution with memory, tools, and external APIs. It’s ideal for building AI copilots, dynamic automations, or internal developer agents with defined flow control.

How we use it in GenDD: We use LangGraph to create agents that summarize docs, call APIs, and hand off tasks between steps—all while maintaining memory and state across the workflow. While LangGraph is traditionally an ML engineering framework, in GenDD we leverage it to build the agents and orchestration logic that extend AI’s role beyond code generation into end-to-end workflows. It gives developers fine-grained control over how LLMs behave throughout the process.

Try this setup → Create an agent that extracts text from a PDF, summarizes it using GPT-4, and sends the result to a Notion database via API. Use LangGraph to manage state and transitions between each step in the workflow.

What to watch for: It’s powerful but requires clear definition of states and transitions. Best suited for developers who are comfortable designing agent workflows and managing orchestration logic.
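To show what "clear definition of states and transitions" means in practice, here is a plain-Python sketch of the extract, summarize, publish flow. It does not use the LangGraph API. The step functions are stubs, and every name in it (`run_workflow`, `NODES`, `TRANSITIONS`, `pdf_path`) is a hypothetical stand-in for the real PDF parser, LLM call, and Notion request.

```python
from typing import Callable, Optional

State = dict  # shared workflow state passed between steps


def extract_text(state: State) -> State:
    # Stub: a real step would parse the PDF at state["pdf_path"].
    return {**state, "text": f"contents of {state['pdf_path']}"}


def summarize(state: State) -> State:
    # Stub: a real step would call an LLM here.
    return {**state, "summary": state["text"][:40]}


def publish(state: State) -> State:
    # Stub: a real step would send the summary to an external API.
    return {**state, "published": True}


# Each state names the function to run and the state that follows it.
NODES: dict[str, Callable[[State], State]] = {
    "extract": extract_text,
    "summarize": summarize,
    "publish": publish,
}
TRANSITIONS: dict[str, Optional[str]] = {
    "extract": "summarize",
    "summarize": "publish",
    "publish": None,  # terminal state
}


def run_workflow(initial: State, start: str = "extract") -> State:
    """Walk the graph from `start` until a terminal state, recording the path taken."""
    state, current = {**initial, "history": []}, start
    while current is not None:
        state = NODES[current](state)
        state["history"].append(current)
        current = TRANSITIONS[current]
    return state
```

Writing the transition table down first, before any model call exists, makes it obvious where a branch or a retry would go once the workflow moves into a real framework.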

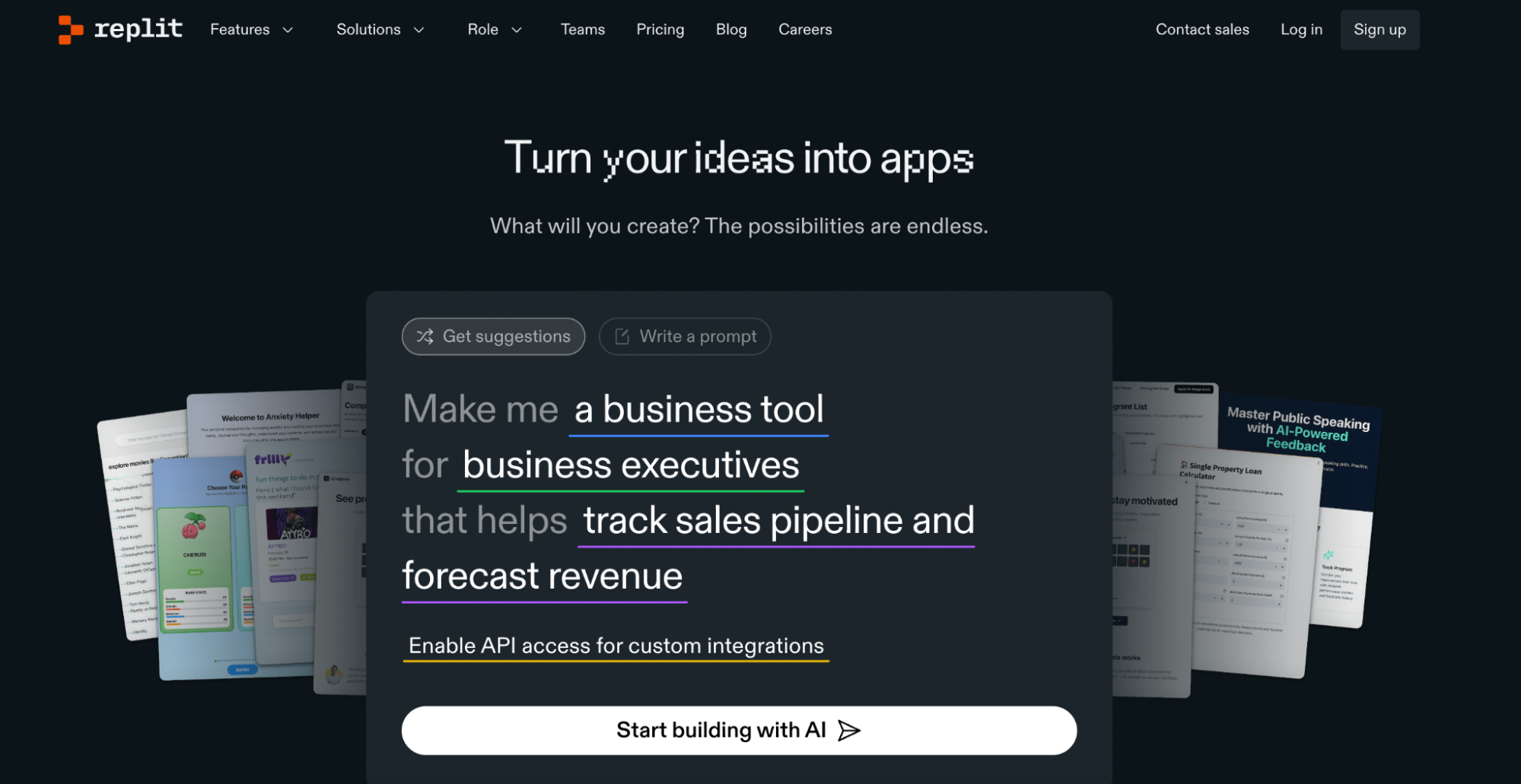

Replit (Browser-Based IDE)

Replit is a browser-based IDE that includes built-in AI assistance, instant deployment, and team-friendly collaboration. It’s ideal for early builds, demos, or quick MVPs.

How we use it in GenDD: Used during experimentation or early concepting—especially when we need to deploy something quickly or test AI functionality in a sandboxed environment.

Try this prompt → “Build a Python Flask app with a single /upload route that accepts a file, validates it as a PDF, and returns the number of pages.”

What to watch for: Not ideal for scaling or complex systems, but it’s great for prototyping with instant feedback.

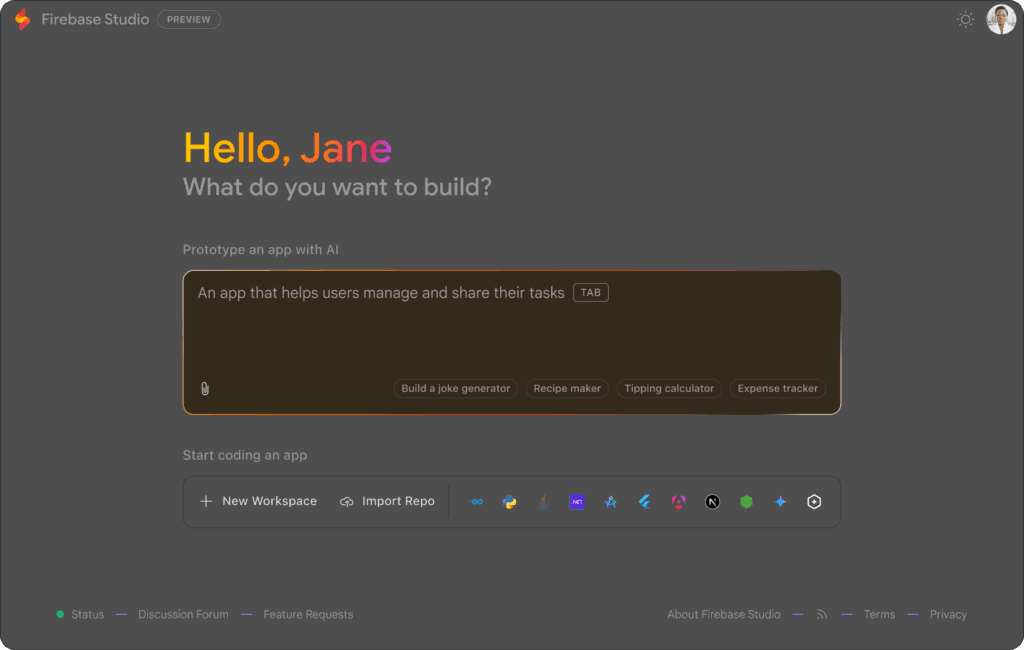

Firebase Studio (AI App Builder)

Firebase Studio blends AI-generated frontend logic with Firebase’s backend stack (Firestore, Auth, and Functions) into an all-in-one app building experience.

How we use it in GenDD: Useful for internal tools or prototypes where real backend functionality is needed without wiring everything from scratch.

Try this prompt → “Create a contact manager app with a form to add contacts (name, phone, email) and store them in Firestore. Include basic validation and list view.”

What to watch for: Strong for CRUD apps and quick full-stack demos, but not suited for complex UI or enterprise-grade architecture.

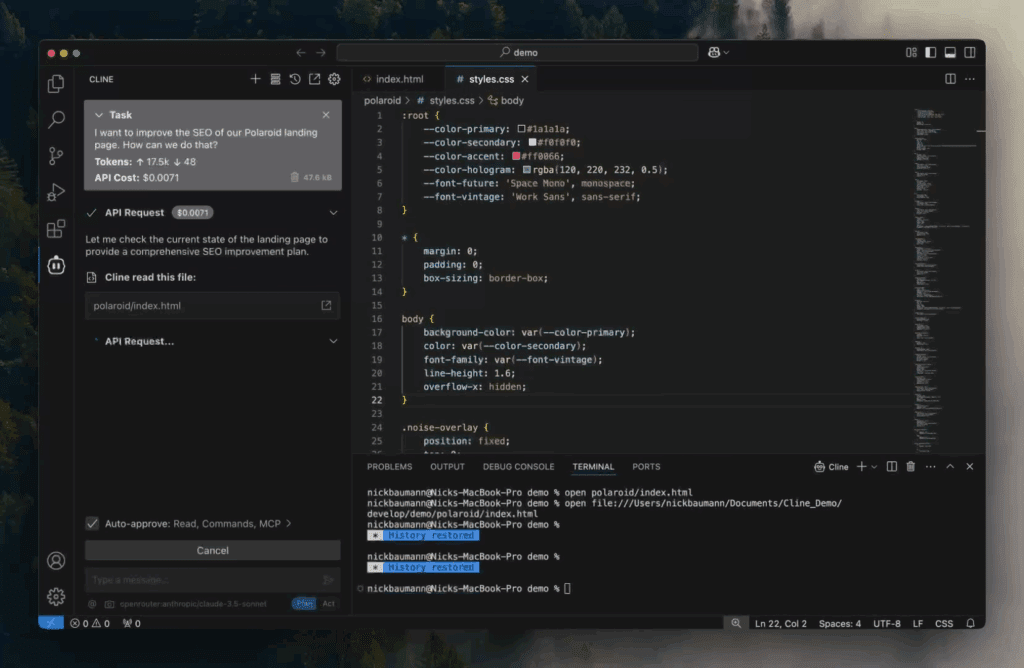

Cline (Multi-Agent Coding Environment)

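Cline is an open-source autonomous coding agent that runs as an editor extension rather than a standalone IDE. With separate planning and execution modes plus terminal and browser integration, it gives you a flexible base for coordinating agent roles around your codebase.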

How we use it in GenDD: Cline is used when we’re building or experimenting with dev agents themselves. It’s a flexible way to simulate multi-agent collaboration and customize workflows deeply.

Try this setup → Assign an “architect” agent to plan module structure, a “coder” to write it, and a “reviewer” to comment on improvements—all within the same flow.

What to watch for: Not for beginners. Best used by teams building or refining their own AI agents or dev toolchains.

Roo Code (Autonomous Coding Agent Plugins – Built on Cline)

Roo Code takes Cline’s foundation and adds enterprise readiness—security, collaboration, and performance features that support scaling GenDD across teams.

How we use it in GenDD: We recommend Roo Code for clients looking to roll out AI-native dev environments across engineering orgs. It supports everything from agent management to CI/CD integration.

Try this use case → Build a multi-agent dev workflow with structured task routing, secure tokenized access to vector DBs, and GitHub Actions integration.

What to watch for: It’s still evolving. Best for teams with an AI infrastructure strategy already in place.

Haystack (Agentic Dev Framework)

Haystack is an open-source framework for building production-ready pipelines that combine LLMs, document retrieval, and external tools. It’s ideal for creating AI-powered search systems, question-answering workflows, or custom RAG (Retrieval-Augmented Generation) applications.

How we use it in GenDD: We use Haystack to design robust pipelines where LLMs interact with structured data sources and APIs. This allows developers to quickly build solutions like knowledge assistants, search-based agents, or multi-step workflows tied to enterprise data.

Try this setup → Build a RAG workflow that retrieves relevant documents from a vector database, summarizes them with GPT-4, and delivers an answer to the user. Use Haystack to manage the retrieval, ranking, and orchestration layers.

What to watch for: Haystack is powerful but requires careful pipeline design. It works best for developers comfortable with retrieval systems, orchestration logic, and integrating LLMs with external data sources.

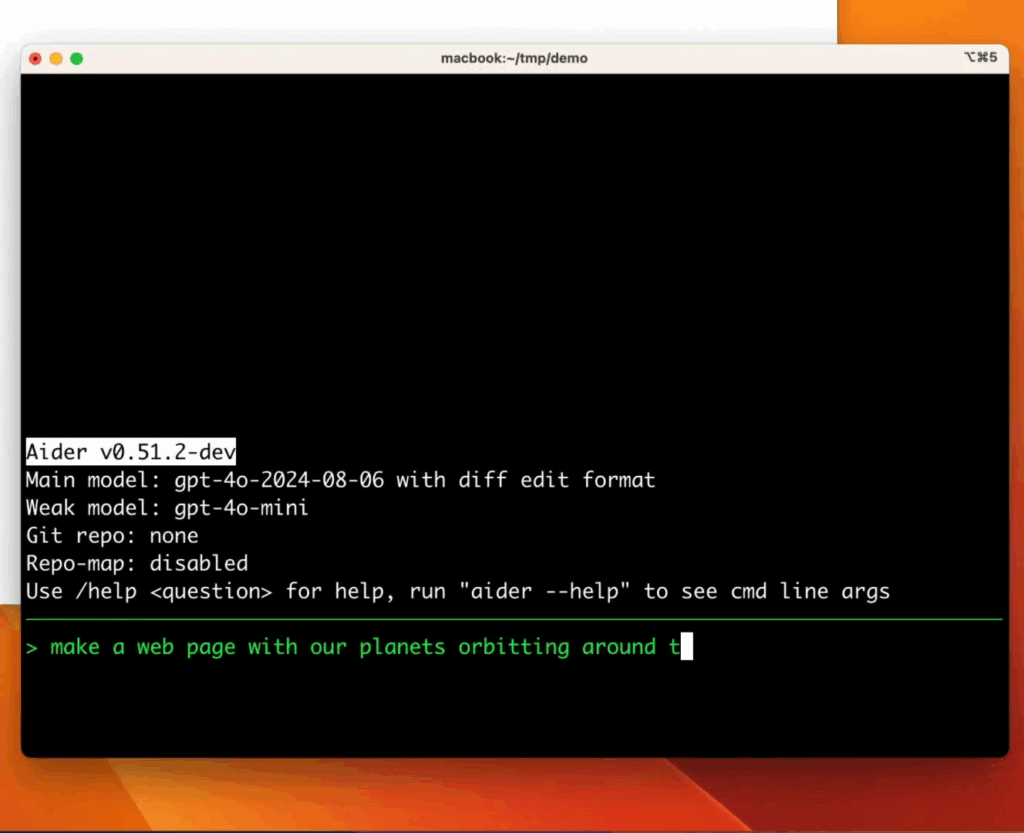

Aider (Terminal-Based AI Pair Programmer)

Aider works in the terminal and edits your actual codebase through conversation. It’s lightweight, fast, and perfect for CLI-heavy workflows.

How we use it in GenDD: When we’re working in minimal environments like Docker containers, servers, or small microservices, we use Aider for fast, local edits without opening a full IDE.

Try this prompt → “Add input validation to register.py using Pydantic. The fields are name, email, and password. Email must be valid, password at least 8 characters.”

What to watch for: There’s no GUI safety net. Be specific, and commit frequently.

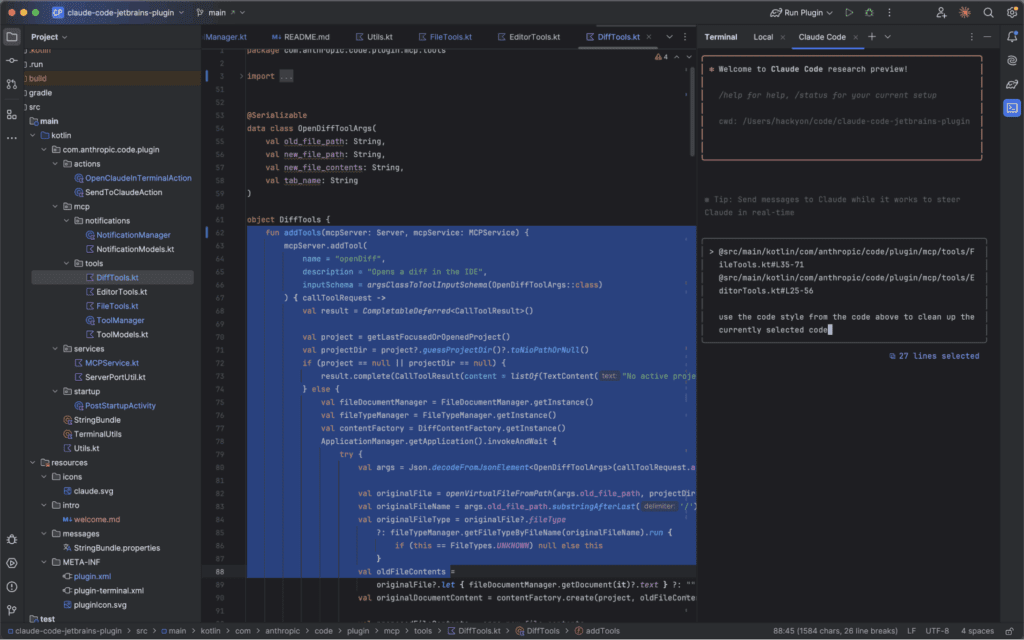

Claude Code (AI Code Assistant)

Claude Code is a versatile AI code assistant capable of understanding complex codebases and providing context-aware suggestions. It can help plan, write, debug, and refactor code across multiple languages, making it ideal for both day-to-day development and larger feature work.

How we use it in GenDD: We use Claude Code when we need high-quality, contextual coding support. It’s particularly useful for tasks like debugging tricky logic, refactoring legacy code, or generating tests during fast-moving build phases.

Try this prompt → “Analyze this existing Express.js API and refactor it to use a service layer. Then add input validation using Zod and create unit tests for the updated endpoints.”

What to watch for: Claude Code produces strong outputs but still requires review and integration into your architecture. It’s best leveraged as a collaborative partner, not a fully autonomous coder.

QA Engineers and Their GenDD Tools

Qodo (Reasoning-Based Test Generation)

Qodo brings structured reasoning to test writing. It analyzes your codebase and proposes intelligent unit tests, assertions, and edge cases based on actual logic. Previously known as CodiumAI, it’s now the broader platform housing tools like TestGPT.

How we use it in GenDD: We use Qodo during implementation to expand test coverage based on actual code behavior. It helps surface what’s missing and propose smarter validations across edge cases.

Try this prompt → “Analyze this function that calculates loan eligibility and generate unit tests for boundary conditions, including age, income, and debt ratio thresholds.”

What to watch for: Great for logic-heavy modules, but doesn’t replace judgment around product risks or test priorities.
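For reference, boundary-condition tests for a function like this tend to look like the sketch below. It is our own illustration, not Qodo output. The `is_eligible` rule and all of its thresholds (age 18 to 75, income of at least 30,000, debt ratio of at most 0.40) are assumptions made up for the example.

```python
MIN_AGE, MAX_AGE = 18, 75  # assumed thresholds, for illustration only
MIN_INCOME = 30_000
MAX_DEBT_RATIO = 0.40


def is_eligible(age: int, annual_income: float, debt_ratio: float) -> bool:
    """Hypothetical eligibility rule: every threshold is inclusive."""
    return (
        MIN_AGE <= age <= MAX_AGE
        and annual_income >= MIN_INCOME
        and debt_ratio <= MAX_DEBT_RATIO
    )


# Boundary cases: one value on each side of every threshold.
BOUNDARY_CASES = [
    ((17, 50_000, 0.20), False),  # just below minimum age
    ((18, 50_000, 0.20), True),   # exactly minimum age
    ((75, 50_000, 0.20), True),   # exactly maximum age
    ((76, 50_000, 0.20), False),  # just above maximum age
    ((30, 29_999, 0.20), False),  # just below minimum income
    ((30, 30_000, 0.20), True),   # exactly minimum income
    ((30, 50_000, 0.40), True),   # exactly maximum debt ratio
    ((30, 50_000, 0.41), False),  # just above maximum debt ratio
]


def run_boundary_tests() -> list[tuple]:
    """Return the cases whose actual result differs from the expected one."""
    return [
        (args, expected)
        for args, expected in BOUNDARY_CASES
        if is_eligible(*args) != expected
    ]
```

An empty list from `run_boundary_tests()` means every boundary behaves as specified. Deciding whether those are the right boundaries is still a product call.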

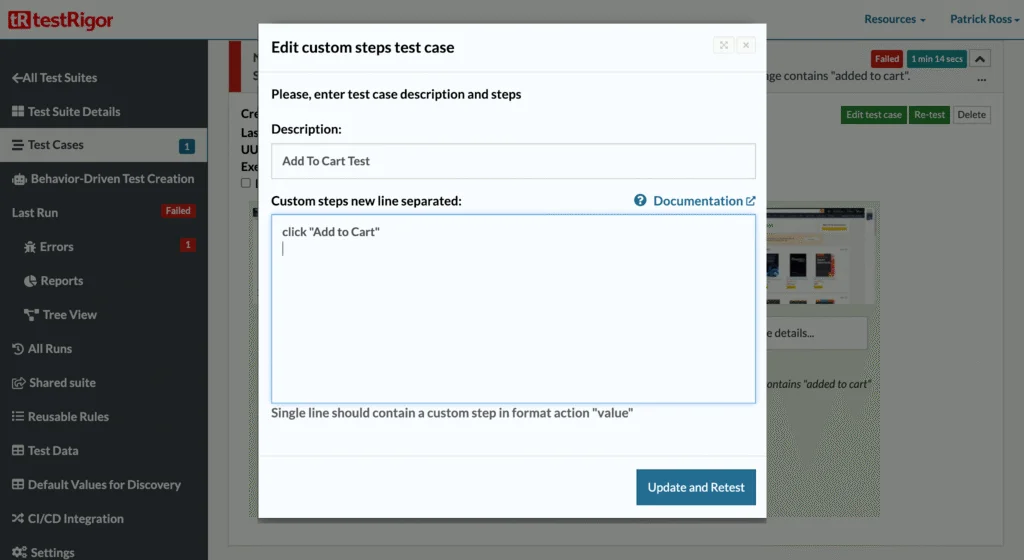

TestRigor (No-Code Test Writing)

TestRigor lets teams write end-to-end tests using plain language. It’s designed for QA engineers, PMs, or even support staff to create and maintain UI tests without digging into Selenium or Cypress.

How we use it in GenDD: We use TestRigor to cover flows like signups, logins, and payments, especially for client-facing apps.

Try this prompt → “Login as a returning user, go to the billing page, and verify that the saved credit card ends in 1234 and the ‘Update Payment’ button is visible.”

What to watch for: Best for happy paths. You’ll still want code-based tests for system-level or deeply conditional logic.

TestGPT (Self-Healing and Exploratory Testing)

TestGPT is a feature within Qodo. It goes a step further by proposing tests and adapting them when the code changes. It’s part test generator, part co-pilot for test maintenance.

How we use it in GenDD: We use TestGPT when working in unstable environments or fast-moving branches. This is especially helpful in early-stage products where UI and API shapes change frequently.

Try this prompt → “Review these test cases for our signup API and update them to match the new schema: email, password, firstName, lastName, referralCode (optional).”

What to watch for: It can miss edge cases unless you explicitly define constraints. Always review assertions before merging.
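When reviewing updated tests, it helps to have the schema written down as code you can check them against. The sketch below is a hypothetical validator for the five fields in the prompt. The function name and the rule that unknown fields are rejected are our assumptions; the real API may be more lenient.

```python
REQUIRED_FIELDS = ("email", "password", "firstName", "lastName")
OPTIONAL_FIELDS = ("referralCode",)


def validate_signup(payload: dict) -> list[str]:
    """Return a list of problems; an empty list means the payload matches the schema."""
    errors = []
    # Required fields must be present and be non-empty strings.
    for name in REQUIRED_FIELDS:
        value = payload.get(name)
        if not isinstance(value, str) or not value.strip():
            errors.append(f"{name} is required")
    # Optional fields may be absent, but must be strings when provided.
    for name in OPTIONAL_FIELDS:
        if name in payload and not isinstance(payload[name], str):
            errors.append(f"{name} must be a string when provided")
    # Assumption: fields outside the schema (e.g. from the old version) are rejected.
    unknown = sorted(set(payload) - set(REQUIRED_FIELDS) - set(OPTIONAL_FIELDS))
    errors.extend(f"{name} is not part of the schema" for name in unknown)
    return errors
```

The cases worth asserting by hand are the ones generated tests tend to skip: a payload without `referralCode`, one with it, and one that still carries a field from the old schema.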

Data Engineers and Their GenDD Tools

Databricks Genie + Databricks AI/BI Dashboards (AI Pipeline & Analytics Management)

Databricks Genie is an AI-powered interface that makes working with data in Databricks more intuitive, helping teams build, query, and manage pipelines using natural language. AI/BI Dashboards extend this capability, turning complex data into dynamic, visual insights without manual reporting.

How we use it in GenDD: We use Databricks Genie to streamline pipeline creation, data querying, and AI model experimentation, all from a conversational interface. Then, we use AI/BI Dashboards to monitor performance metrics, visualize model outputs, and communicate results to stakeholders in real time. This combination supports everything from embedding generation to prompt tuning in a controlled, auditable environment.

Try this workflow → Use Databricks Genie to build a pipeline that classifies customer support tickets by urgency. Then create an AI/BI Dashboard that tracks ticket volume, classification accuracy, and resolution times, updating automatically as new data flows in.

What to watch for: While these tools simplify pipelines and analytics, they still require solid data engineering and governance practices to avoid misalignment or inaccurate reporting.

Check out our resources on Databricks and data governance to get up to speed:

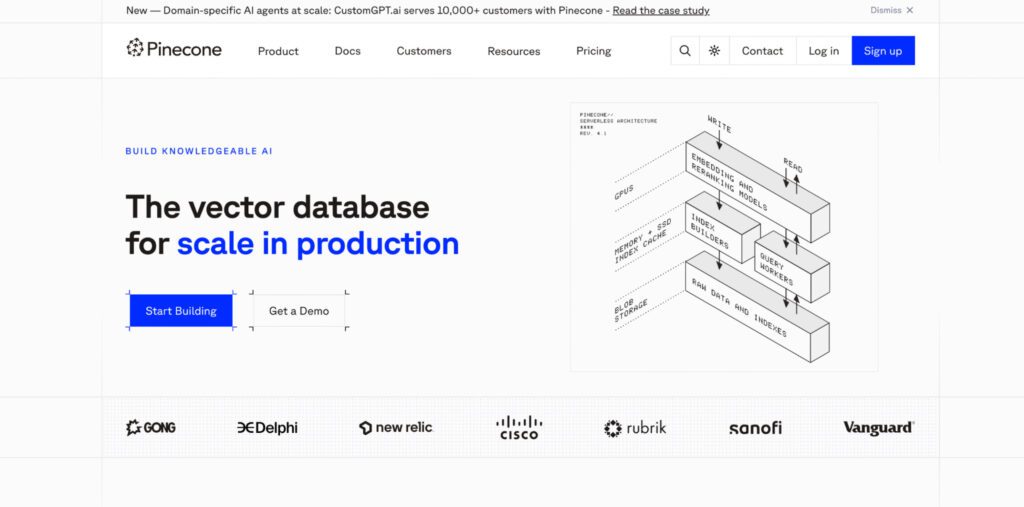

Pinecone (Vector Database for RAG)

Pinecone stores and indexes vector embeddings, making it easy to build Retrieval-Augmented Generation (RAG) systems that pull in relevant context before generating a response.

How we use it in GenDD: We use Pinecone to power search and question-answering features across support docs, policy data, or long-form text. It handles indexing, filtering, and similarity search without manual tuning.

Try this setup → Generate embeddings for your product documentation using OpenAI’s text-embedding model, store them in Pinecone, and create a search endpoint that retrieves the top 3 results per user query.

What to watch for: You still need a strategy for chunking, embedding granularity, and relevance tuning.
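If you want to see what a "top 3 results" query boils down to before wiring up a managed service, the core is a similarity ranking. The sketch below is an in-memory stand-in written with the standard library only; it is not the Pinecone client API. The three-dimensional vectors in `INDEX` are toy values we made up, where real embeddings would come from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: list[float], index: dict[str, list[float]], k: int = 3
) -> list[tuple[str, float]]:
    """Return the k document ids most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
INDEX = {
    "billing-faq": [0.9, 0.1, 0.0],
    "refund-policy": [0.8, 0.2, 0.1],
    "api-auth": [0.0, 0.1, 0.9],
    "sso-setup": [0.1, 0.0, 0.8],
}
```

Calling `top_k([1.0, 0.0, 0.0], INDEX)` ranks the two billing-related documents first. A vector database does the same job with approximate search, filtering, and persistence, so it holds up at sizes where this brute-force loop would not.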

FAISS / Weaviate (Open-Source Alternatives to Pinecone)

FAISS and Weaviate are popular vector DB options for teams who want to self-host or experiment without a managed service. They offer customizability and lower-cost scaling, at the price of more ops work.

How we use it in GenDD: When we need tighter control over deployment—such as in regulated environments—we use FAISS for fast, local vector search, or Weaviate when we want to pair metadata filtering with embedding search.

Try this use case → Use FAISS to store embeddings of legal contract clauses and return matches for user queries like “termination clause in SaaS agreement.”

What to watch for: Setup takes more time. Be prepared to handle scaling, persistence, and API integrations manually.

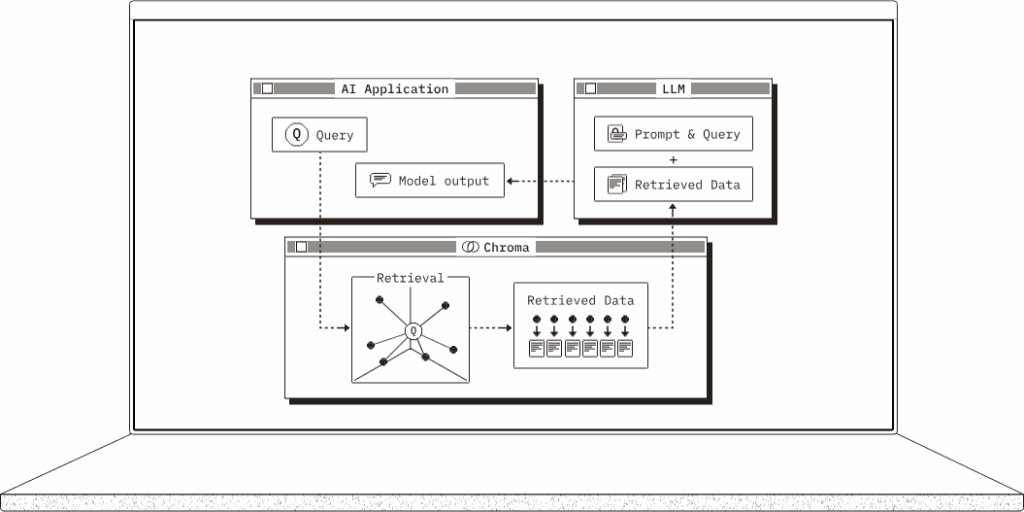

ChromaDB (Lightweight, Local Vector Store)

Chroma is a simple, local-first vector store for prototyping and dev environments. It’s ideal for building small RAG systems without spinning up a full service.

How we use it in GenDD: Used during early-stage development to mock and validate retrieval logic before moving to production-grade infrastructure.

Try this setup → Build a chatbot that searches your markdown-based project notes using embeddings stored in Chroma.

What to watch for: Best for development and demos. Not built for high concurrency or large-scale querying.

The Rise of the Agentic Mesh (and How it Works with GenDD)

Most developers who are using AI interact with it in a single-threaded way. One prompt, one model, one result. But that’s starting to change.

Agentic mesh systems coordinate multiple agents, each with their own role and responsibilities, into a collaborative structure. These agents observe, reason, hand off to each other, and even learn over time.

While still early, we’re already seeing agentic mesh structures take shape in real products. For example:

- A retrieval agent pulls documents or context from Pinecone or ChromaDB

- A planner agent breaks a task into steps

- An executor agent hits APIs, modifies code, or writes outputs

- A review agent checks the result or escalates issues for feedback

These agents often operate over shared memory, respond to system prompts, and rely on structured interfaces (like LangChain tools or n8n flows).
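The four roles above can be sketched as plain functions that pass one shared memory object down the line. This is a toy illustration under heavy assumptions: the agents are stubs with no model calls, `DOCS` is a made-up two-entry store standing in for a vector database, and none of the names come from LangChain or n8n.

```python
from typing import Callable

Memory = dict  # shared memory every agent reads from and writes to

# Hypothetical document store standing in for a vector database.
DOCS = {"refunds": "Refunds are issued within 14 days.", "sso": "SSO uses SAML 2.0."}


def retrieval_agent(memory: Memory) -> None:
    # Naive keyword match; a real agent would run a similarity search.
    words = memory["task"].lower().split()
    memory["context"] = [text for key, text in DOCS.items() if key in words]


def planner_agent(memory: Memory) -> None:
    # Stub plan; a real planner would derive steps from the task.
    memory["plan"] = ["read context", "draft answer"]


def executor_agent(memory: Memory) -> None:
    # Stub: a real executor would call an LLM or an API for each plan step.
    memory["draft"] = " ".join(memory["context"])


def review_agent(memory: Memory) -> None:
    # Escalate to a human when the draft is empty instead of shipping it.
    memory["status"] = "approved" if memory["draft"] else "escalated"


MESH: list[Callable[[Memory], None]] = [
    retrieval_agent, planner_agent, executor_agent, review_agent,
]


def run_mesh(task: str) -> Memory:
    """Run each agent in order over one shared memory dict."""
    memory: Memory = {"task": task}
    for agent in MESH:
        agent(memory)
    return memory
```

A task that mentions "refunds" ends with `status` set to `"approved"`, while one the store knows nothing about ends as `"escalated"`. Real meshes add branching and parallelism, but the contract stays the same: each agent reads and writes a well-defined slice of shared state.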

Our Advice for Engineering Teams Using Agents in GenDD

If you’re thinking about implementing agentic systems, here’s what we recommend:

- Start with one well-defined agent. Give it a clear role (e.g. write acceptance criteria), limit its scope, and let it operate with human oversight.

- Use your existing tools. Many orchestration platforms (like LangChain or n8n) are already mesh-ready—you don’t need to reinvent infrastructure.

- Design for escalation, not perfection. Agents will fail. Plan for that. Give them fallback behavior or make it easy for humans to step in.

- Treat agents like teammates. Give them documentation, constraints, and well-defined interfaces. The clearer your instructions, the better your results.
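"Design for escalation" can be as small as a wrapper around the agent call. The sketch below is one hypothetical way to do it: retry a bounded number of times, check each output against an acceptance rule, and return a hand-off record for a human when nothing passes. The function name, the `needs_human` status, and the default of two attempts are all assumptions for illustration.

```python
from typing import Callable, Optional


def run_with_escalation(
    agent: Callable[[str], str],
    task: str,
    is_acceptable: Callable[[str], bool],
    max_attempts: int = 2,
) -> dict:
    """Try the agent a few times; hand the task to a human if no attempt passes the check."""
    last_error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        try:
            output = agent(task)
        except Exception as exc:  # agents will fail; record why and retry
            last_error = str(exc)
            continue
        if is_acceptable(output):
            return {"status": "done", "output": output, "attempts": attempt}
        last_error = "output failed the acceptance check"
    # Fallback: nothing passed, so return a record a human can pick up.
    return {
        "status": "needs_human",
        "task": task,
        "reason": last_error,
        "attempts": max_attempts,
    }
```

Keeping the reason in the hand-off record matters: whoever steps in sees whether the agent crashed or simply produced something that failed review.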

Building Your Own AI Coding Tool Stack

There’s no single “correct” stack for building with AI. But there are principles that separate teams who get results from those stuck in experimentation.

Start with these three:

- Prioritize feedback loops. AI works best when humans guide the process rather than prompt and pray. Build checkpoints into your workflow. Review. Adjust. Re-prompt. Use context-aware code suggestions to iterate instead of starting from scratch.

- Think in orchestration, not just tools. A great tool is only as good as the system around it. Instead of asking “Which LLM should we use?”, ask “Who’s orchestrating what? Where does the AI step in? Where do humans still need to lead?” Intelligent code assistance and automated documentation only add value when they’re integrated into your actual development process.

- Use tools that match your team’s depth. You don’t need to start with an agent mesh. If you’re just getting going, combine Cursor (for AI-powered code generation and refactoring), ChatGPT (for planning, writing, and code documentation), and n8n (for chaining workflows using natural language descriptions). That stack alone supports code security, code optimization, and rapid execution across existing codebases.

Build the GenDD Way Today

At HatchWorks AI, we don’t just test tools. We help teams structure their agent workflows, pick the right IDEs, and build AI-native systems that help them build better, faster.

If you’re looking to:

- Choose the best AI developer tools for your team’s goals

- Set up intelligent code assistance and human-in-the-loop checkpoints

- Pilot a context-aware code generation or agent mesh workflow

- Integrate code completion and documentation tools into your SDLC

We’ll help you get there.

Fill out the form, and we’ll help your team turn a scattered AI experiment into a repeatable system with the best tooling and processes in place.