The majority of Generative AI pilots, often utilizing large language models (LLMs), don’t make it to production. After blazing through a successful Proof of Concept, they fail to meet the criteria needed to generate regular value at scale in an MVP, especially when faced with more complex tasks in natural language processing

The 80/20 rule is at play here, exacerbated because the best practices around workflows and tooling are still in flux. There’s a long tail, and the question is: how do I get to the end of it?

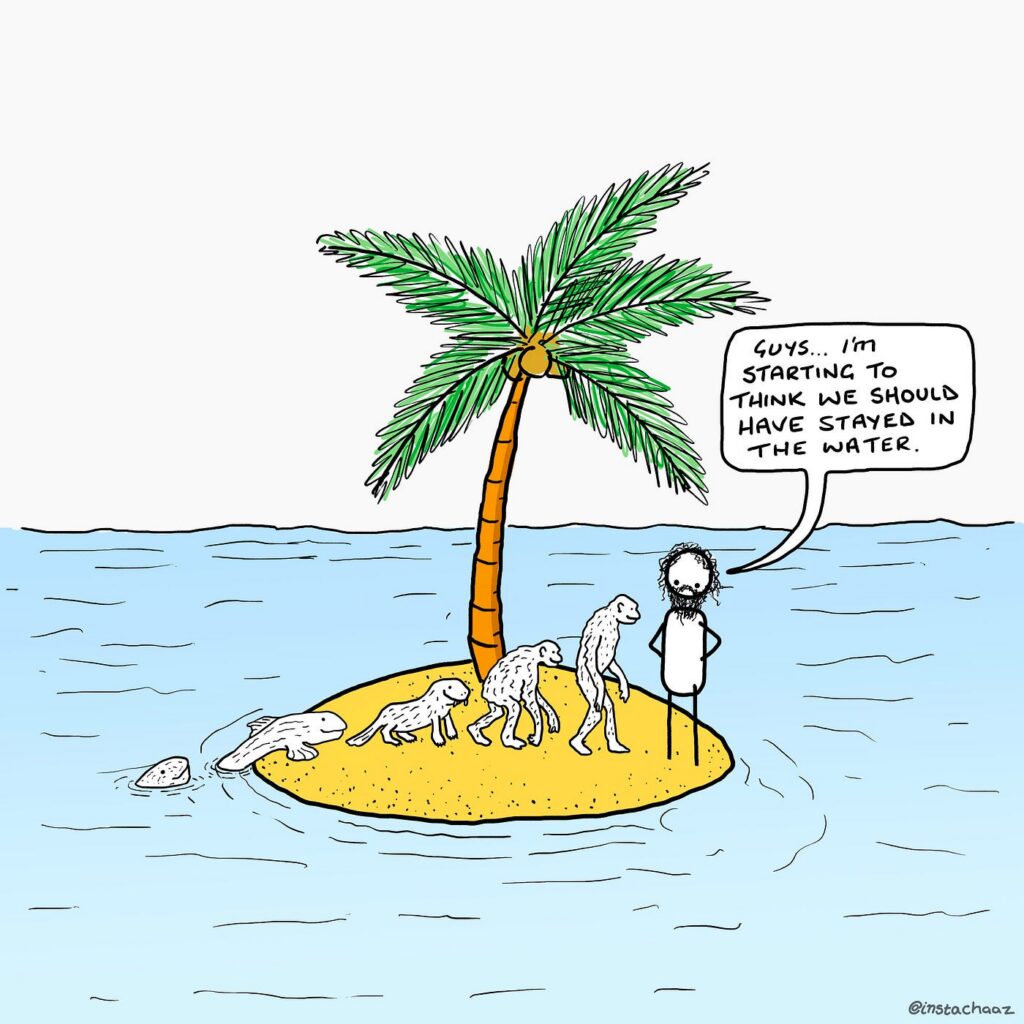

In this blog, we’ll dive deeper into this problem, exploring the factors that both help and harm productionalization. Rather than treat Generative AI as its own island, we’ll bridge it back to the worlds of Classical AI and Software Development, to empower you to see that these worlds aren’t so different after all. We’ll talk about the tools and processes and help you identify how to navigate the space.

Avoid Premature Optimization

“You shouldn’t pave the cow path.”

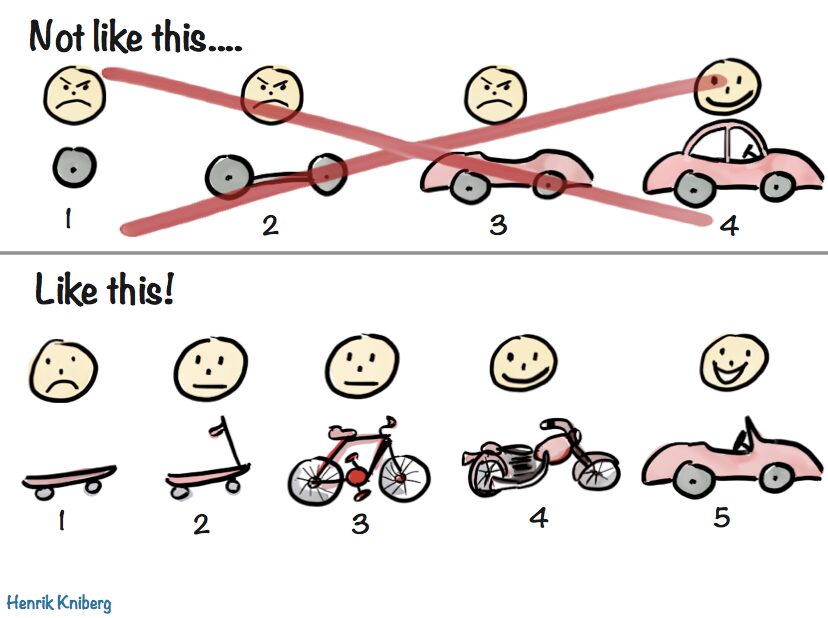

Many idioms relate a similar lesson. Look at the whole before finetuning the part. If the whole may change, why cast the part in stone? This wisdom is the cornerstone of Agile philosophy: products must be built iteratively, continuously validating requirements.

In software development, the most common incarnation of this wisdom is “avoid premature optimization.” Here, what classifies optimization as premature is risk. Specifically, the risk of changing requirements – whether due to the end-user, the business, or the other systems – forcing the code to change too.

Most are familiar with the process of tweaking code to perform better against a specific heuristic function, such as computational efficiency, memory, or speed. There are sophisticated techniques to measure and improve each of these.

Fewer are familiar with the importance and risks of organizing code to give maintainability – but it is no less important. If the former lets your code scale to traffic, the latter lets your codebase scale to new complexity.

For imperative programming, both the SOLID principles and the Unix philosophy provide a set of guidelines to follow. But there is still an art in applying them.

Take the Do Not Repeat Yourself (DRY) Principle. If you too eagerly pull out a piece of repeated logic from two services, you risk creating an unnecessary shared dependency across them. If the requirements of either service change, the function will have to change too and any service that calls it may potentially be impacted.

For those familiar with SOLID principles, the Dependency-Inversion Principle seems like our way out of this.

Depend upon abstractions, [not] concretions

High-level modules should not import anything from low-level modules. Both should depend on abstractions (e.g., interfaces). Abstractions should not depend on details. Details (concrete implementations) should depend on abstractions.

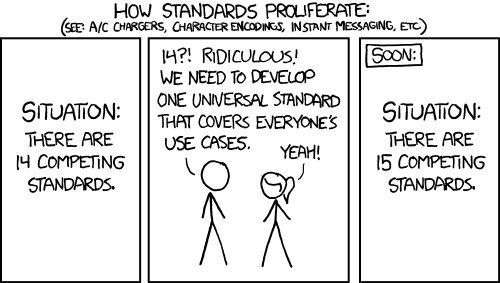

Abstraction Is All You Need, Until It Isn’t

It is not this blog post’s goal to suggest a single set of abstractions that are somehow universal and correct – in fact, that is precisely what this blog post warns against.

But what is abstraction anyway?

Abstraction is like how when you drive a car, you don’t need to understand the engine to drive. The dashboard gives you the controls you need, hiding the complex mechanics. In tech, abstraction simplifies complex systems, so users can interact with just what they need without worrying about the underlying details. When done incorrectly, these complicate rather than simplify and cause more harm than good.

For abstractions to be effective, they must be catered to the particularities of the problem you’re solving and the path you’re taking to solve it. A one-size-fits-all approach only works for the most well-defined use cases. The space around Generative AI, particularly language models like LLMs, just isn’t there – and yet there’s no shortage of LLM-branded tools implying it is.

These rigid abstractions end up causing challenges for LLM-based applications.

This isn’t necessarily a bad thing. These tools empower people to build working software faster with LLMs. They take risks, presenting workflows that respond to usage patterns. Their popularity indexes, at least in part, their adoption and the value they add. However, in practical usage, these tools may not always align with the project’s needs, and their rigid abstraction levels can cause substantial heartburn for the teams seeking more control over their workflows.

But the same tools that speed you up in delivering your pilot might also slow you down on the road to production. As an ever-growing number of articles and blog posts shows, even the most popular tools aren’t immune. Many enterprise teams end up sunsetting LLM native libraries like LangChain and LlamaIndex in favor of communicating with LLMs directly, without additional conceptual abstractions.

Consider this from Octomind’s Why We No Longer Use LangChain

LangChain was helpful at first when our simple requirements aligned with its usage presumptions. But its high-level abstractions soon made our code more difficult to understand and frustrating to maintain. When our team began spending as much time understanding and debugging LangChain as it did building features, it wasn’t a good sign."

The effort needed to hack LangChain to do what I want it to do would cause insane amounts of technical debt"

🎙️ For more information, check out my bonus Talking AI podcast episode

The issue is straightforward. The abstractions were defined before the usage patterns were. The tools were built and the builders were tasked with figuring out how to use them after the fact. They are reengineering their workflows to fit into the available tools, introducing inefficiencies and risks along the way. Here, the abstractions are defining rather than reflecting usage patterns – and for many use cases, these definitions just aren’t all that useful.

Building Right For the Right Moment

So what is an aspiring team to do? What’s the right way to balance short-term velocity with long-term scalability? Here are three guidelines to find the right tools, with the right abstractions, for the right moment.1. Think process first, tooling second.

With all of the excitement and glamor of the latest frameworks, it’s easy to fall into tool-centered thinking and become a hammer looking for a nail. It’s important we move beyond this and think bigger than any single tool.

To do this, we should understand the workflows that they make possible. For example, the typical RAG (Retrieval-Augmented Generation) workflow is made up of a series of processes, including:

- ETL Jobs, e.g. loading in unstructured data into the vector store

- Prompt Engineering, e.g. tuning the inputs we provide to the LLM to optimize our output.

- Orchestration, e.g. performing the potentially multistep inference workflow.

- Caching, e.g. maintaining a secure state of active conversational history.

- Observability, e.g. logging the information.

Focusing on the processes helps us understand all of the moving parts, how to optimize each, and consequently, how to identify the ideal architecture to use. As the space around LLMs continues to evolve rapidly and handle more complex tasks, it’s important to keep sight of what is really going on under the hood so that we can adapt our approaches accordingly.

We took this modular approach to building our RAG-as-a-Service offering. Learn more here.

2. Don’t treat Generative AI as an island.

Decades of Software Development and Machine Learning have given us key learnings around iteratively writing software – we shouldn’t reinvent the wheel. Software engineers working with artificial intelligence should not treat Generative AI and large language models as isolated fields.

3. Build, forecast, adapt

Lastly, it’s crucial to take an iterative, nimble approach.

We’ve already commented that because software is a living, breathing thing, it’s impossible to know all of the requirements upfront. We learn as we build and get user feedback. Additionally, with emerging technology such as Generative AI, the space will continue to mature as we build. New capabilities will be unlocked, new ways of doing things will be established, and new tools will be eagerly celebrated.

It’s therefore crucial that we regularly allocate time to adapt our architecture to both our new learnings and the new developments in the space. However, rather than just adopting what has come, there’s a chance to future-proof by forecasting what the growth of our software as well as that of the space will look like.

For example, at the time of writing, these are a few trends in the LLM space:

- Multimodal inputs: expanding the input of a model to seamlessly include combinations of text, images, and videos.

- Multimodel workflows: combining LLMs and SLMs into a singular workflow so that each can do the task they are best for.

- Structured outputs: models returning data in formats that are more ready to be actioned on.

- Increasing token availability: sending more data into your LLM at more efficient prices.

- Context caching: offloading conversational history from the input window by storing it in a dedicated data store.

An architecture that wishes to be future-proof should be built with these in mind.

Conclusion

It is easy to build Generative AI pilots, but hard to do so well. As a result, the majority of projects never make it to production. Recognizing where Generative AI and LLM projects struggle allows us to adjust our strategies accordingly.

Some factors contributing to this are the prevalence of heavily opinionated tools, the inflated sense that technology must be reinvented, and the rapid rate of change in the field. As an antidote, we recommend sticking to the basics – seeing Generative AI as on a continuum with software development and machine learning, and leveraging core learnings from both. By focusing on practical usage and real-world applications, teams can better navigate the challenges.

FAQs About Abstraction

Why do most LLM projects fail to scale beyond Proof of Concept?

Let’s break this down: most LLM projects struggle to move past the Proof of Concept stage because they often overlook the complexities of real-world integration and scalability. The initial excitement around deploying an LLM can overshadow practical considerations like data pipelines, system orchestration, and user needs. Think of it as building a prototype car without considering the road conditions it will face—it might look impressive, but it won’t perform where it matters. The real challenge lies in bridging that gap between a promising demo and a production-grade system that’s maintainable, scalable, and aligned with business objectives.

What role do software engineers play in the success of LLM projects?

Software engineers are the backbone of any successful LLM project. Think of this as orchestrating a symphony where each instrument must play in harmony. They ensure that the LLM integrates seamlessly with existing systems, handling everything from scalable architectures to robust data workflows. Without their expertise in building the necessary abstractions and infrastructure, even the most advanced language models can become siloed tools that don’t deliver real value. It’s all about balancing cutting-edge AI capabilities with solid software engineering principles to create systems that are both innovative and reliable.

How can natural language models be used for more complex tasks?

The real challenge lies in leveraging natural language models for complex tasks without overcomplicating the system. It’s about building the right abstractions that allow the model to handle complexity while remaining accessible and maintainable. Think of this as designing a library catalog that not only stores books but also understands reader preferences to suggest new titles. By integrating LLMs with classical AI techniques and focusing on data-centric approaches, we can extend their capabilities to tackle more intricate problems effectively.

What are abstraction levels in LLM-based projects?

Abstraction levels in LLM-based projects refer to the different layers at which we manage and simplify complexity within the system. Think of them as tiers in software architecture, where each layer handles specific functionalities while hiding the complexities of the underlying processes. This hierarchical structuring allows developers to focus on high-level operations without getting bogged down by low-level details, facilitating better orchestration and adaptability in the system.

What is conceptual abstraction, and why is it important in LLMs?

Conceptual abstraction involves distilling complex operations into general concepts or modules that are easier to understand and manage. It’s crucial in LLMs because it helps us build systems that are both powerful and flexible. Abstraction is only useful if it enables the system to adapt to changing requirements and integrate seamlessly with other components. By focusing on conceptual abstraction, we ensure that our LLMs can evolve over time, accommodating new features and technologies without requiring complete overhauls.

What should teams focus on to bring LLMs to production successfully?

It’s all about balancing innovation with practicality. Teams should focus on building robust data pipelines, scalable architectures, and user-centric designs. Avoiding premature optimization and over-abstraction is key; instead, adopt an iterative approach that allows for fine-tuning as real-world usage patterns emerge. Think of this as paving the cow path—observing how the system is used and refining it accordingly. By emphasizing adaptability, data-centric practices, and solid software engineering principles, teams can successfully transition LLMs from concept to production.

We’re ready to support your project!

Instantly access the power of AI with our AI Engineering Teams.