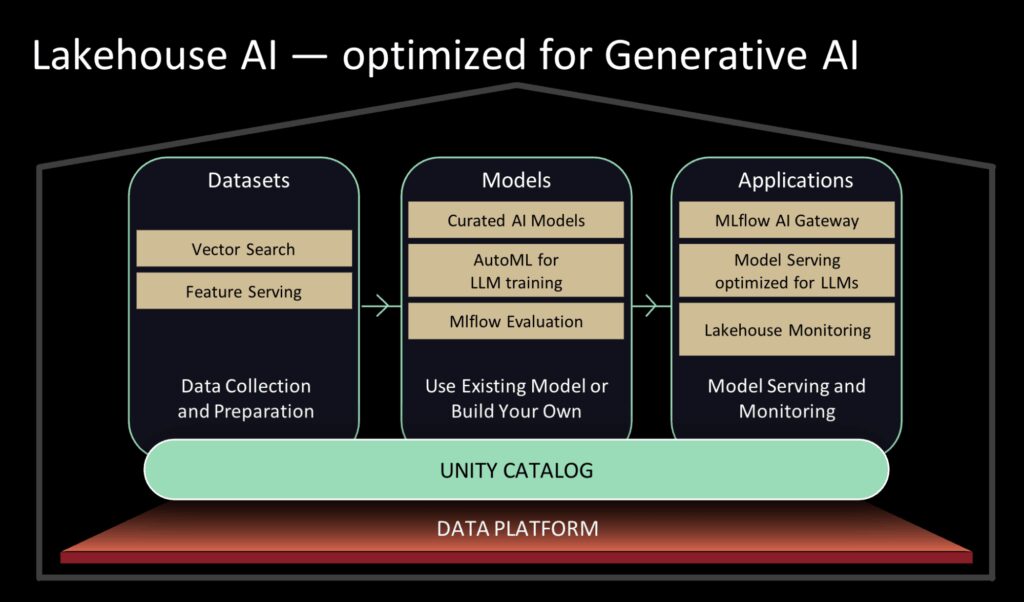

With Lakehouse AI, your data powers generative AI applications that transform customer experiences, unlock hidden insights, and boost your team’s productivity.

Too good to be true? See for yourself. In this article, we’ll show exactly how Lakehouse AI helps teams like yours quickly build, deploy, and deliver impactful AI solutions.

What is Lakehouse AI?

Pioneered by Databricks, Lakehouse AI is a data-centric approach to building generative AI applications.

It combines the flexibility of data lakes with the structured analytics capabilities of data warehouses, tailored specifically for AI workloads. With it, you can deploy AI models within your organization and deliver AI solutions to your customers.

That could look like a system that parses your customer data to help answer client queries, or one that helps your developers quickly query codebases, documentation, and internal data to build context-aware applications and debug complex issues in real time.

With innovations such as Vector Search, Lakehouse Monitoring, and GPU-powered Model Serving optimized for large language models (LLMs) and other machine learning models, Lakehouse AI reduces model serving latency. This enables real-time AI applications and lets organizations derive immediate value from their AI models.

Benefits of Lakehouse AI

Now that you understand what Lakehouse AI is, here’s how it can specifically benefit your team:

Faster AI Deployments

Lakehouse AI’s unified architecture integrates data storage, analytics, and model serving, accelerating deployments.

This setup reduces the delays typically associated with deploying AI models, enabling teams to move from development to production faster. From there, organizations can quickly iterate and refine their models, shortening the time-to-value and improving their competitive edge.

What’s not to love?

Improved Data Governance

If there’s one thing you can always rely on Databricks for, it’s their approach to data governance and data management. Their Lakehouse AI feature is just as reliable and robust.

With clear lineage, traceability, and auditability, businesses maintain complete visibility into their AI assets.

This transparency simplifies regulatory compliance, enhances data security, and ensures responsible AI use across the enterprise. Teams can confidently deploy AI models knowing that governance controls are firmly in place.

Enhanced Collaboration

Lakehouse AI brings together data scientists, engineers, and analysts onto a common platform. By bridging the gap between traditionally siloed data and AI teams, it fosters better communication, reduces redundancies, and accelerates decision-making.

This unified data layer and environment significantly improves efficiency and productivity, enabling smoother collaboration on complex AI projects.

Flexibility for Innovation

The inherent flexibility of Lakehouse AI empowers businesses to experiment, test, and scale AI applications more efficiently than ever before.

Organizations gain the freedom to innovate by easily adapting their AI models, experimenting with different data sets, and iterating rapidly to refine performance. This agility helps companies adapt quickly to market changes and capitalize on new AI-driven opportunities.

Data Lakes vs. Data Warehouses vs. Lakehouse AI

Data Lakes are excellent at handling vast volumes of raw, unstructured data, making them suitable for flexible, exploratory analysis. However, they often fall short in supporting structured analytics and AI workloads because data can become disorganized and governance can be challenging.

Data Warehouses, on the other hand, provide strong structure, robust analytics capabilities, and clear governance, which are crucial for business intelligence.

Yet, they’re less flexible when dealing with unstructured data and can slow down innovation by requiring strict schema definitions upfront, making them less suitable for rapidly evolving AI-driven projects.

Lakehouse AI combines the best of both worlds by blending the flexibility and scalability of data lakes with the analytics capabilities and governance found in data warehouses.
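To make the contrast concrete, here is a minimal sketch in plain Python of the two older approaches: a warehouse-style store that enforces a schema when data is written, and a lake-style store that accepts anything and applies structure only when data is read. The function names, the `REQUIRED_SCHEMA` fields, and the sample records are all invented for illustration; this is not how any particular product implements either model.

```python
# Hypothetical schema for a warehouse-style table (illustrative only).
REQUIRED_SCHEMA = {"customer_id": int, "amount": float}


def warehouse_insert(table, record):
    """Schema-on-write: reject any record that does not match the declared schema."""
    if set(record) != set(REQUIRED_SCHEMA):
        raise ValueError(f"unexpected fields: {sorted(record)}")
    for field_name, expected_type in REQUIRED_SCHEMA.items():
        if not isinstance(record[field_name], expected_type):
            raise ValueError(f"{field_name} must be {expected_type.__name__}")
    table.append(record)


def lake_append(store, record):
    """Schema-on-read: store the record as-is and interpret it later."""
    store.append(record)


def read_amounts(store):
    """Apply structure at read time, skipping records without a numeric amount."""
    return [r["amount"] for r in store if isinstance(r.get("amount"), (int, float))]


order = {"customer_id": 1, "amount": 19.99}
ticket = {"note": "free-text support ticket"}  # unstructured record

lake = []
lake_append(lake, order)
lake_append(lake, ticket)  # accepted without complaint

warehouse = []
warehouse_insert(warehouse, order)
try:
    warehouse_insert(warehouse, ticket)
    rejected = False
except ValueError:
    rejected = True  # the warehouse refuses data that does not fit the schema
```

The lake keeps both records but leaves every reader to work out what is usable, while the warehouse stays clean at the price of turning away anything that does not fit. A lakehouse aims to offer both behaviors over the same data.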

Benefits Over Traditional Architectures

Compared to traditional data lakes and data warehouses, Lakehouse AI delivers clear advantages:

- Less Redundancy: A unified platform eliminates the need to replicate data across multiple systems. Organizations save resources and simplify their data infrastructure, lowering operational overhead.

- Faster Analytics: With a single unified architecture, teams can rapidly process data for AI workloads without delays from data transfers and integration across disparate systems. This accelerates insights, enabling real-time decision-making.

- Reduced Complexity: Lakehouse AI simplifies infrastructure by managing diverse data types, AI models, analytics, and governance through one integrated solution. This approach significantly reduces complexity, making it easier for teams to collaborate effectively and innovate at speed.

How Lakehouse AI Supports AI Model Development

From data preparation to deployment, Lakehouse AI simplifies every step, allowing data engineering teams to focus on creating value rather than managing fragmented tools and systems.

It Prepares Data for AI Models in a Unified Way

Effective AI model development begins with reliable, high-quality datasets.

Lakehouse AI unifies structured, semi-structured, and unstructured data into one cohesive environment.

By combining the flexibility of data lakes and the structured approach of data warehouses, Lakehouse AI enables organizations to easily clean, transform, and enrich data without redundancy or data silos. This unified view accelerates dataset preparation, ensuring AI models have timely access to high-quality, trusted data.

It Trains, Validates, and Deploys Models on Lakehouse Infrastructure

Once data is prepared, Lakehouse AI makes model training, validation, and deployment easier than ever:

| Model Training | Model Validation | Model Deployment |
|---|---|---|
| Lakehouse AI provides optimized environments for training complex AI models at scale, including GPU-powered computing resources specifically tailored for generative AI and large language models (LLMs). This accelerates the training process and allows data teams to rapidly iterate and improve model performance. | With built-in validation and experimentation capabilities, Lakehouse AI ensures thorough testing of AI models against production-like conditions. Teams can quickly evaluate model accuracy and effectiveness, fine-tuning parameters and features within the same integrated environment. | Lakehouse AI enables fast, reliable, and scalable deployment through purpose-built features such as GPU-powered Databricks Model Serving. This reduces latency, ensuring AI models are deployed efficiently and perform reliably in production environments. |

It Leverages Databricks-Specific Tools and Integrations

Databricks enhances the Lakehouse AI experience with specialized tools and integrations designed explicitly for the AI model lifecycle:

- Databricks Model Serving: Optimized for generative AI workloads, Databricks Model Serving leverages GPU-powered infrastructure to reduce latency and provide real-time inference. This allows teams to serve complex models—such as LLMs—with speed, reliability, and scalability.

- MLflow for Model Versioning: Databricks’ MLflow provides end-to-end management and versioning of AI models. It helps track experiments, manage model revisions, and maintain clear lineage throughout development. This ensures reproducibility, transparency, and governance at each step.

- AutoML Integrations: Databricks’ AutoML features empower teams to quickly prototype and iterate on models without manual tuning. AutoML automates common tasks such as feature selection, algorithm choice, and hyperparameter tuning, significantly accelerating the model-development process and allowing teams to test ideas rapidly.
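To give a feel for what model versioning with lineage involves, here is a toy in-memory registry written in plain Python. It is a conceptual sketch only and does not use or mirror the MLflow API; the `ToyRegistry` class, the model name, the parameter values, and the table names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    version: int
    params: dict        # settings used for this training run
    metrics: dict       # evaluation results for this run
    training_data: str  # lineage: which dataset produced this version


class ToyRegistry:
    """Keeps every version of every model so runs can be compared and reproduced."""

    def __init__(self):
        self._versions = {}

    def register(self, name, params, metrics, training_data):
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, dict(params), dict(metrics), training_data)
        versions.append(entry)
        return entry

    def best(self, name, metric):
        """Return the registered version with the highest value for the given metric."""
        return max(self._versions[name], key=lambda v: v.metrics[metric])


registry = ToyRegistry()
v1 = registry.register(
    "support-bot", {"epochs": 1}, {"accuracy": 0.81}, "hypothetical.tickets_v1"
)
v2 = registry.register(
    "support-bot", {"epochs": 3}, {"accuracy": 0.86}, "hypothetical.tickets_v2"
)
best = registry.best("support-bot", "accuracy")
print(best.version, best.training_data)
```

Because each version carries its parameters, metrics, and source dataset, a team can answer "which data and settings produced the model in production?" without guesswork. A managed tool adds persistence, access control, and a UI on top of the same idea.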

What Are the Costs of Lakehouse AI?

Lakehouse AI solutions—like those provided by Databricks—typically operate on consumption-based, pay-as-you-go models, meaning the actual costs vary according to your usage, workload demands, and specific feature utilization.

While direct costs matter, we must consider the broader ROI of Lakehouse AI solutions.

With faster AI deployments, improved collaboration, reduced redundancy, and enhanced governance, Lakehouse AI can lower the total cost of ownership compared to fragmented, traditional data architectures—especially as AI workloads grow.

But there are a few ways organizations manage those expenses to get even more bang for their buck:

- Cost Monitoring and Governance: Databricks provides built-in tools to track resource consumption, ensuring transparency and enabling proactive cost control.

- Optimized Resource Usage: Leveraging auto-scaling, scheduling clusters, and right-sizing compute resources allows teams to align expenses closely with actual workloads.

- Reserved or Committed Usage Plans: For predictable workloads, organizations may opt for reserved-instance pricing, volume discounts, or enterprise agreements to manage and predict costs effectively.

Factors Influencing Lakehouse AI Costs:

1. Data Storage and Processing

Costs are driven primarily by how much data you store, process, and query within your lakehouse. Since Lakehouse AI unifies your analytics and AI workloads into a single platform, you’re paying for storage and compute resources based on actual usage rather than fixed hardware.

2. GPU and Compute Resources for AI Models

Generative AI workloads, particularly Large Language Models (LLMs), can demand GPU-intensive processing. Databricks charges based on the specific compute resources you select, including GPU instances optimized for model training and serving. Prices vary depending on the GPU type, region, and uptime.

3. Model Serving and Deployment

Deploying and serving AI models—especially real-time, GPU-powered deployments—can also influence costs. Databricks’ GPU-powered Model Serving, designed specifically for low-latency AI use cases, has associated resource costs proportional to deployment scale, inference load, and uptime requirements.

4. Premium Features and Tools

Advanced Lakehouse AI functionalities like Vector Search, AutoML, Lakehouse Monitoring, and integrated version-control tools (e.g., MLflow) typically come as part of your Databricks subscription. But certain enterprise-grade functionalities or specialized tools may have additional licensing costs or require higher-tier subscriptions.
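The first three factors can be combined into a rough back-of-the-envelope estimate. The sketch below assumes a simplified pricing model in which compute is billed in generic usage units per hour and storage per terabyte. Every rate and quantity is a placeholder chosen for easy arithmetic, not a real Databricks or cloud provider price, and `Workload` and `monthly_estimate` are names made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    units_per_hour: float   # hypothetical billing units consumed per hour of uptime
    hours_per_month: float  # how long the workload actually runs


def monthly_estimate(workloads, price_per_unit, storage_tb, price_per_tb):
    """Return (compute, storage, total) for one month of usage."""
    compute = sum(w.units_per_hour * w.hours_per_month for w in workloads) * price_per_unit
    storage = storage_tb * price_per_tb
    return compute, storage, compute + storage


# All figures below are placeholders, not real prices.
workloads = [
    Workload("nightly-etl", units_per_hour=4.0, hours_per_month=100.0),
    Workload("gpu-model-serving", units_per_hour=10.0, hours_per_month=720.0),
]
compute, storage, total = monthly_estimate(
    workloads, price_per_unit=0.5, storage_tb=10.0, price_per_tb=20.0
)
print(f"compute={compute:.2f} storage={storage:.2f} total={total:.2f}")
# prints: compute=3800.00 storage=200.00 total=4000.00
```

Even with made-up numbers, the shape of the result is instructive: the always-on GPU serving endpoint accounts for 7,200 of the 7,600 compute units, which is why uptime and right-sizing tend to matter more than storage volume.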

Best Practices for Any Lakehouse AI Platform

Here’s a rapid-fire list of best practices you should follow when implementing Lakehouse AI:

- Leverage automation for data ingestion, cleaning, and AI pipeline orchestration.

- Automate AI lifecycle components to streamline the development and deployment of AI models.

- Use existing models and fine-tune them on your organization’s data.

- Use vector search to index your data as embedding vectors and perform low-latency vector similarity searches.

- Convert data and queries into embeddings, and keep the data embeddings in a vector database for efficient model serving.
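For readers new to vector similarity search, the last two practices boil down to the idea sketched below in plain Python: documents and queries are represented as vectors, and the search returns the documents whose vectors point in the most similar direction. This is a brute-force illustration, not the Databricks Vector Search API. The three-dimensional "embeddings" and document names are invented, whereas real embeddings come from a model, have far more dimensions, and are searched through an index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the ids of the k documents most similar to the query vector."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy embeddings keyed by document id.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-rate-limits": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for an embedded customer question about refunds
results = top_k(query, index, k=2)
print(results)  # prints: ['refund-policy', 'shipping-times']
```

In a generative AI application, the documents returned by a search like this are typically passed to the model as context, so the quality of the embeddings and the index directly shapes the quality of the answers.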

Craving more information on how to get the most out of platforms like Databricks?

Check out our article that lists out, in depth, all our best practices.

Challenges of Implementing Lakehouse AI

And here are the most common challenges we see when customers implement Lakehouse AI in their systems:

- Transitioning fragmented legacy data into a unified Lakehouse environment can be difficult without structured migration support (Databricks offers resources to simplify this).

- Teams used to traditional data infrastructure may need significant training to manage a unified platform effectively.

- Poor data can lead to biases, hallucinations, and toxic output, making it difficult to evaluate Large Language Models (LLMs) without an objective ground truth label.

- Organizations struggle to know how many data examples are enough, which base model to start with, and how to manage the complexities of infrastructure required to train and fine-tune models.

Databricks: The Tool You Need for Lakehouse AI

If you want to use data efficiently in your generative AI applications, your best bet is to go with Databricks. They seamlessly integrate data lakes and data warehouses into a unified platform optimized explicitly for AI workloads.

With Databricks you get:

- Native Integration with Major Cloud Providers: Databricks tightly integrates with major cloud platforms, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These integrations make provisioning resources and managing AI workloads across hybrid and multi-cloud environments effortless.

- Advanced AI Features and Tools: Databricks offers specialized innovations tailored for generative AI, including GPU-powered Model Serving, Vector Search, Lakehouse Monitoring, MLflow for model version control, and built-in AutoML features, significantly accelerating AI development and deployment.

- Robust Data Governance and Collaboration: The platform combines scalability, unified governance, and comprehensive version control, empowering diverse teams—including data scientists, engineers, and analysts—to collaborate seamlessly on AI projects within a single environment.

Head to our Databricks service page to learn how we can help you make the most of your most valuable asset (your data) and the potential of AI.

Make Data Your Biggest Differentiator

We build scalable, modern data systems tailored for AI.

Using Databricks’ industry-leading platform, we ensure your data is ready, secure, and optimized for AI.